What is Conversational AI?

Explore what conversational AI is, how it works, and how it has evolved. Understand the core technologies behind it and how they operate together in production environments.

Discover the different types of conversational AI along with real-world applications. Gain insight into deployment strategies, monitoring and optimization practices, 2026 industry trends, and the key considerations for building reliable, scalable conversational AI systems.

Conversational AI is a technology that allows computers to talk with people in natural language through text or voice. Instead of rigid commands or confusing menus, it understands what you mean, keeps context across turns, asks follow-up questions when needed, and can retrieve information or take actions like booking, rescheduling, troubleshooting, or tracking an order.

Imagine this: you’re running late for work and suddenly realize you need to reschedule a doctor’s appointment. Instead of calling a clinic and waiting on hold, you open an app and type, “Can I move my appointment to next Friday?” The system understands what you want, checks availability, asks a quick follow-up, and confirms the new slot.

Later that day, you ask the food delivery app, “Where’s my food order?” and it replies, “Your order is out for delivery and should arrive in 10 minutes.” In the afternoon, you tell a voice assistant to reserve a restaurant table for your mother’s birthday next week. In the evening, you check the delivery status for her gift and get an instant update.

In all these cases, you’re not talking to a human, but it feels close. These experiences are powered by conversational AI.

Conversational AI can be integrated into almost any website or app as a virtual chatbot or a voice agent. Modern AI chatbots like Amazon Rufus and voice assistants such as Siri integrate conversational AI into their systems to make conversations feel more human.

To do this, conversational AI follows a clear process. First, it understands your message. If you speak, the system converts speech into text using automatic speech recognition (ASR). If you type, it processes the text directly. It then identifies your intent (i.e., what you’re trying to do) and extracts key details such as names, dates, locations, or amounts.

Next, the system decides how to respond. Based on your request, it may look up information from documents, databases, or APIs. It might execute a workflow, such as booking an appointment or resetting a password. If some information is missing, it can ask follow-up questions to clarify before continuing.

Once the decision is made, conversational AI generates a response in natural language. In chat-based systems, this appears as text. In voice-based systems, the response is converted from text into speech using text-to-speech (TTS). The reply is shaped to match the tone and style of the brand or use case, whether that’s professional, friendly, or neutral. Finally, it manages the back-and-forth of the conversation.

Good conversational AI remembers context across turns. If you say, “Where is my order?” and then follow up with, “Can I change the address?”, it understands you’re still talking about the same order. It can adjust as users change their minds or ask new questions.

Traditional vs. Modern Conversational AI

Conversational AI has come a long way, and there’s a big difference between older systems and modern, LLM-based ones.

Traditional (pre-LLM) conversational AI works a lot like interactive forms hidden inside a chat. Developers define specific “intents” such as “check balance” or “change address,” and then build fixed flows: if the user says X, go to step Y, then ask question Z. These systems require lots of labeled examples for each intent.

The upside is that they’re very reliable inside the flows you’ve designed and easy to predict. The downside is that they break easily when users phrase things differently, go off-script, or ask unexpected questions. Expanding them is hard and expensive, often requiring extensive professional services or consulting just to add new intents or flows.

Modern, LLM-based conversational AI takes a different approach. It uses large language models (LLMs), such as the models behind ChatGPT, trained on huge amounts of text. These systems are much better at understanding messy, real-world language, handling different phrasings, and generating fluent responses on the fly.

The benefits are clear: they’re faster to launch, don’t require everything to be predefined, and can cover a much wider range of questions with more human-like conversations. However, they still need structure for serious tasks like workflows, tools, and information retrieval. They can also be inconsistent if prompts, models, or data change, which is why testing, guardrails, and grounding in real knowledge are essential.

In short, conversational AI is what makes it possible for computers to stop feeling like devices you command and start feeling like systems you can actually talk to.

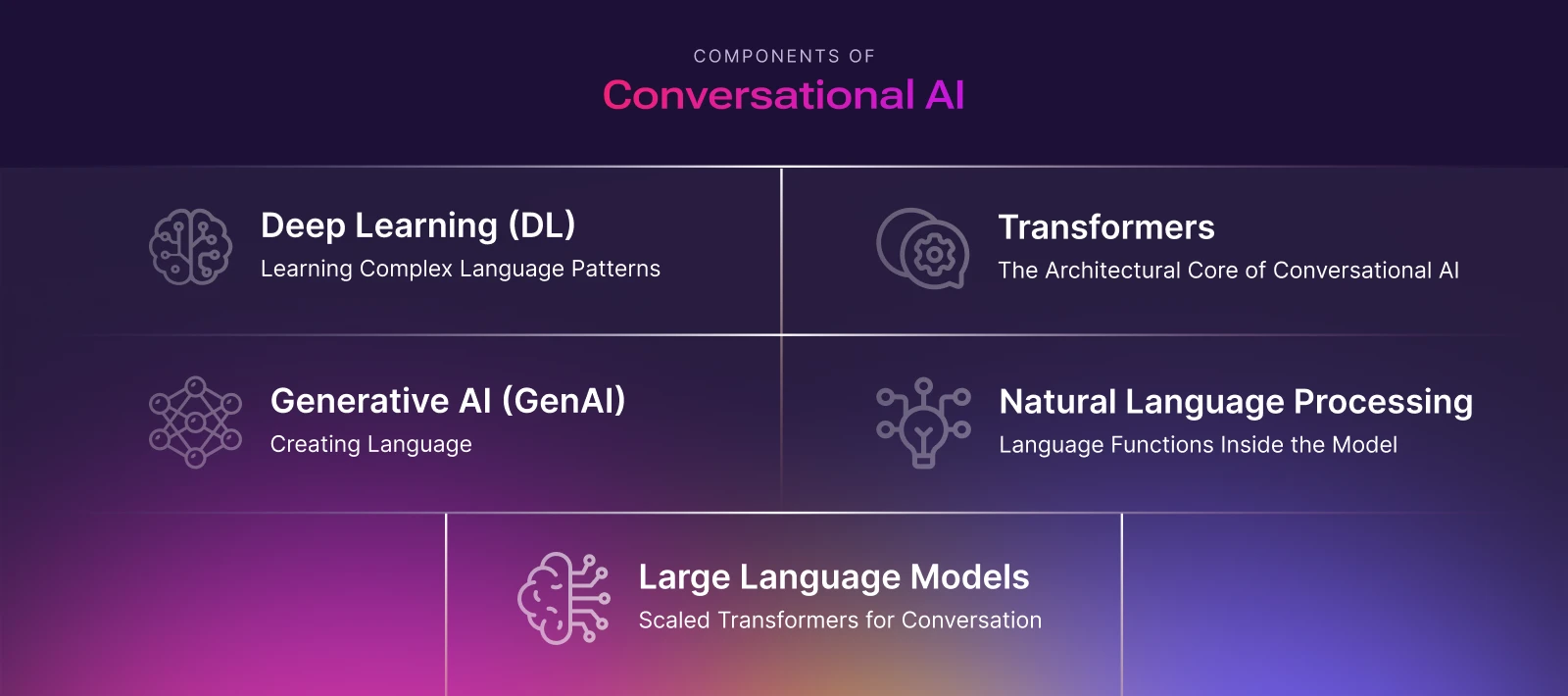

Key Components of Conversational AI


Conversational AI is not a single technology but a stack of interconnected capabilities that work together to understand human language, reason over context, and generate relevant responses. These components relate like nesting dolls: each layer builds on the foundations of the previous one, while sharing a common underlying architecture. Together, they form the intelligence layer of modern conversational systems.

At the core of this stack is deep learning, and more specifically, the transformer architecture, which unifies language understanding, generation, and long-context reasoning across text and speech.

Deep Learning (DL): Learning Complex Language Patterns

Deep Learning (DL) is a subset of machine learning that uses multi-layered neural networks to learn directly from large volumes of data such as text, audio, and images. In conversational AI, deep learning enables systems to move beyond rigid rules and statistical patterns to model the fluid, contextual nature of human language.

Earlier deep learning approaches for language, such as recurrent neural networks (RNNs) and convolutional neural networks (CNNs), struggled with long-range context and sequential dependencies. Modern conversational AI overcomes these limitations through transformer-based deep learning, which can process entire sequences in parallel and relate distant parts of a conversation effectively.

As a result, nearly all state-of-the-art conversational capabilities today, such as speech recognition (ASR), language understanding and reasoning (LLMs), response generation, and speech synthesis (TTS), are powered by deep learning models built on transformer architectures.

Transformers: The Architectural Core of Conversational AI

Transformers are the core neural network architecture that ties together deep learning, generative AI, NLP, and large language models in modern conversational AI. Rather than processing language step by step, transformers use self-attention to evaluate how every part of an input sequence relates to every other part.

This allows conversational systems to:

- Understand meaning using global context, not just local word order

- Track references across long, multi-turn conversations

- Validate intent and meaning based on prior dialogue and metadata

Transformers operate on sequences of tokens (words, sub-words, characters, or speech/audio features in ASR and TTS) that are converted into embeddings and refined through multiple stacked attention layers. At higher layers, each token's representation encodes rich semantic, syntactic, and contextual information. This architecture is what enables conversational AI systems to remain coherent, adaptive, and context-aware over time.
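To make self-attention concrete, here is a minimal sketch of single-head scaled dot-product attention in pure Python. It is a simplification, not a production transformer: the three 2-dimensional embeddings are made up, and the raw embeddings serve directly as queries, keys, and values instead of passing through learned projection matrices.

```python
import math


def softmax(scores):
    # Subtract the max before exponentiating for numerical stability
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Single-head scaled dot-product self-attention.

    Simplification: queries, keys, and values are the raw embeddings;
    a real transformer applies learned projections first.
    """
    d = len(tokens[0])
    outputs, weights_all = [], []
    for q in tokens:
        # Score this token against every token in the sequence
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in tokens]
        weights = softmax(scores)
        weights_all.append(weights)
        # New representation = attention-weighted mix of all value vectors
        out = [sum(w * v[j] for w, v in zip(weights, tokens)) for j in range(d)]
        outputs.append(out)
    return outputs, weights_all


# Three toy token embeddings (illustrative values)
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(embeddings)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of weights sums to 1 and shows how much one token draws on every other token, which is the mechanism that lets context from anywhere in the sequence shape each token's representation.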

Generative AI (GenAI): Creating Language, Not Just Classifying It

Generative AI is a specialized application of deep learning focused on producing new content rather than merely analyzing or classifying existing data. In conversational AI, this means generating natural language responses dynamically instead of selecting from predefined scripts.

Transformer-based generative models learn a probability distribution over token sequences, which lets them predict the next token from all previous tokens and context. During a conversation, the model repeatedly generates one token at a time, conditioning each new step on the evolving dialogue history, instructions, and retrieved knowledge.

This mechanism allows conversational systems to:

- Explain concepts in natural language

- Summarize information on the fly

- Adapt tone and detail to each user interaction
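The token-by-token generation loop can be sketched with a tiny lookup table standing in for the model. `TOY_MODEL` and its probabilities are invented for illustration. Unlike a real transformer, it looks only at the last token rather than the whole context. The loop uses greedy decoding (always take the most likely token), whereas production systems often sample.

```python
# Hypothetical toy "model": previous token -> distribution over next tokens.
TOY_MODEL = {
    "<start>": {"Your": 0.9, "The": 0.1},
    "Your": {"order": 0.8, "appointment": 0.2},
    "order": {"has": 0.7, "is": 0.3},
    "has": {"shipped": 0.9, "arrived": 0.1},
    "shipped": {"<end>": 1.0},
}


def next_token_distribution(context):
    # Toy stand-in: only the last token matters here, whereas a
    # transformer would attend over the entire context.
    return TOY_MODEL.get(context[-1], {"<end>": 1.0})


def generate(max_tokens=10):
    context = ["<start>"]
    for _ in range(max_tokens):
        dist = next_token_distribution(context)
        token = max(dist, key=dist.get)  # greedy decoding
        if token == "<end>":
            break
        context.append(token)  # each new token becomes part of the context
    return " ".join(context[1:])


print(generate())
```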

Natural Language Processing (NLP): Language Functions Inside the Model

Natural Language Processing (NLP) is the discipline focused on enabling machines to work with human language. Traditionally, NLP systems were composed of separate modules: intent classifiers, entity extractors, sentiment analyzers, and templated generators. These were often built using rules or lightweight statistical models.

In modern conversational AI, these functions are largely unified inside transformer-based models. Rather than treating understanding and generation as separate steps, a single model interprets user input holistically, using conversation history, context, and metadata to infer meaning and produce responses.

Capabilities that once required distinct NLP pipelines, such as intent detection, entity extraction, summarization, translation, and question answering, now emerge as behaviors of a shared transformer backbone. NLP no longer sits beside the model; it happens within it.

Large Language Models (LLMs): Scaled Transformers for Conversation

Large Language Models (LLMs) represent the most advanced class of transformer-based generative models. They are large-scale transformers trained on massive text corpora with objectives such as next-token prediction or masked-token reconstruction.

What distinguishes LLMs is not a fundamentally new mechanism, but scale:

- More parameters

- More data

- Longer context windows

At sufficient scale, transformer models exhibit emergent capabilities such as few-shot learning, long-range reasoning, instruction following, and coherent multi-turn dialogue. In conversational AI systems, LLMs act as the central "language brain," interpreting user input, reasoning over prior turns, and generating contextually appropriate responses in real time.

Older chatbots used separate NLU (Natural Language Understanding) systems to detect intent and extract entities. Modern conversational AI integrates this understanding directly into the LLM, which interprets user input using conversation history, metadata, and context.

For instance, if you say, "I'm late and might miss my appointment," the AI understands both the intent to reschedule and the implied urgency. It can respond naturally without requiring explicit instructions for every possible scenario.

In other words, the LLM "reads between the lines" like a human assistant who understands not only the words but also their meaning in context.

Transformers Across the Conversational AI Pipeline

Modern conversational AI systems are often multimodal, especially in voice-based experiences. Transformers play a central role across the entire pipeline:

- Automatic Speech Recognition (ASR) (Listening to the user): Transformer encoders model long-range temporal patterns in audio to convert speech into text. This is how the system "hears" what the user says.

- Dialogue and Reasoning (LLMs) (Understanding and deciding): Decoder-style transformers interpret the transcribed text, conversation history, and retrieved knowledge to decide what to say or do next, including calling tools and APIs.

- Text-to-Speech (TTS) (Speaking back to the user): Transformer-based TTS models generate prosody-aware intermediate representations that are turned into natural-sounding speech, allowing the assistant to respond in a consistent voice and style.

By using a shared architectural paradigm, conversational systems can maintain consistency, context, and control across listening (ASR), thinking (LLMs), and speaking (TTS).

Bringing It All Together

The technology used in conversational AI forms a clear hierarchy:

- Deep Learning: Neural networks for complex perception and language

- Transformers: The core architecture for sequence modeling and attention

- Generative AI: Models that create new content

- NLP: Language understanding and generation functions implemented within models

- Large Language Models: Scaled transformer models specialized for language

Together, they drive the intelligence of conversational AI systems. However, production-ready conversational AI in 2026 extends beyond models alone. These systems are integrated with orchestration layers, memory, safety mechanisms, and system controls that allow models to trigger actions, call tools, and operate reliably in real-world environments.

As a result, modern conversational AI is no longer purely reactive. By maintaining context, learning from behavior, and generating responses dynamically, it can anticipate user needs and offer timely assistance. For example, while a user is browsing a website's FAQs, a conversational assistant can proactively suggest relevant answers or shortcuts, creating a smoother, more intuitive experience.

How Does Conversational AI Work?


Modern conversational AI is a stateful, orchestrated system designed to understand users, maintain context, provide accurate answers, and even perform real-world actions like booking appointments or updating records.

At the core of the system is a large language model (LLM), supported by layers that manage conversation state, orchestrate workflows, retrieve knowledge, and connect to external tools. Together, these layers work like a team of specialists: one listens carefully (speech recognition), another remembers important details (orchestration layer), a third finds accurate information (RAG), and a fourth carries out tasks (tools/workflows). All of this is coordinated to create a smooth, human-like experience.

Capturing and Preparing User Input

Every interaction begins with user input, which can be text or voice. When a user types a message, the system cleans and normalizes the text. This includes removing unnecessary spaces, detecting the language, and attaching metadata such as the user’s ID, the device used, the time of the message, and the platform. For example, if you type, “Can I reschedule my dentist appointment?” the AI knows who you are and the context in which you are asking.

If the user speaks, Automatic Speech Recognition (ASR) converts the audio into text. The system also detects when you finish speaking and assigns a confidence score to the transcription, so uncertain results can be confirmed with the user rather than acted on blindly. For instance, if you say, “I need to move my appointment,” the AI converts it to text and captures timing and details so it can respond reliably.

Think of this step like a receptionist who not only takes your message but also notes who you are, when you called, and how you reached them.
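As a minimal sketch of this preparation step, the snippet below normalizes typed or transcribed text and wraps it with metadata. The `UserTurn` fields and the 0.75 confidence threshold are illustrative assumptions, not values from any particular platform, and language detection is omitted.

```python
import re
import unicodedata
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional

ASR_CONFIDENCE_THRESHOLD = 0.75  # hypothetical value; tune per ASR engine


@dataclass
class UserTurn:
    text: str
    user_id: str
    channel: str                            # e.g. "web", "mobile", "voice"
    device: str
    received_at: datetime
    asr_confidence: Optional[float] = None  # only set for voice input
    needs_confirmation: bool = False


def normalize_text(raw: str) -> str:
    # NFKC folds compatibility characters (e.g. full-width letters),
    # then runs of whitespace collapse to single spaces.
    text = unicodedata.normalize("NFKC", raw)
    return re.sub(r"\s+", " ", text).strip()


def prepare_turn(raw, user_id, channel, device, asr_confidence=None):
    turn = UserTurn(
        text=normalize_text(raw),
        user_id=user_id,
        channel=channel,
        device=device,
        received_at=datetime.now(timezone.utc),
        asr_confidence=asr_confidence,
    )
    # Flag low-confidence transcriptions so the assistant can confirm them
    if asr_confidence is not None and asr_confidence < ASR_CONFIDENCE_THRESHOLD:
        turn.needs_confirmation = True
    return turn


print(prepare_turn("  Can I   reschedule my dentist appointment? ", "u-123", "web", "laptop"))
```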

The Orchestration and State Layer (The Control Center)

The orchestration and state layer is the central command of the AI system. While the LLM is excellent at understanding and generating language, it doesn’t inherently manage conversation history, track task progress, or interact with external systems. The orchestration layer makes sure that every interaction is coherent, contextually aware, and goal-directed.

For example, if you asked the AI yesterday to book a dentist appointment and today you say or type, “Actually, make it next Friday,” the orchestration layer allows the AI to remember your previous request, your preferences, and the current step of the booking workflow. This ensures that the conversation flows naturally without requiring you to repeat details.

The orchestration layer also decides how each message should be handled. If the user asks a simple question, like “What are the clinic hours?” the AI can respond directly using the LLM. If the request involves a workflow, such as “Reschedule my appointment,” the AI follows structured steps like verifying identity, checking availability, and confirming the new time. When the request requires a tool or API action, such as checking a calendar for open slots, the orchestration layer plans the external call and passes the results back to the AI.

Think of the orchestration layer as a project manager coordinating a team of experts. It remembers what has already been done, decides what each team member should do next, and ensures tasks are completed efficiently, while the LLM acts as the skilled specialist carrying out the responses.
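The routing decision can be sketched as a small function over the conversation state. This is a hypothetical keyword-based router written for illustration. Real orchestration layers typically ask the LLM or a classifier to choose the route, but the shape of the decision is the same: continue an active workflow, start a new one, call a tool, or answer directly.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class ConversationState:
    user_id: str
    active_workflow: Optional[str] = None                 # e.g. "reschedule_appointment"
    slots: Dict[str, str] = field(default_factory=dict)   # details collected so far


def route(message: str, state: ConversationState) -> str:
    """Hypothetical keyword router; production systems usually let a model decide."""
    text = message.lower()
    # 1. Follow-ups ("Actually, make it next Friday") continue the active workflow
    if state.active_workflow is not None:
        return f"workflow:{state.active_workflow}"
    # 2. Multi-step tasks start a structured workflow
    if "reschedule" in text or "move my appointment" in text:
        state.active_workflow = "reschedule_appointment"
        return "workflow:reschedule_appointment"
    # 3. Single lookups go straight to a tool or API
    if "availability" in text or "open slots" in text:
        return "tool:check_calendar"
    # 4. Everything else is answered directly by the LLM
    return "llm:direct_answer"


state = ConversationState(user_id="u-123")
for msg in ["What are the clinic hours?", "Reschedule my appointment", "Actually, make it next Friday"]:
    print(msg, "->", route(msg, state))
```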

Grounding Responses with Knowledge (RAG)

To ensure accurate, up-to-date answers, conversational AI often uses Retrieval-Augmented Generation (RAG).

For example, if you ask, “What documents do I need for a new appointment?” the AI retrieves the latest clinic policies or internal documentation and uses that information to formulate a response.

Think of this as consulting a current manual or database before giving advice, rather than relying solely on memory. This makes responses more accurate and trustworthy.
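A toy version of the retrieval step is shown below. The three policy snippets are hypothetical, and documents are scored by simple word overlap purely to keep the sketch self-contained. Production RAG systems usually rank passages with embedding-based vector search instead.

```python
import re

# Hypothetical knowledge base: document id -> approved policy text
DOCS = {
    "new-patient-policy": "New patients must bring a photo ID and insurance card to their first appointment.",
    "reschedule-policy": "Appointments can be rescheduled up to 24 hours in advance without a fee.",
    "clinic-hours": "The clinic is open Monday to Friday from 8 AM to 6 PM.",
}

STOPWORDS = {"a", "an", "the", "to", "do", "i", "for", "what", "is", "my", "can", "be", "and", "of", "in"}


def tokenize(text):
    # Lowercase word set without common filler words
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def retrieve(query, docs, k=1):
    """Return up to k document ids ranked by word overlap with the query."""
    q = tokenize(query)
    scored = sorted(
        ((len(q & tokenize(body)), doc_id) for doc_id, body in docs.items()),
        reverse=True,
    )
    # Drop documents that share no words with the query
    return [doc_id for score, doc_id in scored[:k] if score > 0]


for doc_id in retrieve("What documents do I need for a new appointment?", DOCS):
    print(doc_id, "->", DOCS[doc_id])
```

The retrieved text is then placed into the model's prompt so the answer is grounded in the approved policy rather than in the model's memory.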

Before responding, the AI receives a carefully structured prompt that contains:

- Its role and persona, such as “You are a friendly clinic assistant.”

- Conversation history and summaries of prior interactions.

- Knowledge retrieved from documents or databases via RAG.

- User-specific information, like past appointments or preferences.

- Business rules and safety instructions.

- The latest user message.
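Assembling that structured prompt might look like the following sketch. The `role`/`content` message shape mirrors the format many chat-style model APIs accept, but the `build_prompt` function, its parameters, and the four-turn history cap are assumptions for illustration. Older turns are expected to be covered by the summary.

```python
from typing import Dict, List


def build_prompt(
    persona: str,
    summary: str,
    history: List[Dict[str, str]],
    retrieved: List[str],
    user_profile: Dict[str, str],
    rules: List[str],
    latest: str,
    max_turns: int = 4,
) -> List[Dict[str, str]]:
    """Assemble role, rules, knowledge, user info, history, and the latest message."""
    system_parts = [
        persona,
        "Business and safety rules:\n" + "\n".join(f"- {r}" for r in rules),
        "Relevant knowledge:\n" + "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(retrieved)),
        "User information:\n" + "\n".join(f"{k}: {v}" for k, v in user_profile.items()),
    ]
    if summary:
        system_parts.append("Summary of earlier conversation:\n" + summary)
    messages = [{"role": "system", "content": "\n\n".join(system_parts)}]
    # Only the most recent turns go in verbatim; older ones live in the summary
    messages.extend(history[-max_turns:] if max_turns > 0 else [])
    messages.append({"role": "user", "content": latest})
    return messages


prompt = build_prompt(
    persona="You are a friendly clinic assistant.",
    summary="User has a cleaning booked for Tuesday at 9 AM.",
    history=[{"role": "user", "content": "Hi"}, {"role": "assistant", "content": "Hello! How can I help?"}],
    retrieved=["Appointments can be rescheduled up to 24 hours in advance without a fee."],
    user_profile={"name": "Sam", "next_appointment": "Tue 09:00"},
    rules=["Never share other patients' information."],
    latest="Can I reschedule my appointment to next Thursday?",
)
print(prompt[0]["content"])
```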

For example, if you ask, “Can I reschedule my appointment to next Thursday?” the AI sees your past booking, current availability, and scheduling rules. It then crafts a response that is accurate, context-aware, and policy-compliant.

This is similar to a chef following a recipe with all ingredients and instructions in front of them, ensuring the dish is perfect.

Taking Actions with Tools and Workflows

Conversational AI can perform real-world actions by connecting to external systems and following structured workflows.

For example, when rescheduling an appointment, the AI generates a request to check available slots. The system validates permissions and constraints, queries the calendar, and updates your booking. A workflow might involve greeting the user, collecting information, verifying identity, performing the action, summarizing results, and offering next steps.

This is like an assistant who not only understands your request but also executes it correctly while following rules and confirming the results.
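The rescheduling workflow described above can be sketched as explicit, ordered steps with checks that run before any data changes. The in-memory `Calendar` and the slot labels are hypothetical stand-ins for a real scheduling system.

```python
from dataclasses import dataclass, field
from typing import Dict, Set, Tuple


@dataclass
class Calendar:
    """Hypothetical in-memory stand-in for a clinic scheduling system."""
    free_slots: Set[str] = field(default_factory=set)
    bookings: Dict[str, str] = field(default_factory=dict)  # user_id -> booked slot


def reschedule(calendar: Calendar, user_id: str, identity_verified: bool, new_slot: str) -> Tuple[bool, str]:
    """Return (success, message) for the assistant to relay to the user."""
    # 1. Verify identity before reading or changing any booking
    if not identity_verified:
        return False, "I need to verify your identity first."
    # 2. Validate constraints: an existing booking and a free target slot
    old_slot = calendar.bookings.get(user_id)
    if old_slot is None:
        return False, "I couldn't find an existing appointment to move."
    if new_slot not in calendar.free_slots:
        return False, f"{new_slot} isn't available. Would another time work?"
    # 3. Perform the action: take the new slot and release the old one
    calendar.free_slots.remove(new_slot)
    calendar.free_slots.add(old_slot)
    calendar.bookings[user_id] = new_slot
    # 4. Summarize the result and offer a next step
    return True, f"Done! Your appointment moved from {old_slot} to {new_slot}. Anything else?"


cal = Calendar(free_slots={"Fri 10:00", "Fri 14:00"}, bookings={"u-123": "Tue 09:00"})
print(reschedule(cal, "u-123", identity_verified=True, new_slot="Fri 10:00"))
```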

Generating and Delivering Responses

Once the AI determines what to say, it delivers the response in the appropriate format:

- Text: The response is formatted, sensitive information is masked, and the message is sent.

- Voice: Text is converted into natural-sounding audio using Text-to-Speech (TTS). The system manages tone, style, speed, and interruptions so users can speak naturally while the AI responds.

For instance, if you say, “Change my appointment to Friday,” the AI might reply, “Sure! Your appointment is now Friday at 10 AM,” spoken in a clear, natural voice.

Monitoring, Testing, and Continuous Improvement

Modern conversational AI systems improve through structured observation, not passive learning. Every interaction is logged end-to-end: user inputs, ASR outputs, LLM prompts and completions, retrieved knowledge, tool calls, and final responses. These logs feed continuous monitoring across three dimensions:

- Quality metrics: Task completion, error and fallback rates, hallucination incidents, and user satisfaction (CSAT)

- Behavioral metrics: Escalation to human agents, retries, and abandonment or drop-off points

- Performance metrics: Latency, throughput, and infrastructure cost

Latency is treated as a core quality signal because it directly shapes how responsive the assistant feels. Teams monitor both end-to-end latency (user input to response playback/rendering) and component-level latency across ASR, orchestration/LLM reasoning, retrieval, tool calls, and TTS. For voice interactions in particular, sustained delays beyond roughly one second lead to interruptions, repeated inputs, or abandonment, even when answers are correct.

Scenario-based testing and regression suites ensure the assistant follows policies, maintains tone, and behaves correctly across edge cases without introducing latency regressions. When metrics drift, such as higher p95 latency after a model update or slower retrieval after a knowledge-base expansion, teams can quickly pinpoint the bottleneck and adjust prompts, workflows, models, or infrastructure. This creates a continuous feedback loop that improves reliability and responsiveness over time.
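Computing per-stage and end-to-end p95 latency from turn logs is straightforward. The sketch below uses the nearest-rank percentile. The log format, the stage names, and the 1,000 ms voice budget (taken from the roughly-one-second guideline above) are assumptions. Summing stage times also assumes stages run sequentially, which overstates end-to-end latency when streaming overlaps them.

```python
import math
from collections import defaultdict
from typing import Dict, List


def percentile(values: List[float], p: float) -> float:
    """Nearest-rank percentile, for 0 < p <= 100."""
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def latency_report(turn_logs: List[Dict[str, float]], p: float = 95) -> Dict[str, float]:
    """turn_logs: one dict per turn mapping stage name -> latency in ms."""
    by_stage = defaultdict(list)
    for turn in turn_logs:
        for stage, ms in turn.items():
            by_stage[stage].append(ms)
        # Assumes stages run one after another (no streaming overlap)
        by_stage["end_to_end"].append(sum(turn.values()))
    return {stage: percentile(vals, p) for stage, vals in by_stage.items()}


VOICE_BUDGET_MS = 1000  # assumption based on the "roughly one second" guideline

logs = [
    {"asr": 200, "llm": 400, "tts": 150},
    {"asr": 220, "llm": 900, "tts": 160},
    {"asr": 180, "llm": 380, "tts": 140},
]
report = latency_report(logs)
if report["end_to_end"] > VOICE_BUDGET_MS:
    slowest = max((s for s in report if s != "end_to_end"), key=report.get)
    print(f"p95 end-to-end {report['end_to_end']} ms exceeds budget; slowest stage: {slowest}")
```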

Optimizing for Cost and Speed

Operating conversational AI efficiently at scale requires optimizing speed, quality, and cost together, rather than in isolation. Systems are designed around a practical latency budget that keeps interactions responsive while controlling compute spend.

Common optimization strategies include:

Model routing

- Simple, well-understood queries are handled by smaller, faster models

- Complex or high-stakes requests are routed to larger, more capable models

- Distillation and quantization are applied where possible to reduce inference time and cost

Context and retrieval efficiency

- Long conversations are summarized instead of passing full histories into every turn

- RAG retrieval is tightened (fewer documents, shorter passages, faster indexes) to reduce prompt size and response time

- Prompts are kept concise to avoid unnecessary attention and compute overhead

Pipeline and experience optimization

- Streaming ASR, generation, and TTS so users see or hear partial responses while processing continues

- Overlapping stages, for example starting LLM reasoning on partial ASR results

- Caching responses to popular questions to eliminate repeated inference entirely

For example, a question like “What time does the clinic open?” can be answered instantly using a small model or a cached response. In contrast, rescheduling an appointment, which involves checking availability, applying rules, and confirming the change, may invoke a larger model and multiple tools, accepting slightly higher latency in exchange for correctness and reliability.

By continuously measuring how each optimization impacts latency, answer quality, and cost, teams can make informed trade-offs and keep the assistant fast, trustworthy, and economical as usage scales.
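Model routing and response caching can be combined in a few lines. This sketch is illustrative only: the model identifiers, the keyword list that marks a request as an action, and `call_model` are hypothetical placeholders for real inference calls. Only generic, non-personal answers are cached, since cached replies are shared across users.

```python
SMALL_MODEL = "small-fast-model"     # hypothetical model identifiers
LARGE_MODEL = "large-capable-model"
ACTION_WORDS = {"reschedule", "cancel", "book", "refund", "change"}

_cache = {}  # normalized question -> reply


def choose_model(message: str) -> str:
    words = set(message.lower().replace("?", "").split())
    # Requests that trigger actions or policies go to the larger model
    return LARGE_MODEL if words & ACTION_WORDS else SMALL_MODEL


def call_model(model: str, message: str) -> str:
    # Stand-in for a real inference call
    return f"[{model}] answer to: {message}"


def answer(message: str):
    """Return (reply, source), where source is 'cache' or the model used."""
    key = " ".join(message.lower().split())
    if key in _cache:
        return _cache[key], "cache"  # no inference needed
    model = choose_model(message)
    reply = call_model(model, message)
    if model == SMALL_MODEL:
        _cache[key] = reply  # cache generic FAQ answers only, never personal actions
    return reply, model


print(answer("What time does the clinic open?"))   # routed to the small model
print(answer("what time does the clinic open?"))   # served from the cache
print(answer("Can I reschedule my appointment?"))  # routed to the large model
```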

Conversational AI in Action: A Working Example

Imagine calling a clinic to move your dentist appointment.

The AI first converts your voice to text using ASR. The orchestration layer retrieves your past appointments and workflow state. Knowledge about scheduling rules is fetched through RAG. The AI determines the next steps and interacts with the calendar system to find available slots. It confirms the new appointment, and TTS reads the confirmation aloud. If the request becomes complex, the conversation is escalated to a human agent with full context preserved.

This seamless experience works because orchestration, LLMs, knowledge retrieval, workflows, and voice technologies all function together like a well-coordinated team.

Conversational AI combines conversation memory, intelligent decision-making, accurate knowledge retrieval, and actionable workflows into a single, human-like system. Through monitoring and continuous improvement, it becomes smarter, faster, and more reliable over time.

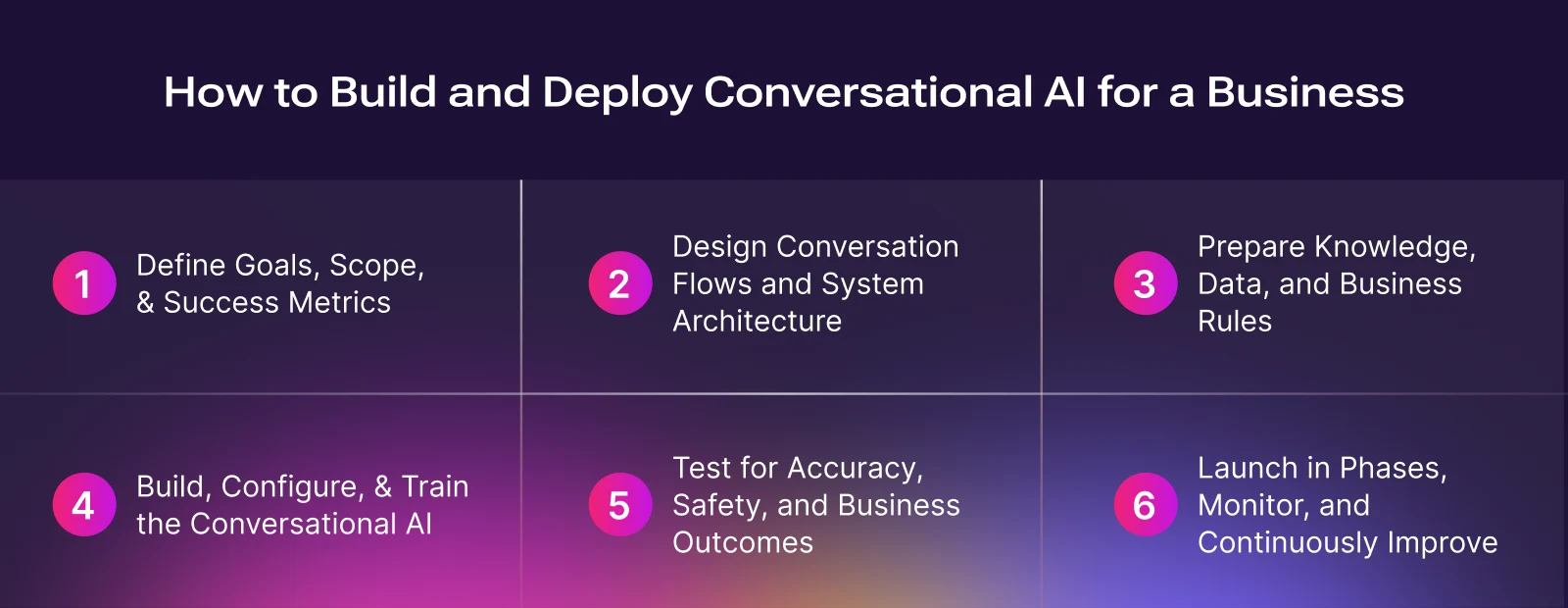

How to Build and Deploy Conversational AI for a Business


Building conversational AI for a business is not just about making a chatbot that can answer questions. In large organizations, conversational AI is rolled out in planned phases, across people, processes, and technology, with a strong focus on accuracy, safety, and measurable business outcomes.

Businesses typically use conversational AI in areas such as customer support (answering FAQs, tracking orders), sales and marketing (qualifying leads, helping users choose plans), internal operations (HR or IT helpdesks), and industry-specific use cases like healthcare scheduling or banking inquiries. Each of these has different risks, rules, and technical needs. For example, an e-commerce company may use chatbots for order tracking and returns, while a bank may restrict its bot to balance checks and card controls due to regulatory requirements.

The following is a practical six-step guide that reflects how conversational AI is actually built and deployed in modern businesses.

Step 1: Define Goals, Scope, and Success Metrics

Before writing any code or choosing tools, the first step is to clearly define why you are building conversational AI and what problem it should solve.

Instead of trying to automate everything at once, successful teams start with narrow, repeatable, high-volume tasks. Examples include “Where is my order?”, “Reset my password”, or “Book an appointment.” These questions are common, follow clear rules, and are easy to measure.

At this stage, teams also define:

- Success metrics such as resolution rate, containment rate, response time, customer satisfaction (CSAT), or revenue impact.

- Guardrails, meaning situations where the AI must hand off to a human agent, such as complaints, compliance-related queries, or high-value customers.

Example

A telecom company may start with a goal like “Resolve 60% of billing-related questions without human agents within three months,” while routing cancellation requests directly to live support.

This step usually involves business teams, operations, and engineering working together, ensuring the AI supports real business goals rather than acting as a standalone experiment.
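Checking a goal like the telecom example can be sketched as a simple rate calculation. Here "containment" is assumed to mean a conversation resolved without escalation to a human. Definitions vary between teams, and the `Conversation` record is a hypothetical log schema.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Conversation:
    topic: str
    resolved: bool
    escalated: bool


def containment_rate(conversations: List[Conversation], topic: str) -> float:
    """Share of conversations on a topic resolved without a human handoff."""
    relevant = [c for c in conversations if c.topic == topic]
    if not relevant:
        return 0.0
    contained = sum(1 for c in relevant if c.resolved and not c.escalated)
    return contained / len(relevant)


GOAL = 0.60  # e.g. "resolve 60% of billing questions without human agents"

logs = (
    [Conversation("billing", resolved=True, escalated=False)] * 7
    + [Conversation("billing", resolved=False, escalated=True)] * 3
    # Cancellations are routed to live support by design
    + [Conversation("cancellation", resolved=False, escalated=True)] * 2
)
rate = containment_rate(logs, "billing")
print(f"billing containment: {rate:.0%} (goal met: {rate >= GOAL})")
```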

Step 2: Design Conversation Flows and System Architecture

Once the goals are clear, the next step is to design how the AI will interact with users and how it will work behind the scenes.

On the user side, this includes designing conversation flows, i.e., what happens when a user asks a question, how the AI responds, and what options are shown next. These flows should be simple and predictable, similar to following a clear path in a well-designed app.

Example

If a user asks, “Where is my order?”, the AI may first ask for an order ID, then fetch the status, and finally offer next steps like “Track shipment” or “Talk to support.”

On the technical side, teams design the architecture, which often includes:

- A language model (LLM or NLU engine)

- Channels such as website chat, mobile apps, WhatsApp, email, or voice/IVR

- Integrations with backend systems like CRMs, order databases, billing systems, or identity systems

- Hosting decisions, such as cloud-based platforms or private deployments for regulated industries

For voice-based AI, most businesses use a cascaded setup: speech-to-text (ASR), followed by the LLM, and then text-to-speech (TTS).

Example

A call-center voice bot converts a customer’s spoken question into text, checks order status using backend APIs, and reads the result aloud in a natural voice.
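A cascaded voice turn can be sketched as three pluggable stages, each timed so latency can be attributed later. `fake_asr`, `fake_llm`, and `fake_tts` are hypothetical stand-ins (they simply decode, pattern-match, and encode text) so the sketch runs without real speech or model services.

```python
import time
from typing import Callable, Dict, Tuple


def run_voice_turn(
    audio: bytes,
    asr: Callable[[bytes], str],
    llm: Callable[[str], str],
    tts: Callable[[str], bytes],
) -> Tuple[bytes, str, str, Dict[str, float]]:
    """Run one cascaded turn (ASR -> LLM -> TTS), timing each stage in ms."""
    timings = {}

    start = time.perf_counter()
    transcript = asr(audio)  # speech -> text
    timings["asr"] = (time.perf_counter() - start) * 1000

    start = time.perf_counter()
    reply = llm(transcript)  # text -> reply text (a real LLM may call tools here)
    timings["llm"] = (time.perf_counter() - start) * 1000

    start = time.perf_counter()
    speech = tts(reply)  # reply text -> audio
    timings["tts"] = (time.perf_counter() - start) * 1000

    return speech, transcript, reply, timings


# Hypothetical stand-ins so the sketch runs without real engines
def fake_asr(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_llm(text: str) -> str:
    if "order" in text.lower():
        return "Your order is out for delivery and should arrive in 10 minutes."
    return "Could you tell me a bit more?"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


speech, transcript, reply, timings = run_voice_turn(b"Where is my order?", fake_asr, fake_llm, fake_tts)
print(transcript, "->", reply)
```

Keeping each stage behind a plain callable makes it easy to swap ASR, LLM, or TTS providers without touching the orchestration code.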

Many teams create a small prototype or proof of concept at this stage to validate the design before building the full system.

Step 3: Prepare Knowledge, Data, and Business Rules

Conversational AI learns and responds based on the information it is given. In businesses, this is less about raw training data and more about accurate knowledge and clear rules.

Teams collect existing materials such as:

- FAQs and help center articles

- Policy documents and product guides

- Internal standard operating procedures (SOPs) used by human agents

This information is cleaned, updated, and structured so the AI can reliably use it.

Example

A returns policy that spans multiple documents is rewritten into a clear step-by-step flow the AI can follow without guessing.

Critical processes such as refunds, authentication, or compliance-related answers are identified as “golden flows” and handled with extra care.

Rather than letting the AI guess answers, modern systems use retrieval-based approaches, where the AI looks up relevant information from approved documents before responding.

For example, when a customer asks about refund eligibility, the AI retrieves the exact policy section instead of generating a generic response. This matters most for “golden flows” such as refunds.

Step 4: Build, Configure, and Train the Conversational AI

With goals, design, and knowledge in place, the AI can now be built.

Teams may choose different approaches depending on complexity:

- No-code or low-code platforms for simple workflows

- Pre-trained models accessed through APIs for faster deployment

- Custom frameworks for advanced control and deep integrations

Most teams start by building a Minimum Viable Product (MVP).

Example

An MVP for a travel company might only handle booking confirmations and flight status before expanding to cancellations and upgrades.

At this stage, teams also:

- Configure prompts and system instructions to guide tone and behavior

- Define structured workflows instead of relying on one large prompt

- Set up tool usage so the AI can fetch order details, update records, or trigger actions safely.
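Safe tool usage often means the orchestration layer validates any tool call the model proposes before executing it. The registry, tool names, and confirmation rule below are hypothetical, a sketch of the pattern rather than any framework's API: unknown tools and malformed arguments are rejected, and tools that write data require explicit user confirmation.

```python
from typing import Any, Callable, Dict, Iterable

# Hypothetical registry: tool name -> function, required args, and whether it writes data
TOOLS: Dict[str, Dict[str, Any]] = {}


def tool(name: str, required_args: Iterable[str], writes: bool = False) -> Callable:
    def register(fn: Callable) -> Callable:
        TOOLS[name] = {"fn": fn, "required": set(required_args), "writes": writes}
        return fn
    return register


@tool("get_order_status", ["order_id"])
def get_order_status(order_id: str) -> dict:
    return {"order_id": order_id, "status": "out_for_delivery"}  # stubbed backend


@tool("update_address", ["order_id", "address"], writes=True)
def update_address(order_id: str, address: str) -> dict:
    return {"order_id": order_id, "address": address, "updated": True}  # stubbed backend


def execute_tool_call(name: str, args: dict, user_confirmed: bool = False) -> dict:
    """Validate a model-proposed tool call before running it."""
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    spec = TOOLS[name]
    missing = spec["required"] - set(args)
    unexpected = set(args) - spec["required"]
    if missing or unexpected:
        raise ValueError(
            f"bad arguments for {name}: missing={sorted(missing)}, unexpected={sorted(unexpected)}"
        )
    # Actions that change records wait for the user to confirm
    if spec["writes"] and not user_confirmed:
        return {"needs_confirmation": True, "tool": name, "args": args}
    return spec["fn"](**args)


print(execute_tool_call("get_order_status", {"order_id": "A1001"}))
print(execute_tool_call("update_address", {"order_id": "A1001", "address": "12 Main St"}))
```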

Step 5: Test for Accuracy, Safety, and Business Outcomes

Before launching, the AI must be tested thoroughly. This goes beyond checking if answers are correct. Teams design test scenarios using realistic personas and goals.

Example

A tester pretends to be an angry customer demanding a refund after the return window has closed, checking whether the AI follows policy and escalates correctly.

Automated tools simulate conversations to see whether the AI:

- Solves the task successfully

- Follows business rules and policies

- Uses the correct tone and avoids risky responses

- Escalates to humans when required

Regression testing is also introduced. After updating refund rules, teams rerun old test conversations to ensure unrelated flows, like order tracking, still work correctly.
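A scenario-based regression suite can be sketched as a table of conversations with expected outcomes. The `assistant` function here is a hypothetical rule-based stand-in for the deployed system, and the 30-day return window is an invented policy. Because LLM wording varies between runs, checks like these usually assert on the chosen action or tool call rather than on exact reply text.

```python
RETURN_WINDOW_DAYS = 30  # hypothetical policy


def assistant(message: str, days_since_purchase: int) -> str:
    """Hypothetical stand-in for the deployed assistant; returns the action it takes."""
    text = message.lower()
    if "refund" in text:
        if days_since_purchase <= RETURN_WINDOW_DAYS:
            return "start_refund"
        return "escalate_to_human"  # policy exception: a human decides
    if "where is my order" in text:
        return "lookup_order_status"
    return "ask_clarifying_question"


# (scenario name, user message, days since purchase, expected action)
SCENARIOS = [
    ("angry customer, window closed", "This is ridiculous, I want a REFUND now!", 45, "escalate_to_human"),
    ("polite refund within window", "Could I get a refund, please?", 10, "start_refund"),
    ("order tracking still works", "Where is my order?", 0, "lookup_order_status"),
]


def run_regression(scenarios):
    """Rerun every scenario and collect (name, expected, actual) for mismatches."""
    failures = []
    for name, message, days, expected in scenarios:
        actual = assistant(message, days)
        if actual != expected:
            failures.append((name, expected, actual))
    return failures


failures = run_regression(SCENARIOS)
print("all scenarios passed" if not failures else failures)
```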

Step 6: Launch in Phases, Monitor, and Continuously Improve

Launching conversational AI is not a one-time event. Most businesses roll it out in phases:

- Internal testing with employees

- Limited release to a small percentage of users

- Gradual expansion across channels, regions, or customer segments

Example

A retailer may first deploy the bot only on its website chat before adding WhatsApp and voice support.
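Limited releases are commonly implemented by hashing a stable user ID into a bucket, so each user consistently gets the same experience and raising the percentage only adds users. This is a sketch of that pattern. The salt string and the 0.01% bucket granularity are assumptions.

```python
import hashlib


def rollout_bucket(user_id: str, salt: str = "assistant-v1") -> int:
    """Map a user ID to a stable bucket in 0..9999 (0.01% granularity)."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest[:8], 16) % 10_000


def in_rollout(user_id: str, percent: float, salt: str = "assistant-v1") -> bool:
    # Users below the threshold see the assistant; raising percent only adds users
    return rollout_bucket(user_id, salt) < percent * 100


users = [f"user-{i}" for i in range(10_000)]
enabled = sum(in_rollout(u, 5) for u in users)
print(f"{enabled} of {len(users)} users in the 5% release")
```

Changing the salt reshuffles buckets, which is useful when starting an unrelated experiment so the same users are not always first in line.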

Once live, the AI is continuously monitored. Teams track:

- Resolution and escalation rates

- Customer satisfaction and feedback

- Cost and response latency

- Failure patterns such as missing knowledge or broken integrations

- Safety and compliance incidents

If many conversations fail at the same step, teams review transcripts, update knowledge, or fix integrations in the next iteration cycle. Building conversational AI for a business is like onboarding a new team member at scale. You define its role, train it with approved knowledge, give it clear rules, test it carefully, and supervise its performance continuously.
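Finding the step where conversations most often fail can start with a simple count over conversation logs. The `outcome` and `last_step` fields are a hypothetical log schema used for illustration.

```python
from collections import Counter


def top_failure_steps(conversations, n=3):
    """Return the workflow steps where unresolved conversations most often ended."""
    failed_steps = [c["last_step"] for c in conversations if c["outcome"] != "resolved"]
    return Counter(failed_steps).most_common(n)


logs = [
    {"outcome": "resolved", "last_step": "confirm"},
    {"outcome": "abandoned", "last_step": "verify_identity"},
    {"outcome": "escalated", "last_step": "verify_identity"},
    {"outcome": "abandoned", "last_step": "fetch_order"},
    {"outcome": "abandoned", "last_step": "verify_identity"},
]
print(top_failure_steps(logs))
```

A spike at one step, such as identity verification here, points teams at the transcripts, knowledge, or integration worth reviewing first.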

When done correctly, conversational AI becomes a dependable business tool that handles routine work efficiently, supports customers consistently, and frees human teams to focus on complex, high-value tasks.

Why Is Conversational AI Important in 2026?

Modern conversational AI applications enable businesses to automate high-volume requests while maintaining quality and consistency. With rapidly advancing conversational AI capabilities, companies now face a strategic choice: integrate AI-driven conversations into their operating model or continue relying on legacy processes that can create a measurable disadvantage in speed, cost efficiency, and overall customer experience.

ROI: Clear Cost Savings and Better Returns

With automation handling routine customer questions, companies can reduce support team workload and lower operating costs. Gartner estimates that conversational AI deployments within contact centers will reduce worldwide agent labor costs by $80 billion in 2026.

AI also increases the value of existing customers. Automated reminders and follow-up messages lead to 19% more repeat purchases and a 12% increase in average order value.

The projected market growth from $17.3B in 2025 to over $106B by 2035 signals that companies are investing heavily in the expectation that conversational AI will deliver solid, predictable ROI.
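Taking the forecast at face value, the two figures imply a compound annual growth rate (CAGR) of roughly 20% over the ten years from 2025 to 2035. This is simple arithmetic on the cited numbers, not an independent estimate:

$$
\text{CAGR} = \left(\frac{106}{17.3}\right)^{1/10} - 1 \approx 6.13^{0.1} - 1 \approx 0.199
$$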

Productivity: Doing More With the Same Team

When you add conversational AI to your workflows, it takes over repetitive, high-volume activities, so your team can redirect its time toward strategic conversations and higher-value responsibilities, guided by a clear conversational AI strategy.

Organizations that adopt AI-assisted tools report up to 30% faster resolution times because conversational AI agents deliver instant suggestions, contextual insights, and automated responses.

Customer Satisfaction: Faster Responses and Better Experiences

Customers expect instant replies and the ability to respond to updates directly. In fact, 28% of consumers get frustrated when they can’t reply to a message from a business.

Conversational AI solves this by offering immediate, always-on support across messaging channels. Fast, accurate responses improve First Contact Resolution (FCR), which has an outsized effect: a 10% improvement in FCR can increase customer satisfaction by 15%.

Since 71% of consumers expect personalized experiences, AI helps businesses meet this demand by tailoring messages, recommendations, and support to each individual.

Growth: More Sales, Better Leads, and a Competitive Edge

Through interactive messaging, guided product recommendations, and smarter lead qualification via conversational AI, companies are driving more conversions and higher engagement.

- B2B teams using AI for lead qualification see 27% more booked demos.

- In-chat buying experiences make it easier for customers to purchase immediately.

What Are the Types of Conversational AI?

Conversational AI systems are best understood by looking at two dimensions:

- How users interact with them (text, voice, or telephony), and

- The role the AI plays (reactive assistant vs. in-context collaborator).

In practice, most conversational AI implementations fall into three primary interaction types: AI chatbots, voice assistants, and interactive voice assistants (IVAs). Within these interaction types, AI co‑pilots represent a specialized role: a workflow‑embedded assistant that actively helps users complete complex tasks inside specific tools or domains.

Organizations often deploy multiple types together to cover different customer and employee touchpoints. Below is a clear breakdown of each type, what it does, and where it fits.

AI Chatbots

AI chatbots are conversational software agents that interact with users primarily through text-based interfaces such as websites, mobile apps, and messaging platforms.

Beyond answering questions, AI chatbots are frequently integrated with backend systems such as CRMs, ticketing tools, and e‑commerce platforms, so they can take action rather than just converse.

Typical capabilities

- Answer FAQs and provide information (policies, product details, order status)

- Guide users through structured workflows (account opening, lead capture, returns)

- Execute tasks via APIs (password resets, bookings, updating records)

- Deliver personalized recommendations and cross‑sell or upsell products
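Executing tasks via APIs usually means the language model proposes a structured action and application code validates and runs it. A minimal sketch of that dispatch step, assuming a hypothetical `{"name": ..., "arguments": {...}}` tool-call shape; `reset_password` and `book_appointment` are placeholders for real CRM or booking integrations.

```python
from typing import Callable


# Hypothetical backend actions; a real bot would call CRM, ticketing, or e-commerce APIs here.
def reset_password(args: dict) -> str:
    return f"Password reset link sent to {args['email']}."


def book_appointment(args: dict) -> str:
    return f"Booked for {args['date']}."


TOOLS: dict[str, Callable[[dict], str]] = {
    "reset_password": reset_password,
    "book_appointment": book_appointment,
}
REQUIRED_ARGS: dict[str, set[str]] = {
    "reset_password": {"email"},
    "book_appointment": {"date"},
}


def execute(tool_call: dict) -> str:
    """Validate and run a tool call proposed by the language model."""
    name = tool_call.get("name")
    args = tool_call.get("arguments", {})
    if name not in TOOLS:
        return "Sorry, I can't do that yet. Let me connect you with an agent."
    missing = REQUIRED_ARGS[name] - set(args)
    if missing:
        # Ask a follow-up question instead of calling the API with incomplete data.
        return f"Could you share your {', '.join(sorted(missing))}?"
    return TOOLS[name](args)
```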

Examples

- Website support bots: E‑commerce platforms like Amazon, Flipkart, and Myntra use chatbots to handle order queries, refunds, and delivery issues. Zalando uses chatbots for instant post‑purchase order tracking, freeing human agents for complex cases. Platforms like HubSpot and Shopify embed chatbots on pricing or support pages to pre‑qualify leads and resolve basic issues.

- Messaging and social bots: Banks, telecoms, and airlines deploy WhatsApp or Facebook Messenger bots for balance checks, bill payments, or boarding pass retrieval; global airlines such as KLM and major telecom providers use them widely.

- Enterprise chat assistants: Customer service platforms like Freshworks, Zendesk, Salesforce, and Intercom embed chatbots to auto‑respond to tickets and assist agents with suggested replies.

Voice Assistants (General‑Purpose)

Voice assistants are general‑purpose AI assistants that interact primarily through spoken language. They are typically embedded in consumer devices such as smartphones, smart speakers, wearables, cars, and operating systems. Users invoke them explicitly, often with a wake word, to perform everyday tasks.

These assistants are optimized for breadth rather than depth: they handle a wide range of routine tasks but usually do not operate deeply inside a specific professional workflow.

Key characteristics

- Hands‑free interaction using wake words ("Hey Siri", "Alexa", "Hey Google")

- Broad task coverage across many domains and apps

- Deep integration with devices and services (contacts, calendars, smart home, media)

- Fast, multilingual speech recognition and synthesis for real‑time responses

Examples

- Consumer voice assistants: Amazon Alexa controls smart homes, plays media, and answers general questions. Google Assistant supports navigation, reminders, and search across phones, cars, and displays. Apple’s Siri handles calls, messages, reminders, and quick queries across Apple devices.

- Automotive voice assistants: In‑car assistants from BMW, Mercedes‑Benz, and Hyundai allow drivers to control navigation, climate, and media using voice, reducing distraction while driving.

AI Co‑Pilots (Workflow‑Embedded Assistants)

AI co‑pilots are a specialized type of AI assistant designed to work inside specific applications or domains. Unlike general‑purpose voice assistants, copilots are context‑aware collaborators that actively help users perform complex tasks as they work.

Rather than waiting for simple commands, copilots operate alongside the user, suggesting next steps, generating content, summarizing information, or explaining decisions within the context of the tool being used.

Defining traits

- Embedded directly into a specific app or workflow (IDE, office suite, CRM, analytics tool)

- Narrower scope, but much deeper domain understanding

- Collaborative interaction style, often proactive

- Focused on improving productivity, quality, and decision‑making

Examples

- Productivity copilots: Microsoft 365 Copilot assists with drafting emails, creating presentations, summarizing documents, and analyzing data directly inside Office tools.

- Developer copilots: GitHub Copilot generates code, explains errors, and suggests improvements inside IDEs like VS Code, helping developers write and understand code faster.

In practice, copilots may use text or voice interfaces, but their defining feature is where they live and how they help, not the channel they use.

Interactive Voice Assistants (IVAs)

Interactive Voice Assistants (IVAs), sometimes called intelligent or virtual voice agents, represent the modern evolution of traditional IVR systems. Unlike legacy IVRs that force callers through rigid menus ("Press 1 for billing"), IVAs enable natural, free‑form spoken conversations over phone and contact‑center channels.

Modern IVAs are speech‑first systems powered by automatic speech recognition (ASR) and natural language understanding. While they may still support Dual-Tone Multi-Frequency (DTMF) keypad input for legacy compatibility, their primary strength lies in conversational, voice‑driven self‑service at scale.

Core traits

- Deployed on phones, contact center platforms, or voice channels in apps

- Allow callers to speak naturally ("I want to change my flight")

- Authenticate users, retrieve account data, and complete end‑to‑end self‑service tasks

- Seamlessly hand off context to human agents when escalation is needed

- Support 24/7, multilingual operations for global audiences
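The difference between keypad menus and natural speech, and what a context-preserving handoff carries, can be sketched in a few lines. A minimal sketch, assuming hypothetical menu options and phrase lists; simple phrase matching stands in for the NLU model a production IVA would run on the ASR transcript.

```python
from dataclasses import dataclass, field
from typing import Optional

# Legacy keypad options, kept for callers who prefer pressing keys.
DTMF_MENU = {"1": "billing", "2": "change_flight", "0": "agent"}

# Hypothetical phrase lists standing in for a real NLU model.
INTENT_PHRASES = {
    "change_flight": ("change my flight", "rebook", "different flight"),
    "billing": ("bill", "charge", "invoice"),
    "agent": ("human", "agent", "representative"),
}


@dataclass
class CallState:
    caller_id: str
    authenticated: bool = False
    transcript: list[str] = field(default_factory=list)


def route(state: CallState, dtmf: Optional[str] = None, speech: Optional[str] = None) -> str:
    """Resolve the caller's intent from a keypad press or from free-form speech."""
    if dtmf is not None:
        return DTMF_MENU.get(dtmf, "unknown")
    utterance = speech or ""
    state.transcript.append(utterance)
    text = utterance.lower()
    for intent, phrases in INTENT_PHRASES.items():
        if any(p in text for p in phrases):
            return intent
    return "unknown"


def handoff_payload(state: CallState, intent: str) -> dict:
    """Context passed to a human agent so the caller does not have to repeat themselves."""
    return {"caller_id": state.caller_id, "authenticated": state.authenticated,
            "intent": intent, "transcript": list(state.transcript)}
```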

Examples

- Telecom and banking support: Banks and telecom providers use IVAs as the front line of their call centers, routing or resolving issues based on natural speech. Vendors such as NICE and Verint provide IVA platforms for these use cases.

- Healthcare, government, and utilities: Hospitals use IVAs for appointment scheduling and prescription refills, while public services and utilities deploy them for billing, outages, and service requests.

How They Differ and Where They Overlap

All conversational AI types differ in interaction channel, scope, and role.

Key Takeaway

A simple way to remember the distinction:

- Chatbots handle typed conversations on digital platforms.

- Voice assistants provide general, voice‑driven help across devices.

- Co‑pilots work alongside users inside specific tools to help them think, create, and execute better.

- IVAs live on phone lines and contact centers, automating spoken customer service at scale.

In real‑world deployments, these types often coexist. A single organization may use chatbots for digital self‑service, copilots for employee productivity, and IVAs for inbound calls. Together they can form a comprehensive conversational AI strategy across all touchpoints.

Applications of Conversational AI

Conversational AI has evolved from basic FAQ chatbots into agentic systems embedded within products and workflows. These systems maintain context, draw on long-lived memory, and take actions across real business systems to drive revenue growth, cost reduction, and improved customer experiences. Across industries, organizations are integrating conversational AI across customer service, sales, HR, financial operations, and health to automate routine tasks while enabling more proactive, context-aware engagement at scale.

Health, Wellness, and Digital Care

Conversational AI is increasingly acting as a continuous health and wellness copilot, reasoning over wearable data, behavioral patterns, and personal constraints to deliver real-time, personalized guidance. Unlike traditional health chatbots, these systems operate on longitudinal data streams and proactively intervene to improve outcomes and engagement, often augmenting human clinicians and coaches.

Key Applications

- Personalized health coaching: AI interprets biometric signals such as sleep, heart rate variability, and activity levels to deliver tailored guidance.

- Scenario-based health simulations: Users can ask “what if” questions (e.g., changing sleep schedules or training intensity) and receive predictions based on historical patterns.

- Early risk detection and triage: Conversational agents monitor trends across vitals and behavior, flagging concerning patterns and nudging users toward clinical support when needed.

- In-the-moment micro-coaching: Contextual advice is delivered based on current state, calendar, and physiological readiness.
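Early risk detection often starts from a personal baseline rather than a population norm. The following is an illustrative sketch, not a clinical method: it flags a reading (for example, nightly heart rate variability) that falls well below the user's own recent average. The seven-day minimum and the z-score threshold of 2.0 are hypothetical parameters.

```python
from statistics import mean, stdev


def flag_drop(history: list[float], today: float, z_threshold: float = 2.0, min_days: int = 7) -> bool:
    """Flag today's value if it sits more than `z_threshold` standard deviations below the personal baseline."""
    if len(history) < min_days:
        return False  # too little data for a stable baseline
    baseline, spread = mean(history), stdev(history)
    if spread == 0:
        return today < baseline
    return (baseline - today) / spread > z_threshold
```

A flag like this would only trigger a conversational nudge toward rest or clinical support; the decision about care stays with clinicians.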

Examples

- WHOOP Coach: Enables users to query months of biometric data conversationally, driving higher engagement and retention by explaining recovery, strain, and sleep trends rather than presenting static scores.

- ONVY: A precision health assistant that turns multi-device wearable data into daily conversational nudges; enterprise wellness deployments report higher adherence and sustained engagement compared to static health dashboards.

- Vora: An AI fitness coach that dynamically adapts training plans based on travel, recovery, and real-world constraints, helping users maintain consistency and measurable progress over weeks instead of months.

Customer Service

A Stanford and MIT study analyzing data from 5,179 customer support agents and three million chats shows that a generative AI assistant supporting human agents significantly improves their effectiveness. The research highlights a 13.8% increase in successfully resolved chats per hour and faster skill progression for human agents. Modern customer service AI increasingly extends beyond reactive ticket handling to proactive issue detection, sentiment-aware routing, and full-context handoffs to human agents.

Key Applications

- 24/7 automated support: Conversational AI handles high-volume queries, reducing wait times and support load.

- Intent-based query resolution: AI identifies customer intent and resolves issues such as refunds, order status, technical troubleshooting, and account updates.

- Proactive issue detection and escalation: AI monitors interaction patterns and flags emerging problems before they escalate, handing off cases with full conversational history.

- Omnichannel support: Deployed across chat, voice, WhatsApp, and in-app channels with shared state and consistent responses.

Examples

- KLM Royal Dutch Airlines: Uses conversational AI to handle over 60% of customer inquiries, significantly reducing peak-season contact-center volume.

- AirAsia AVA: Automates flight changes, refunds, and travel information at scale, improving response times during disruptions.

- Moveworks: Customers report up to 57% of IT and HR issues resolved automatically, 96% routing accuracy, and a one-third reduction in mean time to resolution (MTTR).

- Yellow.ai: Enterprises report up to 85% instant query resolution and double-digit CSAT improvements across WhatsApp, web, and voice channels.

Marketing and Sales

AI agents automate lead qualification through always-on engagement, filtering prospects by budget, intent, and company size, resulting in over 50% more qualified leads and a 60% reduction in operational costs. During live sales calls, conversational AI provides real-time coaching by analyzing sentiment and suggesting next-best actions, driving up to a 250% increase in lead-processing efficiency.

Key Applications

- Lead qualification: AI agents screen prospects and route high-intent leads to sales teams.

- Outcome-driven recommendations: AI reasons over customer goals and constraints to propose tailored solutions.

- AI-driven outbound calling and follow-ups: Automated voice agents handle demos, reminders, and re-engagement.

- Real-time sales coaching: AI analyzes conversations to surface objections and coaching opportunities.
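Filtering by budget, intent, and company size often comes down to a transparent scoring rule that decides where each lead goes. A minimal sketch, assuming hypothetical thresholds, weights, and routing labels that a team would tune to its own funnel.

```python
from dataclasses import dataclass


@dataclass
class Lead:
    budget_usd: int
    company_size: int
    intent_signals: int  # e.g. pricing-page visits or demo requests captured in chat


def qualify(lead: Lead, min_budget: int = 10_000, min_size: int = 50) -> tuple[int, str]:
    """Score a lead from 0-100 and choose a hypothetical routing outcome."""
    score = 40 if lead.budget_usd >= min_budget else 0
    score += 30 if lead.company_size >= min_size else 0
    score += min(lead.intent_signals, 3) * 10  # capped so intent alone cannot dominate
    if score >= 70:
        return score, "route_to_sales"
    if score >= 40:
        return score, "nurture"
    return score, "disqualify"
```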

Examples

- Gong: Customers see 15–20% faster deal velocity, 12–18% higher win rates, and 25–30% lower forecast variance.

- Avoma: Helps sales teams identify coachable moments across calls, reducing manual review time and improving rep performance.

- Murf AI Voice Agents: Automates outbound calling and follow-ups, improving booking rates while reducing human effort.

Human Resources

AI systems resolve up to 70% of routine HR queries, saving HR teams an average of 20 hours per week while delivering 24/7 employee support. Recruitment automation has reduced time-to-hire by 40–70%. Modern HR assistants increasingly operate over enterprise knowledge graphs, synthesizing information across internal systems.

Key Applications

- Employee onboarding: Policy explanations, document collection, and training delivery.

- Recruitment automation: Screening and interview scheduling.

- Internal IT/HR helpdesk: Payroll, benefits, access, and compliance queries.

- Enterprise knowledge assistance: Cross-system reasoning over policies and decisions.

Examples

- Accenture’s HR Concierge: Reduces HR ticket volumes across global operations.

- IBM’s Watson-based HR advisor: Supports workforce planning and attrition forecasting.

- Glean: Enables conversational access to internal knowledge, reducing time spent searching across tools.

- Moveworks (HR workflows): Resolves over half of HR requests automatically, improving employee satisfaction.

Banking and Financial Services

Banks use conversational AI to handle up to 80% of routine inquiries, achieving 30–45% reductions in operating costs and cutting manual KYC workloads by 40%. Digital onboarding times have dropped from over 20 minutes to under four minutes, while assistants increasingly support ongoing financial guidance.

Key Applications

- Transactional assistance: Payments, balances, and account management.

- Fraud alerts and risk management: Real-time detection and resolution guidance.

- Wealth advisory and financial coaching: Personalized advice and scenario-based planning.

Examples

- Bank of America – Erica: Has handled over two billion interactions, delivering millions in cost savings.

- HSBC’s Amy: Automates product and process inquiries, reducing call-center load.

- Kasisto: Powers conversational banking assistants that improve digital engagement and self-service completion rates.

- Cleo: Provides ongoing money coaching that drives higher user retention and healthier financial behavior.

Emerging Patterns Across Industries

Across industries, conversational AI is converging on shared design patterns: long-lived memory, multi-modal inputs, and agentic execution that completes tasks rather than merely offering advice. Increasingly, these systems adopt a coaching-oriented interaction style, signaling a shift from reactive chatbots to proactive, context-aware digital collaborators.

Future of Conversational AI: Technology and Industry Trends

Conversational AI in 2026 is no longer about shipping impressive demos or standalone chatbots. The direction is clear: the industry is moving toward production-grade conversational systems that are reliable, observable, and deeply integrated into real business workflows. Model quality still matters, but it is no longer the primary differentiator. What separates effective systems from fragile ones is how they are orchestrated, tested, grounded, and operated over time.

This shift becomes clearer when viewed through two lenses: what users expect from conversational AI, and what builders must do to meet those expectations.

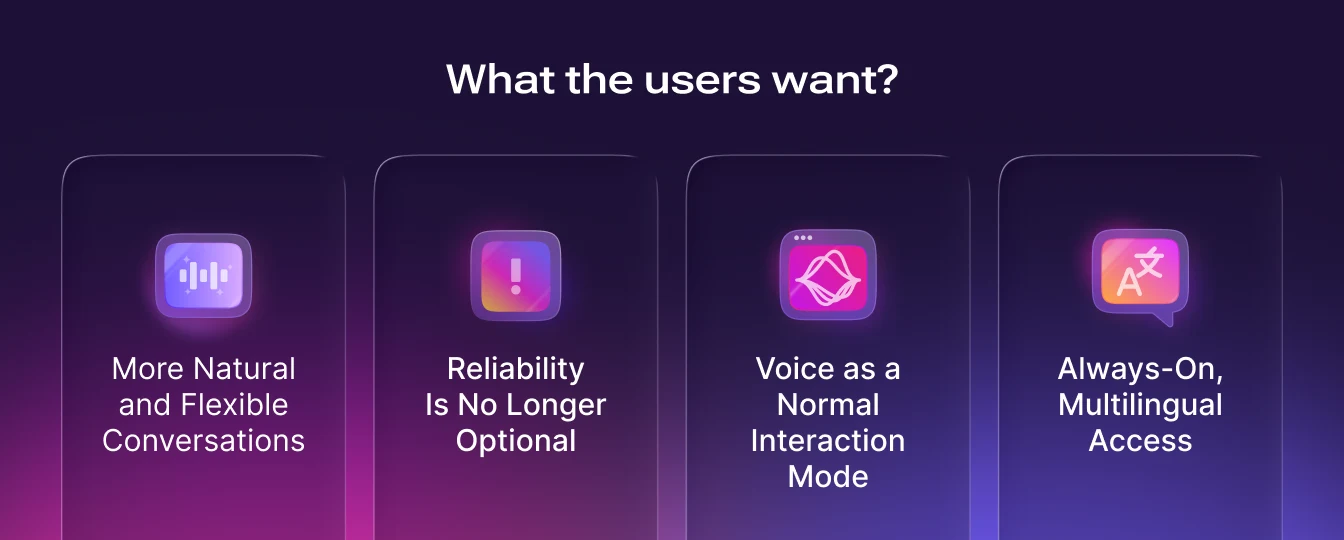

Conversational AI in 2026: User Point of View

More Natural and Flexible Conversations

From the user’s perspective, conversational AI is becoming less rigid and more forgiving. Instead of navigating static FAQ trees or narrowly defined intents, users can ask complex, multi-step, and loosely phrased questions and still receive useful responses. This enables experiences such as AI tutors and coaches, health report explainers, and product assistants embedded directly into websites, messaging apps like WhatsApp, or internal enterprise tools.

In many cases, the conversational interface itself becomes the primary product, especially in education, coaching, and guided self-service scenarios.

Reliability Is No Longer Optional

As conversational AI becomes more common, user tolerance for failure decreases. Users now expect fewer dead ends, consistent behavior when policies are involved, and clear escalation to a human when issues become complex or high-risk. An AI that sounds natural but behaves unpredictably quickly loses credibility. In 2026, reliability is not a differentiator; it is a baseline expectation.

Voice as a Normal Interaction Mode

Voice interactions are increasingly accepted in everyday workflows. Users are comfortable with voice agents handling outbound reminders, lead outreach, and simple inbound requests, provided the interaction feels responsive and respectful of conversational norms. Latency, interruptibility (barge-in), and speech quality matter as much as correctness. Poor voice performance breaks trust faster than text-based errors.
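Barge-in means the agent stops talking the moment the caller starts speaking. A minimal asyncio sketch of that control flow, assuming a simulated TTS playback loop and an `asyncio.Event` that, in a real system, voice activity detection on the caller's audio would set; all names are hypothetical.

```python
import asyncio
from typing import Optional


async def speak(sentences: list[str], spoken: list[str], seconds_per_sentence: float) -> None:
    """Stand-in for streaming TTS playback: 'plays' one sentence at a time."""
    for sentence in sentences:
        spoken.append(sentence)
        await asyncio.sleep(seconds_per_sentence)  # simulated audio duration


async def respond_with_barge_in(sentences: list[str], user_started_talking: asyncio.Event,
                                seconds_per_sentence: float = 0.1) -> list[str]:
    spoken: list[str] = []
    playback = asyncio.create_task(speak(sentences, spoken, seconds_per_sentence))
    listener = asyncio.create_task(user_started_talking.wait())
    await asyncio.wait({playback, listener}, return_when=asyncio.FIRST_COMPLETED)
    # Whichever finished first wins: if the user spoke, playback is cut off mid-response.
    for task in (playback, listener):
        if not task.done():
            task.cancel()
    await asyncio.gather(playback, listener, return_exceptions=True)
    return spoken


async def demo(interrupt_after: Optional[float]) -> list[str]:
    event = asyncio.Event()
    if interrupt_after is not None:
        # Simulates the caller starting to talk after `interrupt_after` seconds.
        asyncio.get_running_loop().call_later(interrupt_after, event.set)
    sentences = ["Your order has shipped.", "It should arrive Friday.", "Anything else I can help with?"]
    return await respond_with_barge_in(sentences, event)


if __name__ == "__main__":
    print(asyncio.run(demo(interrupt_after=0.15)))
```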

Always-On, Multilingual Access

Users also expect conversational AI to be available around the clock and in multiple languages. This is particularly transformative for SMBs and global organizations that previously could not afford 24/7, multilingual support. Conversational AI is becoming a core access layer for global communication rather than a premium feature.

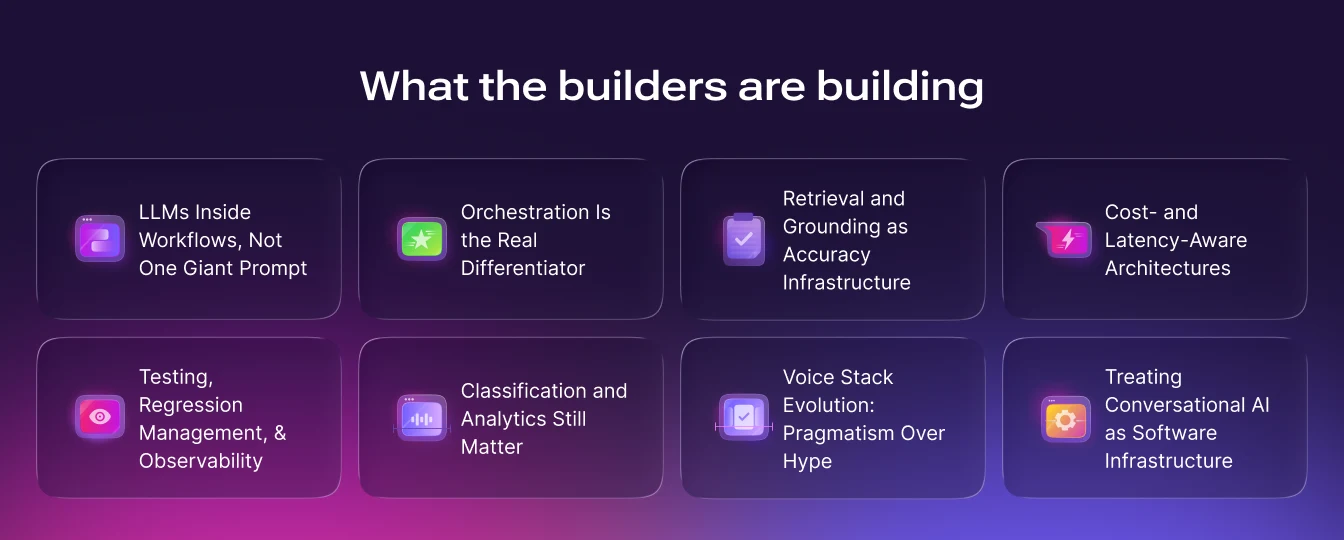

Conversational AI in 2026: Builder Point of View

LLMs Inside Workflows, Not One Giant Prompt

A key lesson emerging in 2026 is what not to do: builders should avoid shipping a single, massive “god prompt” that attempts to handle every scenario. These systems are fragile, difficult to debug, and impossible to measure effectively. Small changes can introduce unpredictable side effects, and failures are hard to localize.

Instead, builders are moving toward explicit workflows or state machines, where the conversation is broken into structured steps such as identifying user intent, authenticating, retrieving data, proposing options, and confirming actions. Within each step, the LLM performs a bounded task, such as selecting the next state or generating a response using specific tools. This approach trades maximal cleverness for predictability, control, and debuggability.
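The core of this pattern is that the model may only choose among transitions the workflow explicitly allows. A minimal sketch, assuming the model's choice arrives as a proposed `State`; the LLM call itself is omitted, and the state names mirror the steps listed above.

```python
from enum import Enum, auto


class State(Enum):
    IDENTIFY_INTENT = auto()
    AUTHENTICATE = auto()
    RETRIEVE_DATA = auto()
    PROPOSE_OPTIONS = auto()
    CONFIRM = auto()
    DONE = auto()
    ESCALATE = auto()


# Every allowed move is listed explicitly; anything else is rejected.
TRANSITIONS: dict[State, set[State]] = {
    State.IDENTIFY_INTENT: {State.AUTHENTICATE, State.ESCALATE},
    State.AUTHENTICATE: {State.RETRIEVE_DATA, State.ESCALATE},
    State.RETRIEVE_DATA: {State.PROPOSE_OPTIONS, State.ESCALATE},
    State.PROPOSE_OPTIONS: {State.CONFIRM, State.PROPOSE_OPTIONS, State.ESCALATE},
    State.CONFIRM: {State.DONE, State.PROPOSE_OPTIONS, State.ESCALATE},
}
TERMINAL = {State.DONE, State.ESCALATE}


def advance(current: State, proposed: State) -> State:
    """Accept the model's proposed next state only if the workflow allows that transition."""
    if current in TERMINAL:
        return current
    if proposed in TRANSITIONS[current]:
        return proposed
    return State.ESCALATE  # an illegal jump (e.g. skipping authentication) goes to a human
```

Because each step is a named state, logs show exactly where a conversation stalled, which is what makes this design measurable and debuggable.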

Orchestration Is the Real Differentiator

By 2026, access to strong language models is largely commoditized. Choosing one frontier model over another is rarely a lasting competitive advantage. What truly differentiates systems is the orchestration layer around the model.

This includes how state is managed across turns, how tools are invoked, how fallbacks and escalation are handled, and how policies are enforced. Effective orchestration allows teams to reason about system behavior, measure outcomes, and ensure compliance even as models evolve.
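Two orchestration concerns, policy enforcement and fallback handling, can live entirely in code around the model. A minimal sketch, assuming a hypothetical $100 refund auto-approval limit and placeholder backends; real systems would add logging, idempotency keys, and timeouts.

```python
from typing import Callable

Backend = Callable[[str, float], str]


def orchestrate(action: str, amount: float, backends: list[Backend],
                refund_auto_limit: float = 100.0) -> str:
    # Policy is enforced in code, so it holds no matter which model proposed the action.
    if action == "refund" and amount > refund_auto_limit:
        return "ESCALATE: refund above auto-approval limit"
    for backend in backends:  # primary first, then fallbacks in order
        try:
            return backend(action, amount)
        except (TimeoutError, ConnectionError):
            continue  # transient failure: try the next backend
    return "ESCALATE: all backends failed"


# Hypothetical backends for illustration.
def primary_down(action: str, amount: float) -> str:
    raise TimeoutError("payments API timed out")


def secondary(action: str, amount: float) -> str:
    return f"{action} of {amount:.2f} processed"
```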

Retrieval and Grounding as Accuracy Infrastructure

As conversational AI systems handle policy-driven and high-stakes interactions, retrieval quality becomes critical. Builders must design knowledge systems that go beyond simple vector search. This includes section-aware indexing, curated Q&A for sensitive policies, and deliberate grounding strategies to ensure responses remain accurate and auditable.

Without strong retrieval design, flexible LLM responses increase the risk of hallucinations and inconsistent policy enforcement, especially in domains like healthcare, refunds, and compliance-heavy workflows.
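Curated answers and section-aware indexing can be combined in a simple lookup order: exact curated policy first, then the best-matching section with its heading kept as a citation. A minimal sketch with hypothetical data; term overlap stands in for the vector or hybrid search a production system would use.

```python
import re

# Curated answers for sensitive policies are returned verbatim, bypassing free-form generation.
CURATED_QA = {
    "refund window": "Refunds are accepted within 30 days of delivery.",
}

# Section-aware index: each chunk keeps the heading it came from so answers can cite it.
SECTIONS = [
    ("Shipping > Delivery times", "Standard delivery takes 3 to 5 business days."),
    ("Returns > Damaged items", "Damaged items can be returned free of charge with a photo."),
]


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str) -> tuple[str, str]:
    """Return (answer, source) for a query."""
    q = tokens(query)
    for key, answer in CURATED_QA.items():
        if tokens(key) <= q:  # every key term appears in the query
            return answer, "curated:" + key
    # Score sections by term overlap with both heading and body text.
    best = max(SECTIONS, key=lambda s: len(q & tokens(s[0] + " " + s[1])))
    if not q & tokens(best[0] + " " + best[1]):
        return "I couldn't find that in our policies.", "none"
    return best[1], best[0]
```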

Cost- and Latency-Aware Architectures

Production conversational AI systems must actively manage cost and performance. Builders increasingly route simple queries to lightweight models while reserving stronger models for complex reasoning. Techniques such as caching, summarization, and context truncation are essential to maintain responsiveness and control operating costs.

These architectural choices are as important as model selection when deploying conversational AI at scale.
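Model routing, caching, and context truncation can be sketched together. This assumes hypothetical tier names and a keyword heuristic for "complex" queries; real routers often use a small classifier, and older turns could be summarized rather than dropped (that step is omitted here).

```python
import re
from functools import lru_cache

# Hypothetical tier names and routing heuristic.
LIGHT_MODEL, STRONG_MODEL = "light-model", "strong-model"
COMPLEX_HINTS = {"why", "compare", "explain", "plan", "troubleshoot"}


def pick_model(query: str) -> str:
    words = re.findall(r"[a-z']+", query.lower())
    if len(words) > 30 or COMPLEX_HINTS & set(words):
        return STRONG_MODEL
    return LIGHT_MODEL


def truncate_history(turns: list[str], max_turns: int = 6) -> list[str]:
    """Keep only the most recent turns to bound prompt size and cost."""
    return turns[-max_turns:] if max_turns > 0 else []


@lru_cache(maxsize=1024)
def cached_answer(normalized_query: str) -> str:
    # Stand-in for a model call; repeated identical questions are served from the cache.
    return f"[{pick_model(normalized_query)}] answer to: {normalized_query}"
```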

Testing, Regression Management, and Observability

Non-determinism is an inherent property of LLM-based systems. A change intended to fix one issue can silently break others. As a result, builders are investing early in scenario-based regression testing, where fixed conversational scenarios representing real user goals are re-run after every system change.

Beyond testing, observability has become a first-class concern. Teams monitor resolution rates, escalation frequency, drop-offs, safety signals, and customer satisfaction in real time. Judge models and rule-based checks are used to score task success, tone, and policy adherence. Optimization is driven by evidence, not intuition.
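Rule-based checks are the cheapest layer of this scoring. A minimal sketch, assuming hypothetical rules (no promises of guarantees, no card-like numbers, an explicit handoff message on escalation, a length cap); a judge model would typically cover fuzzier criteria such as tone.

```python
import re

# Hypothetical rules; each maps a check name to a pattern that must NOT appear.
FORBIDDEN = {
    "no_guarantees": re.compile(r"\bguarantee[sd]?\b", re.IGNORECASE),
    "no_card_numbers": re.compile(r"\b\d{13,19}\b"),
}


def check_reply(reply: str, escalated: bool, max_chars: int = 600) -> dict[str, bool]:
    """Return pass/fail for each rule on a single bot reply."""
    results = {name: not pattern.search(reply) for name, pattern in FORBIDDEN.items()}
    # If the conversation was escalated, the reply must tell the user a human is taking over.
    results["mentions_handoff"] = ("human agent" in reply.lower()) if escalated else True
    results["within_length"] = len(reply) <= max_chars
    return results
```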

Classification and Analytics Still Matter

A common misconception is that LLMs eliminate the need for classification. In reality, builders still need to bucket conversations by type, outcome, and risk level to understand where systems fail and where to invest improvement effort. Without segmentation, averages hide critical weaknesses. Classification enables targeted fixes, better prioritization, and measurable progress.
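The point about averages hiding weaknesses is easy to see with per-segment rates. A minimal sketch over hypothetical conversation records with `type` and `resolved` fields: the overall resolution rate looks healthy while one segment fails every time.

```python
from collections import defaultdict


def resolution_by_segment(conversations: list[dict], key: str) -> dict[str, float]:
    """Resolution rate per segment, where `key` names a field such as 'type' or 'risk'."""
    totals: dict[str, int] = defaultdict(int)
    resolved: dict[str, int] = defaultdict(int)
    for c in conversations:
        totals[c[key]] += 1
        resolved[c[key]] += int(c["resolved"])
    return {segment: resolved[segment] / totals[segment] for segment in totals}


# Hypothetical data: 8 order-status chats all resolved, 2 refund chats all failed.
conversations = (
    [{"type": "order_status", "resolved": True}] * 8
    + [{"type": "refund", "resolved": False}] * 2
)

if __name__ == "__main__":
    overall = sum(c["resolved"] for c in conversations) / len(conversations)
    print(f"overall: {overall:.0%}", resolution_by_segment(conversations, "type"))
    # overall: 80% {'order_status': 1.0, 'refund': 0.0}
```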

Voice Stack Evolution: Pragmatism Over Hype

In 2026, most production voice systems rely on cascaded architectures that combine speech recognition, language models, and text-to-speech. This approach remains preferred for accuracy, control, and debuggability, particularly in bookings, payments, and compliance-heavy workflows. End-to-end speech models are promising, but they are harder to debug and less predictable. They are more likely to succeed first in unconstrained, experience-first scenarios such as coaching or outbound engagement.
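Part of why cascades are easier to debug is that every stage produces an inspectable intermediate result with its own latency. A minimal sketch, assuming the three stages are passed in as plain callables; the lambdas in the usage block are hypothetical stubs, not real speech or model services.

```python
import time
from typing import Callable


def run_cascade(audio: bytes,
                asr: Callable[[bytes], str],
                llm: Callable[[str], str],
                tts: Callable[[str], bytes]) -> dict:
    """Run ASR -> LLM -> TTS and keep every intermediate result plus per-stage latency."""
    timings: dict[str, float] = {}

    start = time.perf_counter()
    transcript = asr(audio)
    timings["asr_ms"] = (time.perf_counter() - start) * 1000

    start = time.perf_counter()
    reply_text = llm(transcript)
    timings["llm_ms"] = (time.perf_counter() - start) * 1000

    start = time.perf_counter()
    reply_audio = tts(reply_text)
    timings["tts_ms"] = (time.perf_counter() - start) * 1000

    # A wrong answer can be traced to a misheard transcript (ASR) or a bad decision (LLM)
    # by inspecting the text passed between stages.
    return {"transcript": transcript, "reply_text": reply_text,
            "reply_audio": reply_audio, "timings_ms": timings}


if __name__ == "__main__":
    result = run_cascade(
        b"i want to book a table",
        asr=lambda audio: audio.decode("utf-8"),
        llm=lambda text: f"Sure, for how many people? (heard: {text})",
        tts=lambda text: text.encode("utf-8"),
    )
    print(result["transcript"], result["timings_ms"])
```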

Treating Conversational AI as Software Infrastructure

Organizations are increasingly structuring conversational AI as a core software system rather than a one-off project. Business operations teams define SOPs, policies, and escalation rules. Engineering and AI teams own orchestration, integrations, testing, and observability. Conversation designers focus on tone, trust, and sensitive flows. This clear ownership enables repeatable deployments and reduces dependence on heavy professional services.

A common pattern among successful teams is to start narrow, deploy to a constrained use case, iterate through transcript review, and expand coverage deliberately. The goal is predictable outcomes and stable performance, not flashy demos that fail in production.

Why Use Murf’s AI Voice Agent?

As conversational AI systems mature into orchestrated, voice-enabled infrastructure, the underlying voice layer must meet production-grade requirements. Murf’s AI Voice Agent is designed for this reality.

Core Performance

- Ultra-low latency powered by in-house TTS models like Falcon, delivering total response latency of approximately 900 ms for natural, interruptible conversations.

- Lifelike conversational speech with 99.38% pronunciation accuracy across more than 150 voices.

- Seamless multilingual support across 35+ languages and accents.

Reliability and Control

- Built for cascaded voice architectures that prioritize accuracy and debuggability.

- Smooth warm handoffs to human agents for complex or high-risk interactions.

- Full control over dialing schedules, retry logic, voice selection, and scripts.

- Enterprise-grade security with secure cloud or on-prem deployment, encrypted data handling, and SOC 2 and GDPR compliance.

Murf’s AI Voice Agent aligns with the direction conversational AI is taking in 2026: reliable, orchestrated, multilingual systems that feel natural to users while being engineered with the rigor of serious backend infrastructure.

Conversational AI is transforming how businesses communicate by enabling systems to understand and generate natural language and to deliver appropriate responses across every customer interaction. These systems can answer frequently asked questions and handle complex customer inquiries with greater accuracy, while automating routine tasks and improving operational efficiency.

Frequently Asked Questions

What is Conversational AI?

Conversational AI is a technology that enables two-way, human-like communication through text or voice between users and machines. It uses NLP, machine learning, deep learning, and generative AI to understand intent, interpret context, and deliver natural, accurate responses. It powers chatbots, voice assistants, and automated agents that handle customer requests, provide support, and personalize interactions at scale.

What is the difference between Conversational AI and Generative AI?

Conversational AI focuses on managing dialog, understanding user intent, maintaining context, and producing appropriate, task-oriented responses. Generative AI creates new content such as explanations, summaries, or contextual replies using large foundation models. When combined, they enable more dynamic, context-aware, and human-like conversations.

What is the difference between conversational AI and chatbots?

A traditional chatbot is a rule-based system designed to respond to specific user inputs, typically handling straightforward tasks such as FAQs or appointment bookings. It operates within predefined scripts and can answer questions only when they match set conditions. In contrast, conversational AI understands intent, interprets context, and continuously learns from interactions. As a result, it can deliver personalized, adaptive responses and manage more nuanced, human-like conversations. That said, modern AI-powered chatbots are themselves a form of conversational AI and can answer questions in a far more personalized way.

Which industries use conversational AI?

Conversational AI is widely adopted across:

- Customer Service: Automated support, omnichannel assistance, intent-based resolution.

- Marketing & Sales: Lead qualification, personalized recommendations, automated follow-ups.

- HR & Internal Operations: Onboarding, recruitment automation, IT/HR helpdesk.

- Retail: Product discovery, inventory checks, guided shopping, post-purchase support.

- Banking & Financial Services: Transactions, fraud alerts, financial advice, account queries.

These industries use conversational AI to reduce costs, increase productivity, and deliver faster, more personalized customer experiences.