Translation and Subtitling Made Simple: Complete Guide for Global Video Reach

Today, people consume content in many formats, and video leads the pack. Videos can reach people all over the world, but language barriers often keep the message from landing. That’s where translation and subtitling help.

Translation converts text or speech from one language to another, while subtitling turns spoken dialogue into words on the screen. You see both everywhere: Netflix shows with translated subtitles, or YouTube videos offering subtitles in different languages.

These tools make it easier for people to watch content, no matter where they’re from or what language they speak.

Subtitles also make videos more inclusive for deaf and hard-of-hearing viewers, which is essential for reaching broader audiences, and translation helps creators reach new markets across cultures.

Whether it’s a TV series, a brand’s promotional video, or an online course, adding subtitles and translations ensures your original message makes sense in the target language and connects with audiences around the world.

In this article, we’ll explore both concepts in detail and look at how AI voice tools, like those created by Murf, can help enhance them in real time.

What Is Translation?

Translation is the process of converting content from one language to another. In simple terms, it means changing the words without losing their original meaning, tone, or emotion. In videos, this can take different forms. Sometimes a voice actor replaces the original dialogue through dubbing. Other times, a narrator speaks over the original audio, which is known as a voice-over.

For example, when a Spanish film is available in English, a translator has carefully translated every word and phrase so the audience understands not just what’s said, but how it’s said. The goal is to preserve the original message, emotions, and rhythm of the source language.

In today’s translation industry, both human translators and machine translation tools play a role. While technology speeds up the process, human language experts ensure the translation sounds natural and reflects the target culture accurately.

What Is Subtitling?

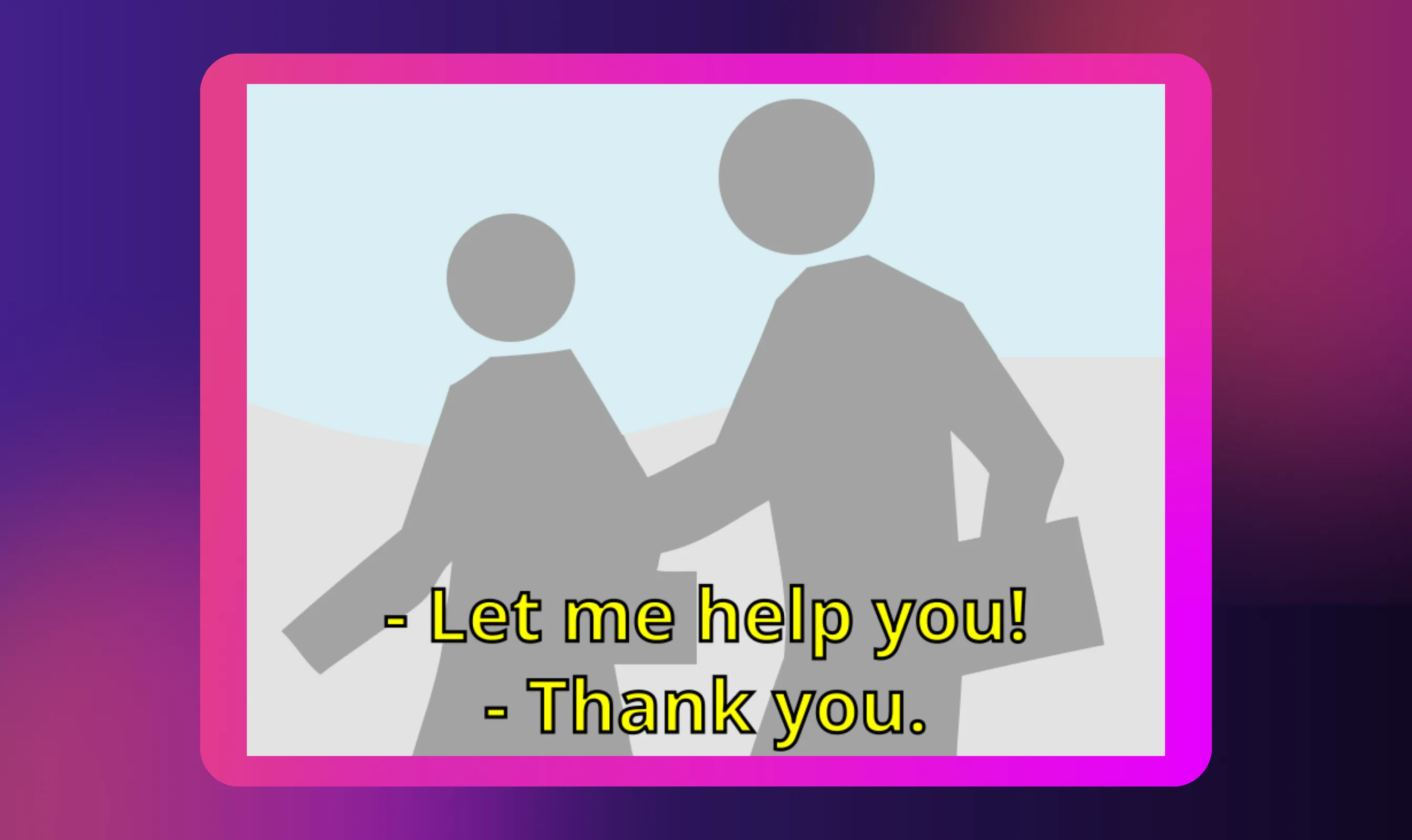

Subtitling is the process of displaying written text on a screen to represent spoken dialogue, narration, or even key sounds. These lines of text appear as subtitles at the bottom of a video, helping viewers follow along as they watch content.

But subtitles aren’t just for translation. They also make videos more inclusive and improve accessibility for deaf and hard-of-hearing viewers. For example, closed captions often include audio cues like “[music playing]” or “[speaker laughs],” giving everyone a complete viewing experience.

Sometimes, subtitles simply show words in the same language as the spoken dialogue to help with clarity. Other times, they’re translated subtitles that carry meaning from one language to another. Think of an English TV series with Spanish subtitle translations, or a company’s global campaign video offering subtitles in different languages for their target audience.

Difference Between Translation and Subtitling

Translation and subtitling often go hand in hand, but they’re not the same thing. The main difference lies in their purpose and how they’re applied.

Translation is about transforming spoken dialogue or written text from one language to another, keeping the tone and meaning true to the original message.

Subtitling, on the other hand, is about how that translation appears on screen. Each subtitle has to be timed to the speech, appearing and disappearing with the speaker, and written to fit within character limits and a comfortable reading speed.

Here’s how they differ in practice:

- Purpose: Translation focuses on meaning, context, and cultural nuances, while subtitling focuses on visual clarity and timing.

- Format: Translation can take the form of dubbing, voice-over, or written text. Subtitles appear as one or two short lines of text, usually at the bottom of the screen.

- Audience Experience: In translation, audiences hear the content in the target language; in subtitling, viewers read subtitles while hearing the original audio.

- Execution: Translators aim for linguistic and emotional accuracy; subtitlers balance timing, style, and accessibility.

- Goal: Both aim to help people from different cultures understand the message, but translation changes the language itself, while subtitling makes that translation readable and synced on screen.

Together, they make videos more inclusive, breaking language barriers and helping creators connect with a global audience.

Why Translation & Subtitling Matter Today

We live in a world where videos speak louder than anything else. But without translation and subtitling, those videos can only go so far. If your content stays in one language, you’re cutting off entire audiences who might love what you create but simply can’t understand it.

Translation and subtitling make your videos global. They help your original message reach different languages and cultures, turning local stories into worldwide experiences. Think about Netflix, which offers a large multilingual catalog with translated subtitles and dubbing for much of its TV and film lineup. Or consider YouTube videos with auto-captions: those subtitles don’t just help viewers; they can also support SEO, keeping your content visible and searchable.

Subtitles can also increase watch time, because viewers can follow along in noisy environments or when the audio isn’t clear. And they make your videos accessible to deaf and hard-of-hearing viewers, promoting true inclusivity.

The rise of global creators, digital classrooms, and remote companies has made translation and subtitling essential tools for growth. With AI-powered tools such as Whisper (speech recognition) and DeepL (machine translation), creators and brands can now transcribe, translate, and subtitle their videos much faster than with manual workflows alone.

In short, bridging language barriers isn’t optional anymore. It’s how you connect, engage, and stay relevant in a world that never stops speaking.

Key Challenges in Translation + Subtitling

Even though translation and subtitling make videos accessible and engaging across languages, the process is not as simple as it looks. Translators, interpreters, and language experts face several challenges that can affect the accuracy, timing, and emotional impact of a subtitled video.

Timing & Synchronization Constraints

Good subtitling is all about timing. Every subtitle must match the spoken dialogue, appearing when the speaker starts talking and disappearing shortly after they stop, before the next line begins. When timing is off, viewers lose focus and the original message feels out of sync.

Subtitle editors like Subtitle Edit, Aegisub, and Amara help manage timing and synchronization. They let translators align subtitles precisely with the audio or with individual video frames. A delay of even half a second can break immersion, so fine-tuning display times and reading speed is critical for a seamless experience.
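When a whole subtitle track is early or late by a constant amount, such as that half-second delay, a simple offset fixes it. The Python sketch below assumes SRT input and a uniform delay. `shift_srt` and its helpers are illustrative names, not part of Subtitle Edit, Aegisub, or Amara, which offer far richer timing controls.

```python
import re

# Matches SRT timestamps such as 00:01:02,500 (hours:minutes:seconds,milliseconds).
TIMESTAMP = re.compile(r"(\d{2}):(\d{2}):(\d{2}),(\d{3})")


def to_ms(h: str, m: str, s: str, ms: str) -> int:
    """Convert captured timestamp parts into total milliseconds."""
    return ((int(h) * 60 + int(m)) * 60 + int(s)) * 1000 + int(ms)


def from_ms(total: int) -> str:
    """Format milliseconds as an SRT timestamp, clamping negative values to zero."""
    total = max(total, 0)
    h, rest = divmod(total, 3_600_000)
    m, rest = divmod(rest, 60_000)
    s, ms = divmod(rest, 1000)
    return f"{h:02d}:{m:02d}:{s:02d},{ms:03d}"


def shift_srt(text: str, offset_ms: int) -> str:
    """Move every timestamp in an SRT document by offset_ms (negative = earlier)."""
    return TIMESTAMP.sub(lambda match: from_ms(to_ms(*match.groups()) + offset_ms), text)


sample = "1\n00:00:01,200 --> 00:00:03,000\nHello there.\n"
print(shift_srt(sample, 500))  # the cue now runs 00:00:01,700 --> 00:00:03,500
```

Cue numbers are left alone because they don't match the timestamp pattern. Drift that grows over the video, often caused by a frame-rate mismatch, needs scaling rather than a fixed offset.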

Condensation & Readability

Unlike written text, subtitles can’t include every word that’s spoken. Viewers have only a few seconds to read, so translators must condense dialogue without losing its meaning.

For instance, a character saying, “I can’t believe you went all the way to Paris just to surprise me,” might become “You went to Paris to surprise me?” It’s shorter, but the message stays the same.

Best practices for readability include:

- Limiting each subtitle to two lines

- Keeping each line to roughly 35–42 characters at most

- Ensuring comfortable reading speed for audiences of all ages

These small details keep subtitles easy to follow without distracting from the video.
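The two-line and characters-per-line limits are easy to check automatically. Here is a minimal Python sketch. It assumes a 42-character ceiling (the top of the 35–42 range above) and takes one subtitle as a string with line breaks. `readability_issues` is a hypothetical helper, and real style guides set their own limits.

```python
MAX_LINES = 2
MAX_CHARS_PER_LINE = 42  # upper end of the 35–42 range; adjust to your style guide


def readability_issues(subtitle: str) -> list[str]:
    """Return readable descriptions of problems in one subtitle (empty list = passes)."""
    issues = []
    lines = subtitle.splitlines()
    if len(lines) > MAX_LINES:
        issues.append(f"{len(lines)} lines (max {MAX_LINES})")
    for number, line in enumerate(lines, start=1):
        if len(line) > MAX_CHARS_PER_LINE:
            issues.append(f"line {number} has {len(line)} characters (max {MAX_CHARS_PER_LINE})")
    return issues


print(readability_issues("You went to Paris to surprise me?"))
print(readability_issues("I can't believe you went all the way to Paris just to surprise me"))
```

`len()` counts Unicode code points, which works as an approximation for Latin scripts. Languages such as Japanese or Chinese usually follow different per-line limits.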

Cultural Idioms, Localization & Context

One of the hardest parts of translation and interpretation is handling cultural nuances. A literal translation may be accurate, but it often misses the emotional tone or humor of the source text.

Take idioms, for example. The English phrase “break a leg” doesn’t make sense in Japanese or Hindi if translated word-for-word. It needs to be localized to convey “good luck” in a way that fits the target culture.

That’s why localization matters. It adapts words, tone, and style so the audience feels the same emotion the original text intended. Great subtitle translations don’t just make sense; they feel natural to people from other cultures.

Quality Assurance & Consistency

Before a subtitled video goes live, it passes through a detailed quality assurance process. This involves proofreading, checking grammar, verifying timing, and ensuring consistency in terminology.

Professional translation services often use subject matter experts to confirm that technical terms are accurate. They also rely on glossaries and translation memories to keep recurring phrases consistent across videos.

Many teams use QA tools or a double-review workflow, where one translator writes and another reviews. This ensures that every subtitle fits the context, matches the audio, and stays faithful to the source language.
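A glossary check is one of the simplest automated QA passes. The sketch below assumes a tiny hypothetical English-to-Spanish glossary and a list of (source, translation) subtitle pairs. It flags lines where a glossary term appears in the source but the approved translation is missing. Commercial QA tools also handle inflections, plurals, and translation memories, and this sketch does not.

```python
import re

# Hypothetical glossary: source-language term -> approved target-language term.
GLOSSARY = {
    "dashboard": "panel de control",
    "sign in": "iniciar sesión",
}


def contains_term(text: str, term: str) -> bool:
    """Case-insensitive whole-word match, so 'redesign in' does not count as 'sign in'."""
    return re.search(rf"\b{re.escape(term)}\b", text, re.IGNORECASE) is not None


def glossary_violations(pairs, glossary=GLOSSARY):
    """Yield (index, term) when the source uses a glossary term but the
    translation lacks the approved equivalent."""
    for index, (source, target) in enumerate(pairs):
        for term, approved in glossary.items():
            if contains_term(source, term) and not contains_term(target, approved):
                yield index, term


subtitles = [
    ("Open the dashboard.", "Abre el panel de control."),
    ("Sign in to continue.", "Accede para continuar."),
]
print(list(glossary_violations(subtitles)))  # [(1, 'sign in')]
```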

File Formats and Technical Constraints

Finally, there’s the technical side of subtitle translation. Different platforms require different subtitle file formats, such as SRT (.srt), WebVTT (.vtt), ASS (.ass), or TTML. These formats define how subtitles appear, how they sync with audio, and how line breaks are handled.

Using the wrong format or encoding can cause garbled characters or timing errors. Most professionals use UTF-8 encoding to support different languages and special characters. Frame rates, display times, and line breaks must also be adjusted carefully to keep subtitles in sync with the video.
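Converting between formats often comes down to small syntax differences. As a rough sketch, assuming a well-formed SRT file, the main changes for WebVTT are the mandatory `WEBVTT` header and a period instead of a comma before the milliseconds. The helper names are illustrative. The file function reads and writes UTF-8 explicitly.

```python
import re
from pathlib import Path

# SRT puts a comma before the milliseconds; WebVTT uses a period.
SRT_TIME = re.compile(r"(\d{2}:\d{2}:\d{2}),(\d{3})")


def srt_to_vtt(srt_text: str) -> str:
    """Convert SRT text to WebVTT by adding the header and fixing timestamps."""
    body = srt_text.lstrip("\ufeff").replace("\r\n", "\n")  # drop a BOM, normalize line endings
    body = SRT_TIME.sub(r"\1.\2", body)
    return "WEBVTT\n\n" + body


def convert_file(src: Path, dst: Path) -> None:
    """Read and write UTF-8 explicitly so accented and non-Latin text survives."""
    text = src.read_text(encoding="utf-8-sig")  # utf-8-sig also strips a BOM if present
    dst.write_text(srt_to_vtt(text), encoding="utf-8")
```

Numeric SRT cue numbers are valid WebVTT cue identifiers, so they can stay. Some SRT files use `<font>` color tags, which aren’t valid WebVTT markup. For complex files, a dedicated subtitle editor is the safer choice.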

Getting the technical details right ensures that subtitles display correctly across YouTube videos, streaming platforms, and other media players, maintaining the same quality everywhere.

Process for Translation + Subtitling

Here’s how the process of translation and subtitling works in the real world.

Phase 1: Source Subtitles

Everything begins with the raw audio track, which captures tone, pauses, laughter, and sighs. The first step is to turn that audio into an accurate transcript. You can do this manually (old-school transcription) or use AI-powered tools that automatically transcribe speech into text. But here’s the catch: if your transcript is wrong, every step after it will be too.

A missing word here or a mistimed sentence there can distort meaning later. That’s why professional workflows use AI tools trained for accuracy, followed by human review to fix names, slang, or regional phrases.
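AI transcription tools typically return timestamped segments. The sketch below assumes segments shaped like the output of OpenAI’s open-source Whisper: dictionaries with `start` and `end` in seconds plus `text`. It turns them into a numbered SRT file that a human reviewer can then correct. `segments_to_srt` is a hypothetical helper, not part of Whisper itself.

```python
def srt_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp (HH:MM:SS,mmm)."""
    total_ms = round(seconds * 1000)
    h, rest = divmod(total_ms, 3_600_000)
    m, rest = divmod(rest, 60_000)
    s, ms = divmod(rest, 1000)
    return f"{h:02d}:{m:02d}:{s:02d},{ms:03d}"


def segments_to_srt(segments) -> str:
    """Turn timestamped transcript segments into numbered SRT cues."""
    cues = []
    for number, seg in enumerate(segments, start=1):
        start, end = srt_timestamp(seg["start"]), srt_timestamp(seg["end"])
        # Strip the leading spaces that transcription output often includes.
        cues.append(f"{number}\n{start} --> {end}\n{seg['text'].strip()}\n")
    return "\n".join(cues)  # a blank line separates consecutive cues


segments = [
    {"start": 0.0, "end": 2.5, "text": " Welcome back."},
    {"start": 2.5, "end": 4.0, "text": " Let's begin."},
]
print(segments_to_srt(segments))
```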

Phase 2: Localization

Translation isn’t just word-swapping; it’s culture-swapping. Line by line, the translator adapts tone, idioms, and intent so the new version feels native to its audience. Think of it as rewriting without losing the soul.

Here, memory tools and glossaries help keep terms consistent across episodes or campaigns. But the real magic comes from localization, where the phrase “break a leg” doesn’t become “injure yourself.”

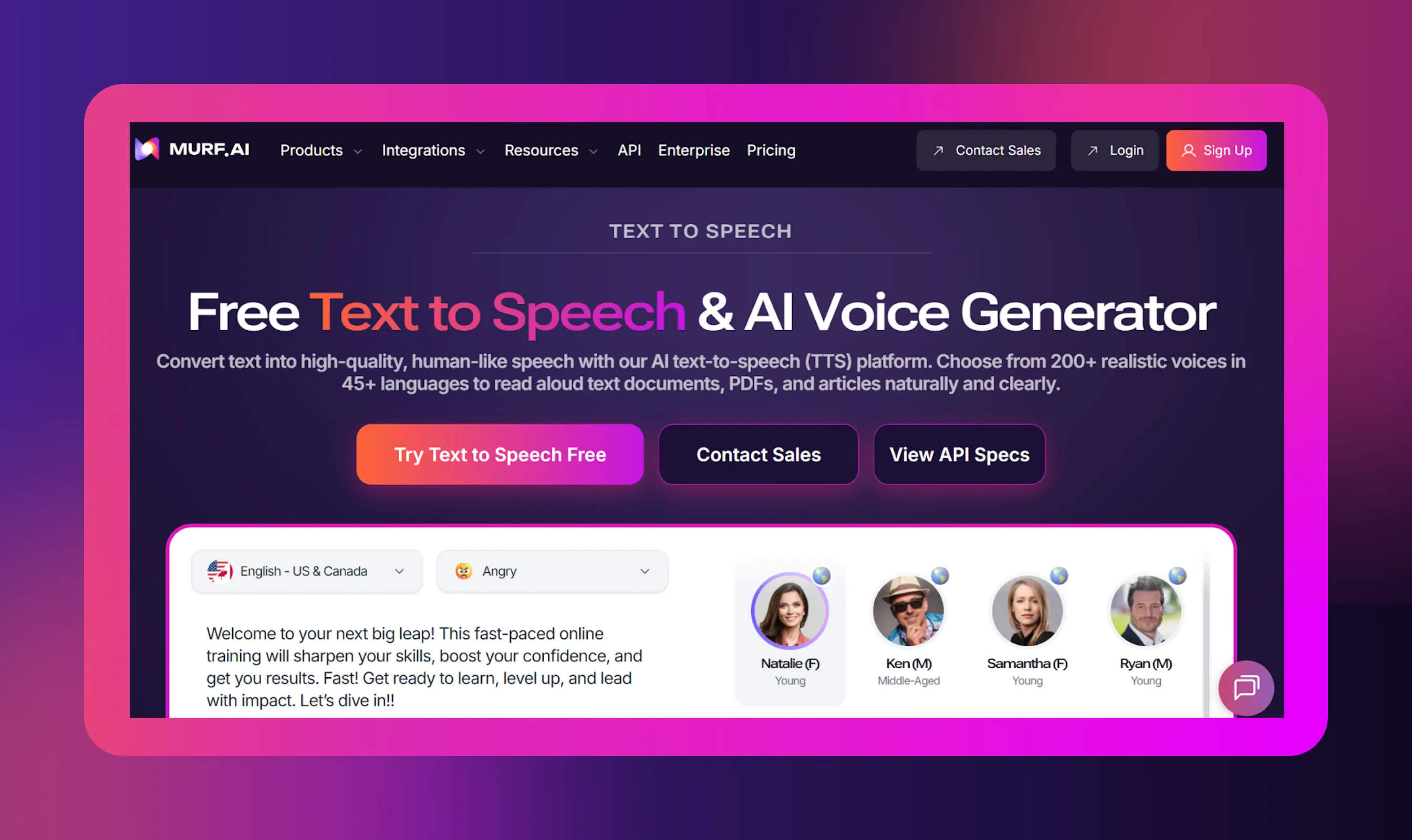

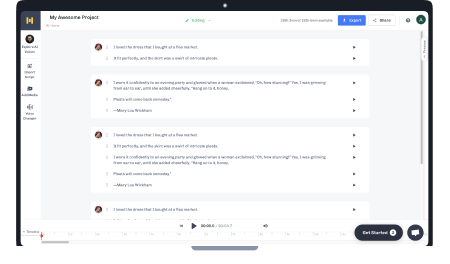

This is where tools like Murf AI, with its audio and video translation capabilities, shine. Murf’s AI Video Translator doesn’t just convert words; it accounts for pacing, delivery, and context, helping creators scale multilingual versions of their content faster while preserving emotion.

Phase 3: Spotting & Timing

Next comes the art of alignment, i.e., matching every subtitle with the rhythm of speech. Each line must appear and disappear naturally, synced with dialogue but never rushed.

Spotting rules govern the flow of captions:

- A subtitle typically stays for 1 to 6 seconds.

- Each line holds about 35–42 characters max.

- A short gap of a few frames between consecutive subtitles prevents overlap and visual clutter.

This is where the work starts to feel like music, a dance between text, timing, and tone. When it’s done well, viewers forget they’re even reading.
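The spotting rules translate directly into automated checks. This Python sketch assumes cues with start and end times in seconds, a 25 fps video, and a hypothetical two-frame minimum gap. The 1–6 second window comes from the rules above. Names like `Cue` and `spotting_issues` are illustrative.

```python
from dataclasses import dataclass

MIN_DURATION = 1.0  # seconds on screen
MAX_DURATION = 6.0  # seconds on screen
MIN_GAP_FRAMES = 2  # assumed house rule; real style guides vary


@dataclass
class Cue:
    start: float  # seconds
    end: float  # seconds
    text: str


def spotting_issues(cues: list[Cue], fps: float = 25.0) -> list[str]:
    """Flag cues that are too short or too long, or too close to the next cue."""
    issues = []
    min_gap = MIN_GAP_FRAMES / fps  # convert the frame gap into seconds
    for i, cue in enumerate(cues, start=1):
        duration = cue.end - cue.start
        if not MIN_DURATION <= duration <= MAX_DURATION:
            issues.append(f"cue {i}: on screen for {duration:.2f}s")
        if i < len(cues):  # cues[i] is the next cue because i is 1-based
            gap = cues[i].start - cue.end  # negative means the cues overlap
            if gap < min_gap:
                issues.append(f"cue {i}: only {gap:.2f}s before the next cue")
    return issues


cues = [Cue(0.0, 2.0, "Hi"), Cue(2.0, 2.5, "Short"), Cue(3.0, 9.5, "Long")]
for issue in spotting_issues(cues):
    print(issue)
```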

Phase 4: Insert & Format Subtitles

Once translation and timing are in place, the focus shifts to design. Font, size, and color contrast matter more than people think. White text with a thin black outline is still the most widely used choice for visibility.

Positioning is key too. Subtitles usually sit bottom-center, but they must avoid covering important visuals or speaker faces. And since audiences watch on phones, TVs, and projectors, formatting should adapt to every screen.

Phase 5: Review & QA/Testing

Every subtitle is reviewed on-screen with the audio. Reviewers look for awkward line breaks, missing punctuation, and emotional mismatches.

Many studios even bring in native speakers for a final pass to ensure authenticity. Some run focus-group reviews to gauge readability and pacing. The goal? Subtitles that blend so seamlessly with the story that they feel invisible.

Phase 6: Rendering/Exporting & Deployment

Finally, the subtitles are exported in the format the platform requires (such as .SRT, .VTT, or .ASS). Some creators deliver soft subtitles, a separate track that viewers can toggle on or off. Others burn them directly into the video (hardcoding) for full control over style and placement.
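You can produce both delivery options with FFmpeg. The sketch below only builds the command lines. To execute one, pass it to `subprocess.run(cmd, check=True)`. It makes three assumptions: FFmpeg is installed, the output is an MP4 (which stores soft subtitles as `mov_text`), and, for burn-in, the FFmpeg build includes the libass-based `subtitles` filter. The file names are hypothetical.

```python
import shlex


def soft_subtitle_cmd(video: str, subs: str, output: str) -> list[str]:
    """Add subtitles as a separate, toggleable track without re-encoding."""
    return [
        "ffmpeg", "-i", video, "-i", subs,
        "-map", "0", "-map", "1",          # keep the original streams plus the subtitle file
        "-c", "copy", "-c:s", "mov_text",  # copy audio/video; MP4 stores text subs as mov_text
        output,
    ]


def burn_in_cmd(video: str, subs: str, output: str) -> list[str]:
    """Render subtitles into the picture (re-encodes the video; needs libass)."""
    return ["ffmpeg", "-i", video, "-vf", f"subtitles={subs}", "-c:a", "copy", output]


print(shlex.join(soft_subtitle_cmd("talk.mp4", "talk.es.srt", "talk_soft.mp4")))
print(shlex.join(burn_in_cmd("talk.mp4", "talk.es.srt", "talk_burned.mp4")))
```

Burning in re-encodes the video, so it takes longer and can’t be undone. The soft track is copied in without re-encoding and stays optional for viewers.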

Before release, everything is tested across devices and streaming platforms (from YouTube to OTT players) to confirm timing, readability, and encoding hold up.

At this stage, what began as raw audio has transformed into a globally accessible experience. The final output is ready to speak to anyone, anywhere, in their own language.

Conclusion

Translation and subtitling aren’t just technical steps; they’re what let stories, brands, and ideas travel. You need accuracy, cultural smarts, and the right mix of tech and human know-how. Every stage, from transcribing what’s said to syncing the timing and exporting the final file, shapes how people connect with your content. When you get it right, you reach more people, make your work easier to access, and keep audiences engaged, no matter where they are. Whether you’re a creator, teacher, or marketer, putting real thought into translation and subtitles turns local content into a global conversation.

Frequently Asked Questions

What’s the difference between translation and subtitling?


Translation means turning spoken or written words from one language into another. It covers things like text, voice-overs, or dubbing. Subtitling is about putting that translated text on the screen, timed perfectly so people can read along. Translation captures the meaning; subtitling shapes the experience.

Is transcribing the same as translating?


Not at all. Transcribing is just writing down what’s said, word for word, in the same language. Translation takes that text and adapts it into a new language, keeping the tone and context intact.

Which subtitle file formats should I use (SRT, VTT, ASS)?


Go with SRT if you want something simple and widely supported: YouTube, Vimeo, and most video players handle it. VTT (WebVTT) adds styling and positioning options and is the caption format used by HTML5 web players, so it works well for the web. ASS is for when you need fancy formatting, like in anime or creative videos.

What is SDH (Subtitles for Deaf & Hard of Hearing) and how is it different?


SDH covers more than just dialogue. It includes sound effects and tells you who’s speaking (things like [music playing] or [door creaks] and so on). It’s made for viewers who can’t hear the audio, so they get the full story, not just the words.

How do you make sure subtitles are timed and easy to read?


Stick to the basics: leave each subtitle up long enough for people to read it (about a second for every three words), and don’t let subtitles overlap. Keep lines short, at most about 42 characters, use high-contrast fonts, and always check how they look on different screens. Most importantly, test your subtitles with real people and tweak them until they feel just right.
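The one-second-per-three-words rule of thumb is simple arithmetic. Here is a minimal sketch that assumes words are separated by spaces and clamps the result to the common 1–6 second on-screen range. `suggested_duration` is a hypothetical helper.

```python
WORDS_PER_SECOND = 3  # "about a second for every three words"
MIN_SECONDS, MAX_SECONDS = 1.0, 6.0  # typical on-screen range for one subtitle


def suggested_duration(text: str) -> float:
    """Estimate how long a subtitle should stay on screen, in seconds."""
    words = len(text.split())
    seconds = words / WORDS_PER_SECOND
    return min(max(seconds, MIN_SECONDS), MAX_SECONDS)


print(suggested_duration("You went to Paris to surprise me?"))  # 7 words -> about 2.33 s
print(suggested_duration("Hi."))  # clamped up to the 1-second minimum
```

At this rate, a line that would need more than six seconds (over 18 words) is usually a sign that it should be split or condensed.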