Neural Text to Speech: A Complete Guide

Key Takeaways

- Neural text to speech uses advanced AI to create voices that feel human, expressive, and far more natural than older synthetic speech.

- It reduces listening fatigue and improves how people connect with chatbots, IVR systems, and digital assistants.

- Modern NTTS supports emotion, accents, and context awareness, making conversations flow more like real human talk.

- Real-world adoption is growing across content creation, accessibility, customer support, and entertainment.

- Platforms like Murf are pushing the tech forward with features such as voice cloning, detailed voice control, and a high-performance API built for scale.

Neural Text to Speech and Its Rapid Evolution

Neural text to speech is a modern form of speech synthesis that uses artificial intelligence to turn text into lifelike audio. Instead of producing a mechanical, flat output, it generates natural-sounding speech that feels closer to how a real human would talk.

Early TTS systems were limited in their ability to produce expressive, emotionally rich speech. To generate realistic voices, they needed to model the complex dynamics of the human vocal system.

The turning point came with deep learning and massive speech datasets. Suddenly, neural TTS systems could learn the patterns that make human speech expressive. They captured everything: the shifts in pitch, variations in tone, and all the subtle quirks that give real voices personality.

As these neural networks evolved, they started incorporating emotion-specific acoustic features directly into the model. This means the system doesn’t just say the words but delivers them like a person who’s happy, sad, calm, excited, or even angry.

Early neural text to speech models also required large amounts of data to train effectively, but newer models need far less.

At its core, a neural network learns from massive sets of recorded speech and language data. Once trained, it can create voices that reflect realistic tone, pitch, pacing, and emotional cues.

The result? Audio that sounds like it came from a human speaker, not a computer.

How Neural Text to Speech Works

Traditional TTS systems relied on rule-based techniques and basic concatenation methods. They worked, but the quality was limited. Fortunately, the neural evolution changed the game.

By using machine learning models inspired by the human brain, neural TTS captures complex patterns of human speech including pauses, emphasis, and expressive tones. These models don’t just read words; they understand context, intent, and rhythm, then produce audio that feels natural and engaging.

In simple terms, the neural network analyzes the text, predicts how it should sound, and generates voice output that delivers accuracy and personality. As a result, there's been a massive upgrade in customer experience and content creation workflows.
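
The three steps above can be pictured with a toy sketch. Everything in it is an assumption made for illustration: the function names, the pitch and duration values, and the hand-written rules are hypothetical stand-ins for what a trained neural network would learn from recorded speech, and the sine tone stands in for a neural vocoder. It does not describe how any particular product works; it only shows the flow from text analysis to prosody prediction to waveform generation.

```python
import math
import re
from dataclasses import dataclass

SAMPLE_RATE = 16_000  # samples per second; an assumed value for this toy example
PUNCTUATION = {".", ",", "!", "?"}


@dataclass
class ProsodyFrame:
    token: str
    pitch_hz: float   # 0.0 means silence (a pause)
    duration_ms: int


def analyze_text(text: str) -> list[str]:
    """Step 1: split the text into word and punctuation tokens."""
    return re.findall(r"[A-Za-z']+|[.,!?]", text)


def predict_prosody(tokens: list[str]) -> list[ProsodyFrame]:
    """Step 2: decide how each token should sound.

    A neural model would learn pitch and timing from data; the fixed
    rules below are only a hypothetical placeholder for that model.
    """
    frames = []
    for i, token in enumerate(tokens):
        if token in PUNCTUATION:
            # Punctuation becomes a pause; commas get a shorter one.
            frames.append(ProsodyFrame(token, 0.0, 150 if token == "," else 300))
            continue
        next_token = tokens[i + 1] if i + 1 < len(tokens) else ""
        pitch = 120.0                      # assumed neutral pitch
        if next_token == "?":
            pitch = 160.0                  # rising pitch before a question mark
        elif next_token == "!":
            pitch = 140.0                  # slightly raised pitch for emphasis
        frames.append(ProsodyFrame(token, pitch, 80 * len(token)))
    return frames


def synthesize(frames: list[ProsodyFrame]) -> list[float]:
    """Step 3: render audio samples (a plain sine tone per token)."""
    samples = []
    for frame in frames:
        n = frame.duration_ms * SAMPLE_RATE // 1000
        for k in range(n):
            if frame.pitch_hz == 0.0:
                samples.append(0.0)
            else:
                angle = 2 * math.pi * frame.pitch_hz * k / SAMPLE_RATE
                samples.append(math.sin(angle))
    return samples


frames = predict_prosody(analyze_text("Hello, world?"))
audio = synthesize(frames)
print(len(audio) / SAMPLE_RATE, "seconds of audio")
```

In a real neural system, steps 2 and 3 are learned from recorded speech rather than written by hand, which is where the natural pitch, pacing, and emphasis come from.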

How Neural Text to Speech Differs from Traditional Text to Speech

Traditional TTS systems use rule-based or statistical models and techniques to synthesize speech from text. These systems typically rely on pre-defined linguistic and acoustic models to generate speech. As such, the output lacks natural prosody, rhythm, and intonation.

Neural text to speech changes that. It uses neural networks trained end to end on massive speech and language data. Instead of following fixed instructions, it learns how real human speech behaves: pitch, pacing, intonation, and subtle emotional cues.

Because of this, neural TTS can create voices that feel far more human, while offering flexibility to adjust tone, speaking styles, and expressiveness. Think smoother delivery, richer texture, and a listening experience that users actually want.

Let’s break down what makes the improvement so dramatic.

Prosody Transfer

Traditional TTS splits prosody prediction and speech synthesis into separate steps handled by multiple disconnected models. That’s why the final audio often sounds choppy or unnatural.

Neural TTS systems, however, can understand and recreate prosody, including the stress, rhythm, and musicality of speech. They even transfer these features from one voice to another, making it easier to tailor content for specific audiences or brand styles.

A single neural network handles prosody prediction and speech synthesis together, leading to consistently natural-sounding results across different voices.

Speaker-Adapted Models

Legacy systems need heavy manual work to build a single voice, making customization slow, expensive, and outdated for today’s fast-moving content teams.

But with deep neural networks, neural TTS can capture the distinct characteristics of a speaker using limited data. That means you can recreate a recognizable voice without months of recording sessions.

Emotional Speaking Styles

Emotional speaking styles add expressiveness and believability to synthesized voices. Traditional TTS systems often struggle here and fail to express emotion unless trained with large amounts of data.

NTTS models, on the other hand, can be trained to quickly produce audio in different emotional tones, such as happy, sad, or angry. More emotion means better engagement, stronger customer experience, and audio that feels alive.

Key Benefits of Neural Text to Speech

Neural TTS systems offer several benefits, some of which are listed below.

Reduced Fatigue and Smoother Conversations

When people interact with AI phone menus or chat systems, listening to a dull robotic voice can get annoying. With neural voices in IVR and support workflows, users experience far less cognitive fatigue. Neural text to speech also enables a more fluent back-and-forth, because the system responds with more natural-sounding speech.

More Natural and Engaging Chatbot Interactions

With neural TTS, chatbots don’t just speak, they connect. The upgraded speech synthesis brings personality into automated experiences, offering natural-sounding voices that match tone and intent. As a result, users engage longer, trust the AI speaker more, and are less likely to rage-quit the chat.

Expressive Voices with Emotional Expressiveness

Neural text to speech systems can model expressive acoustic patterns that enhance emotional engagement and the overall user experience. These models can shift tone, pacing, and pitch to reflect how a human would sound when happy, sad, or even angry. This makes for better storytelling, more empathetic digital assistants, and a dramatically improved customer experience.

Real-World Applications of Neural Text to Speech

Here are some of the most common ways NTTS is being used right now.

- Customer support teams use this technology so voice bots sound more helpful and less robotic.

- Accessibility tools turn text into audio with neural text to speech, helping people who prefer listening over reading.

- Creators use it for quick, budget-friendly voiceovers in videos, podcasts, and online courses.

- Game studios use neural TTS to give virtual characters believable voices and emotions.

- Global companies rely on NTTS to easily create audio in multiple languages for different markets.

Why is Murf the Best Neural Text to Speech Software?

Several factors give Murf an edge over other neural TTS software. Here are a few key ones:

Language Options and Natural-Sounding Voices

Having a neural TTS tool with versatile language options is important to reach a wider audience. With multiple dialect options available, users can communicate with a broader range of people and increase the impact of their content. That's why you need Murf, which is packed with 200+ multilingual voices in 35+ languages and 10+ accents.

Murf Speech Gen2: The Next-Level Standard

Some neural TTS tools focus only on making speech sound real. Murf’s Speech Gen2 goes way further. It gives creators the power to shape voiceovers with precise emotional cues, speaking styles, and contextual accuracy.

Built on a proprietary neural network foundation, Gen2 handles complex accents, tones, pacing, and micro-expressions in human speech. It understands how to sound, not just “read the text.”

Creators get unmatched control through:

- Word-level emphasis

- Dynamic tone adjustments

- Accent and pitch variability

- Emotion-aware delivery

Moreover, Gen2 turns workflow chaos into creative clarity. The artificial intelligence takes direction just like a voice talent would, and the output always matches the original intent.

Say It My Way is Murf’s signature feature, which mirrors a creator’s own voice patterns. Upload a short sample, and Murf adapts pace, inflection, and structure to produce audio that sounds unmistakably like you, but professionally polished.

As neural text to speech technology continues advancing, Murf stays on the frontlines, bringing intelligent automation and creative freedom into everyday content production.

Voice Manipulation

Natural and realistic isn’t always enough. Sometimes the story demands flair. Murf makes it effortless to sculpt voices, which can mean adjusting the tone, pitch, and delivery to nail the vibe. This puts you in charge. Narration can sound smooth one moment, bold the next. A single voice can create entirely different speaking styles without re-recording or juggling multiple artists.

Voice Cloning

Murf lets users create a custom voice and deploy it everywhere. So, you get the same voice on every platform and in every supported language. For companies thinking long term, that’s storytelling power money can’t fake.

Voice Changer

Murf’s neural text to speech Voice Changer converts raw recordings into crisp, studio-grade audio. Adjust the voice gender, refine clarity, and upgrade texture without needing any expensive gear or technical skills.

With more than 200 AI-generated voices to choose from, experimenting becomes part of the creative fun. And yes, creators get unlimited free downloads.

API

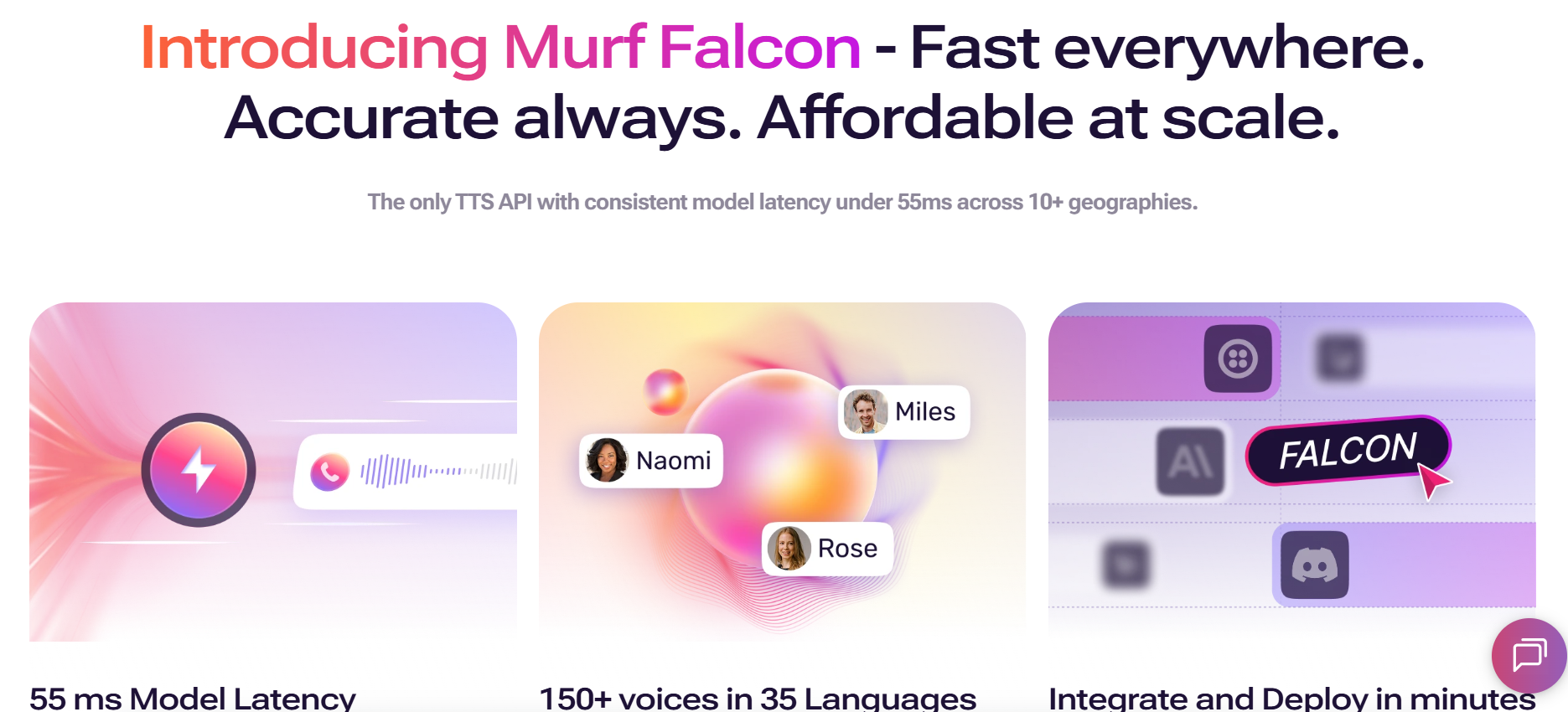

Murf's most recent offering, Falcon, marks a huge leap in text to speech (TTS) APIs. It promises:

- Consistent model latency under 55ms

- End-to-end latency around 130ms across 10+ regions

- Ultra-scalable performance up to 10,000 concurrent calls

- Truly multilingual

- As affordable as 1 cent per minute
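
To put these figures in perspective, here is a rough back-of-the-envelope sketch. It uses only the numbers quoted in the list above; the function names, the example workload of 50,000 minutes, and the 800 ms response budget for a voice agent are hypothetical, and actual pricing tiers or measured latency may differ.

```python
# Figures quoted in the list above
MODEL_LATENCY_MS = 55        # quoted upper bound for model latency
END_TO_END_MS = 130          # quoted approximate end-to-end latency
PRICE_CENTS_PER_MINUTE = 1   # lowest quoted price; real tiers may differ


def non_model_overhead_ms() -> int:
    """Rough time left for network and other overhead (an assumption:
    end-to-end latency minus the model latency bound)."""
    return END_TO_END_MS - MODEL_LATENCY_MS


def estimated_cost_usd(audio_minutes: int) -> float:
    """Estimated cost at the lowest quoted per-minute price."""
    return audio_minutes * PRICE_CENTS_PER_MINUTE / 100


def fits_budget(other_stages_ms: int, budget_ms: int) -> bool:
    """Check whether speech output fits a response-time budget once the
    other pipeline stages (for example speech recognition and response
    generation) are accounted for."""
    return other_stages_ms + END_TO_END_MS <= budget_ms


print(non_model_overhead_ms(), "ms outside the model")
print(estimated_cost_usd(50_000), "USD for 50,000 minutes (hypothetical workload)")
print(fits_budget(other_stages_ms=600, budget_ms=800))
```

The budget check is the useful part for voice agents: the speech step is only one stage of a conversational turn, so its latency has to be added to everything that runs before it.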

Falcon unlocks real-time, expressive speech synthesis for teams building next-gen customer experience products: voice agents, call automation, accessibility tools, and more.

With clear documentation, REST APIs, and plug-and-play SDKs, developers can integrate neural text to speech technology in minutes. Murf Falcon doesn’t just support innovation. It accelerates it.

Neural text to speech has unlocked a new era of voice technology, where machines don’t just speak, but sound alive. With smarter neural networks, expressive speaking styles, and personalization at scale, brands can deliver content that feels far more human.

Murf continues to lead this evolution with powerful creative control, advanced customization, and the kind of natural-sounding voices that make storytelling effortless. The future of speech synthesis isn’t robotic at all. It’s emotional, intelligent, and built for creators who want their audio to hit differently.

Frequently Asked Questions

How does neural text to speech differ from traditional text to speech?

Neural TTS uses deep learning and neural networks trained on large speech datasets to generate human-like voice output. In contrast, traditional TTS relies on rule-based or statistical methods that often produce flat, robotic speech. Neural TTS captures intonation, rhythm, and prosody, making the voice sound far more natural and expressive.

Can neural TTS handle multiple languages?

Absolutely! Platforms like Murf AI support dozens of languages. Murf lists over 35 languages, many regional accents, and even multi-native voices that can switch between languages smoothly while preserving natural pronunciation.

What is the difference between standard TTS and neural TTS?

Standard TTS converts text to speech using predefined models and rigid rules, so the output often lacks emotion, tone variation, and realistic pacing. Neural TTS, by contrast, learns directly from real human speech data. It can produce speech with realistic timing, emotional tone, and natural variation, which sounds more like how a person would actually speak.

What applications can benefit from neural text to speech?

Many. Some use cases include marketing videos, e-learning narration, audiobooks, customer-support chatbots and IVR systems, internal communication tools, and accessibility services. Anywhere you need quality voiceover or interactive voice, neural TTS adds realism and polish.

Can neural TTS be applied to generate voices for virtual characters or avatars?

Of course it can. Because neural TTS can produce expressive, emotional, and realistic voice output, it’s ideal for virtual characters, avatars, or animated content. The flexibility in tone, pitch, and pacing helps bring characters to life in a believable way.

How does neural TTS enhance the naturalness of speech?

Neural TTS models learn from real recorded speech, thereby capturing nuances like intonation, stress, pauses, and rhythm. That learning enables them to generate voice output that includes natural prosody, emotional cues, and fluent pacing, making synthetic speech sound almost indistinguishable from human speech.

What types of businesses or industries commonly use neural text to speech?

A wide range of businesses use it. This includes media and content creators (videos, podcasts, audiobooks), marketing and advertising agencies, e-learning and education platforms, customer support and telecom (IVR/chatbots), accessibility services, and even gaming or virtual-reality producers. Wherever voice adds value, neural TTS delivers.