How Do You Test AI Voice Agents?


Testing voice agents is different from testing regular software. With a voice agent, there's no single "correct" input and no predictable, definitive output, because both depend on user behavior. Traditional software testing is deterministic; testing AI voice agents is a probabilistic exercise. That makes it harder to evaluate whether the agent did what it was supposed to do.

3 Ways AI Voice Agent Evals Differ From Software Testing

1. Infinite input-output combinations: Since there is no single or definite input and output, there are potentially infinite use cases. People can ask for the time in any number of ways - “What time is it?” “Tell me the time now.” “Is it 5pm yet?” The answer will depend on the question.

Phrasing is only one source of variation. The audio itself can cause failures: it may sound distorted, callers may talk fast, or they may speak with long pauses.

2. Depends on user goals: The use cases for your voice agent will be specific to your requirements. Say you're testing an e-commerce voice agent. When Sarah asks "Where's my stuff?", your agent doesn't understand, because it was built to handle formal delivery-status requests, not casual language. Meanwhile, John gets instant help when he politely asks for "delivery status information".

Depending on the input, the same agent can deliver wildly different success rates. So rather than one correct answer, you’re measuring how conversations unfold.

3. Multi-turn, not one-shot: Since voice agents engage in back-and-forth dialogue, they have to maintain context across multiple LLM turns to complete their tasks. Each exchange may build on information provided earlier. A conversation with an e-commerce agent, for instance, could start with returns, move to product questions and delivery updates, and then come back to returns. Any break in context tracking ripples forward and can trigger a failure in a later turn.

Testing this is a challenge because conversations can take any number of unexpected directions, and a single conversation can have multiple points of failure.

The Building Blocks of Testing Voice Agents

Building a robust and scalable testing system can take as much effort as building the agent itself, but it is critical to a successful AI voice agent. The 5 building blocks in this section form your testing stack. Under each block, we discuss testing metrics and share examples.

Success metrics differ from one voice agent to the next, depending on the use case. Probabilistic evals score your agent against these metrics, which tells you how well it has performed and whether it is improving.


I. Fix an Objective

To define the test metrics for your voice agent, identify what your agent is supposed to do. Are users trying to book appointments? Track packages? Trigger payment reminders? Your metrics flow directly from these real-world goals.

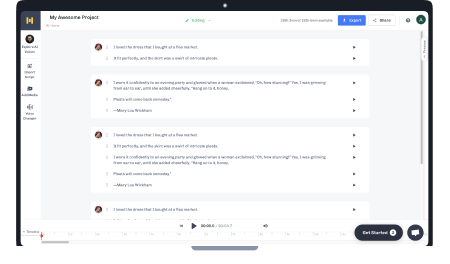

The primary value of a testing system lies in making the data accessible and visual. When investigating the root causes of errors, you should be able to zoom in and out of the data, like Google Maps. Start with the big picture: aggregate metrics like call resolution or overall satisfaction. Then drill down into the ones that are off. Zoom in again, check transcripts, and look at the actual conversations that went sideways.

Pro Tip: Your agent won't hit high accuracy levels for all use cases from day one. Start by testing the simple use cases, and hand off the others to humans until you're ready to tackle them.

II. Build Robust Test Cases

This refers to testing the voice agent on expected scenarios: scenarios in which the voice agent should complete specific tasks without errors.

Examples of testing use cases here include:

- For an appointment bot at a clinic: verifying if it can follow callers’ instructions and schedule medical appointments.

- For a reservations bot at a restaurant: testing how successfully it books tables for customers calling in.

- For an e-commerce bot: checking whether it retrieves and provides relevant, accurate information about orders and delivery updates.

Creating an initial set of customer scenarios is relatively straightforward. An LLM can generate customer personas, randomly select one, and simulate interactions with the agent. These artificially generated test cases can then be refined, based either on your understanding of real customers or on insights from actual customer calls.

During these tests, it's important to measure how well the agent follows instructions, the reliability of its tool calls, and the overall success rate of the agent in completing its intended tasks.

That said, it's important to recognize that these are still lab tests. Simulations can never fully replicate real-world scenarios. Customers might actually call from parking lots, mix different languages in the same sentence, or ask questions beyond what you and your team imagined. Over time, these new and unexpected scenarios that emerge from real-world usage can be added to the test suite. However, the most robust and reliable way to validate performance is by testing against real customer data from production.
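The bookkeeping around persona-based simulation can be sketched as below. This is a minimal sketch, assuming each simulated call has already been run and labeled: the `Persona` and `SimResult` types, the two example personas and the three boolean labels are hypothetical, and generating personas and driving the conversation with an LLM are not shown.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Persona:
    # Hypothetical persona fields; an LLM would normally generate these.
    name: str
    goal: str
    speaking_style: str


@dataclass
class SimResult:
    # Labels for one simulated call (how they are produced is not shown here).
    persona: Persona
    followed_instructions: bool
    tool_calls_ok: bool
    task_completed: bool


def sample_personas(personas: list[Persona], n: int, seed: int = 0) -> list[Persona]:
    """Randomly pick n personas (with replacement); the seed makes runs reproducible."""
    rng = random.Random(seed)
    return [rng.choice(personas) for _ in range(n)]


def summarize(results: list[SimResult]) -> dict[str, float]:
    """Turn per-call labels into instruction-following, tool-call and success rates."""
    n = len(results)
    if n == 0:
        raise ValueError("no simulation results")
    return {
        "instruction_following": sum(r.followed_instructions for r in results) / n,
        "tool_call_reliability": sum(r.tool_calls_ok for r in results) / n,
        "task_success_rate": sum(r.task_completed for r in results) / n,
    }


personas = [
    Persona("Sarah", "track a package", "casual, terse"),
    Persona("John", "track a package", "formal, polite"),
]
picked = sample_personas(personas, n=4, seed=42)
results = [
    SimResult(personas[0], followed_instructions=True, tool_calls_ok=True, task_completed=False),
    SimResult(personas[1], followed_instructions=True, tool_calls_ok=True, task_completed=True),
]
summary = summarize(results)
```

Seeding the random choice keeps a regression run comparable with the previous one: the same personas come up in the same order.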

III. Limited Production Testing

Limited production testing means evaluating a sample of live calls to see how your agent performs in the real world.

Depending on the scale and the complexity of the live calls, you can use one method, or a combination, for production testing: LLM judges, specialized models and/or human review.

Here’s a short note on each of the three methods:

- LLM Judges: LLM judges are LLMs that evaluate the outputs of other LLMs. They score a sample of your voice agent's conversations using your evaluation criteria.

How LLM judges help: They make evaluation at scale feasible. A 10% sample can run into tens of thousands of calls, and manually reviewing each of them could take hundreds of hours.
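The plumbing around an LLM judge can be sketched as follows. This is a minimal sketch, assuming a 10% sample, a hypothetical two-criterion rubric on a 0-10 scale and a judge that replies in JSON; `sample_calls`, `build_judge_prompt` and `parse_judge_reply` are illustrative names. Sending the prompt to a model is omitted because it depends on your provider.

```python
import json
import random

# Hypothetical rubric; replace with your own evaluation criteria.
RUBRIC = {
    "resolved": "Did the agent resolve the caller's request? (0-10)",
    "politeness": "Was the agent polite and on-brand? (0-10)",
}


def sample_calls(call_ids: list[str], rate: float, seed: int = 0) -> list[str]:
    """Pick a reproducible random sample of calls to judge (rate=0.1 means 10%)."""
    k = max(1, round(len(call_ids) * rate))
    return random.Random(seed).sample(call_ids, k)


def build_judge_prompt(transcript: str, rubric: dict[str, str]) -> str:
    """Ask the judge LLM to score one transcript and answer in JSON only."""
    criteria = "\n".join(f"- {key}: {question}" for key, question in rubric.items())
    return (
        "You are evaluating a call between a customer and a voice agent.\n"
        f"Score each criterion:\n{criteria}\n"
        'Reply with JSON only, e.g. {"resolved": 7, "politeness": 9}.\n\n'
        f"Transcript:\n{transcript}"
    )


def parse_judge_reply(reply: str, rubric: dict[str, str]) -> dict[str, int]:
    """Validate the judge's JSON reply; reject missing or out-of-range scores."""
    scores = json.loads(reply)
    for key in rubric:
        value = scores.get(key)
        if isinstance(value, bool) or not isinstance(value, int) or not 0 <= value <= 10:
            raise ValueError(f"bad or missing score for {key!r}: {value!r}")
    return {key: scores[key] for key in rubric}
```

Validating the reply matters because a judge that occasionally returns malformed or out-of-range scores would silently skew your aggregate metrics.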

- Specialized Models: Every model update of the voice agent - from ASR retraining to LLM fine-tuning - can trigger small changes that accumulate into behavioral differences in the system.

How specialized models help: Specialized evaluation models make it possible to objectively measure and benchmark different parts of a voice agent stack. For example, to assess the naturalness of voices, there are commercially available models that predict a MOS (Mean Opinion Score), an estimate of how natural synthesized speech sounds. Similarly, speech recognition accuracy can be estimated by comparing transcripts from a fast production model against those from slower, more specialized or higher-accuracy speech recognition models.

Gathering model-level accuracy metrics enables voice agent builders to understand which parts of the system are performing well and where targeted improvements and investments are needed.
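One way to act on these model-level metrics is to keep a scorecard per model version and flag regressions before a rollout. This is a minimal sketch, assuming the metrics are already computed (WER from an ASR eval, MOS from a MOS prediction model, and so on); the metric names, the 1% relative tolerance and the numbers below are illustrative, not benchmarks.

```python
def find_regressions(
    baseline: dict[str, float],
    candidate: dict[str, float],
    tolerance: float = 0.01,
    lower_is_better: frozenset[str] = frozenset({"wer", "latency_ms"}),
) -> dict[str, tuple[float, float]]:
    """Return metrics where the candidate is worse than the baseline by more than tolerance.

    The tolerance is relative (0.01 = 1%) to absorb run-to-run noise."""
    regressions = {}
    for metric, old in baseline.items():
        new = candidate.get(metric)
        if new is None:
            continue  # metric not measured for the candidate version
        if metric in lower_is_better:
            worse = new > old * (1 + tolerance)
        else:
            worse = new < old * (1 - tolerance)
        if worse:
            regressions[metric] = (old, new)
    return regressions


# Made-up numbers for two versions of the same agent.
baseline = {"wer": 0.08, "mos": 4.1, "task_success_rate": 0.82, "latency_ms": 650.0}
candidate = {"wer": 0.07, "mos": 4.1, "task_success_rate": 0.78, "latency_ms": 720.0}
regressions = find_regressions(baseline, candidate)
```

Here the new version improves WER but regresses on task success and latency, which is exactly the kind of accumulated behavioral difference a single headline metric would hide.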

- Human Reviews: Whether your agent handles 10 calls a day or 10,000, human reviews are essential.

How human reviews help: They catch edge cases and evaluate calls in domains that require deep expertise.

Each stage of a voice agent requires its own set of tests and evaluation metrics.


In FAQ 1, we break down the key evaluation metrics for each stage, with real-world examples.

IV. Strengthen with Human Review

In addition to helping build a scalable and robust agent, human review is invaluable for handling edge cases and complex scenarios. In particular, subject matter experts (SMEs) can help with technical topics or subjects that require deep expertise.

Similar to quality control in a factory, humans can re-score a sample of the calls the judge LLM has already scored, say 20 out of every 100. If the human scores deviate from the judge's scores, go back and correct the judge.

Maybe your judge LLM gave a perfect score to a voice agent that technically answered every question correctly: 10/10. When a human listens to the call, they notice that the agent interrupted the customer three times, then started speaking in Spanish when the customer said "gracias" sarcastically. Human score: 6/10. The judge has to be recalibrated.

As you grow from 100 calls a day to 10,000, human reviews cannot grow 100x along with them. Use human input as a precision tool instead.
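The spot check can be as simple as comparing the two scores on the calls that humans re-scored. This is a minimal sketch, assuming judge and humans use the same 0-10 scale; the `ReviewedCall` type and the 2-point disagreement threshold are hypothetical choices, not a standard.

```python
from dataclasses import dataclass


@dataclass
class ReviewedCall:
    call_id: str
    judge_score: float  # score from the LLM judge, 0-10
    human_score: float  # score from a human reviewer for the same call, 0-10


def calibration_report(calls: list[ReviewedCall], max_gap: float = 2.0) -> dict:
    """Compare judge and human scores on the human-reviewed sample."""
    if not calls:
        raise ValueError("no human-reviewed calls")
    gaps = [abs(c.judge_score - c.human_score) for c in calls]
    flagged = [c.call_id for c, gap in zip(calls, gaps) if gap > max_gap]
    return {
        "mean_abs_gap": sum(gaps) / len(gaps),
        "flagged": flagged,  # calls whose disagreement should drive judge fixes
        "disagreement_rate": len(flagged) / len(calls),
    }


sample = [
    ReviewedCall("call-017", judge_score=10, human_score=6),  # the interrupting agent above
    ReviewedCall("call-042", judge_score=8, human_score=8),
]
report = calibration_report(sample)
```

The flagged calls double as test cases: once the judge's rubric is corrected, re-running it on them shows whether the gap has closed.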

V. Iterate & Optimize

Having multiple non-deterministic systems in play can lead to different outputs and also produce errors no human would make. So you're never really done testing.

Instead of looking for perfect scores, track patterns. Refine and optimize the testing blocks iteratively. When observing the agent in production, rather than "did it work or not?" ask "how often does it work, and why did it fail?" Mark the scenarios that failed and the errors encountered. Analyze why each one failed, probing until you find the root cause.

To scale testing as the voice agent scales, you need to continuously evaluate and update the scores.

Diagnosing Errors in 4 Steps

Here’s how you can diagnose the root cause.

- Start broad. Begin your analysis with an aggregate call success metric, such as customer satisfaction (CSAT), resolution rate or call handling time. Pull out the calls that scored worse than your internal benchmark.

- Check stage-wise scores for the underperforming calls to pinpoint the stage with the problem. Where did the error(s) occur, exactly? Was it a hearing problem (ASR), voice activity detection issue (VAD), understanding problem (Intelligence), or response problem (TTS)?

- Fix what you found. If you’ve identified when and where the error(s) occurred, fine-tune the specific component to solve the problem.

- Use human review when needed. If the scores don’t reveal any insight, review the calls manually to identify the point(s) of failure.
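Steps 1, 2 and 4 can be sketched as a small triage function. This is a minimal sketch, assuming every call already has an aggregate score and per-stage scores on a 0-1 scale where higher is better (for a metric like handling time you would invert it first); `CallScores`, `diagnose` and the 0.8 stage threshold are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CallScores:
    call_id: str
    overall: float            # aggregate success metric for the call, 0-1
    stages: dict[str, float]  # per-stage scores, e.g. {"asr": 0.9, "vad": 0.8}


def diagnose(
    calls: list[CallScores], benchmark: float, stage_threshold: float = 0.8
) -> dict[str, Optional[str]]:
    """Map each underperforming call to its weakest stage.

    None means no stage falls below stage_threshold: the scores don't explain
    the failure, so the call should go to human review (step 4)."""
    suspects: dict[str, Optional[str]] = {}
    for call in calls:
        if call.overall >= benchmark:
            continue  # step 1: only keep calls below the benchmark
        if not call.stages:
            suspects[call.call_id] = None
            continue
        weakest = min(call.stages, key=call.stages.get)  # step 2: lowest-scoring stage
        suspects[call.call_id] = weakest if call.stages[weakest] < stage_threshold else None
    return suspects


calls = [
    CallScores("call-1", 0.92, {"asr": 0.95, "vad": 0.90, "intelligence": 0.93, "tts": 0.97}),
    CallScores("call-2", 0.40, {"asr": 0.55, "vad": 0.90, "intelligence": 0.70, "tts": 0.95}),
    CallScores("call-3", 0.50, {"asr": 0.90, "vad": 0.88, "intelligence": 0.91, "tts": 0.93}),
]
suspects = diagnose(calls, benchmark=0.7)
```

In this made-up data, call-2 points at ASR, while call-3 failed without any stage standing out, so it goes to a human. Step 3, fixing the component, stays a judgment call.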

Pro Tip: How do you know if you’re testing enough?

One signal is that your testing no longer reveals new problems or use cases. And because voice agents improve incrementally, you also need to test enough calls to detect small improvements in how conversations go.
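To put a rough number on "enough", a common starting point is the sample size needed to estimate a success rate within a chosen margin of error. This sketch uses the normal approximation for a proportion, n = z^2 * p * (1 - p) / E^2, with z = 1.96 for about 95% confidence; the function name is ours. Detecting a difference between two agent versions calls for a two-proportion power calculation and generally more calls, which is not shown.

```python
import math


def calls_needed(expected_rate: float, margin: float, z: float = 1.96) -> int:
    """Calls to sample so a success-rate estimate lands within +/- margin.

    Normal approximation for a proportion; z=1.96 is roughly 95% confidence.
    Use expected_rate=0.5 if you have no prior estimate (the worst case)."""
    if not 0 < margin < 1:
        raise ValueError("margin must be between 0 and 1")
    if not 0 <= expected_rate <= 1:
        raise ValueError("expected_rate must be between 0 and 1")
    return math.ceil(z**2 * expected_rate * (1 - expected_rate) / margin**2)
```

With no prior estimate, about 385 calls give a margin of plus or minus 5 percentage points; halving the margin roughly quadruples the number of calls, which is why spotting small improvements gets expensive.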

AI voice agents can turn conversations into outcomes at scale, but only if the testing systems that work alongside them are continuously updated, robust and consistent.

The Roadmap: Refer to the 5 building blocks and put your testing stack together piece by piece. Score entire conversations using probabilistic evals. Evaluate performance at each stage: ASR (speech to text), VAD, language understanding and interpretation, and TTS. Strengthen your LLM judges with human assessments.

The Regular Checks: Test each component along the way. Each part needs to work well for the whole system to succeed. Since the metrics are use-case dependent and non-deterministic, they will be noisy. You'll need to sample all types of production calls and listen to specific conversations to get to the root cause of failures.

The End Game: The goal is to scale evaluations without increasing human intervention for review, while ensuring your voice agent handles all the chaos of human conversations.

Frequently Asked Questions

FAQ 1: What metrics are evaluated across each stage of the voice agent stack?

The metrics that we have shown for each stage below are indicative and not exhaustive - the ones you pick will depend on your use case, and your organization's capabilities and priorities.

Stage 1: Automatic Speech Recognition (ASR) stage

In this stage, the speech input is transcribed into text.

Evals are usually done by measuring the speech recognition model's output against golden utterances: recordings with verified reference transcripts.

Key evaluation metrics for Stage 1:

- Word Error Rate (WER): Measures speech recognition accuracy as the share of words transcribed incorrectly: (substitutions + deletions + insertions) divided by the number of words in the reference transcript. If WER is high, dig deeper to find the reasons behind the transcription errors. The audio may have been hard to transcribe (low volume, background noise, multilingual audio, accents), or the transcription itself may need to improve.

- Latency: Time taken to generate a transcript following the speech input.

Example:

Customer (with a distinct accent, dog barking in background): "Yeah, I need a cah rental for Satahday"

What the ASR heard: "Yeah, I need a car rental for Saturday" WER is 0%. Great.

Or maybe it heard: "Yeah, I need a cart rental for salad day" WER is 37.5% (3 errors against 8 reference words: "car" heard as "cart", "Saturday" heard as "salad", plus the inserted word "day").
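WER is a word-level edit distance, so it can be computed with the standard Levenshtein dynamic program. This is a minimal sketch: the normalization (lowercasing and stripping punctuation) is our assumption, and dedicated evaluation libraries apply more careful text normalization (numbers, contractions, filler words).

```python
import re


def normalize(text: str) -> list[str]:
    """Lowercase and drop punctuation so "Yeah," and "yeah" count as the same word."""
    return re.sub(r"[^\w\s']", "", text.lower()).split()


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / words in the reference."""
    ref, hyp = normalize(reference), normalize(hypothesis)
    if not ref:
        raise ValueError("reference transcript is empty")
    # prev[j] = edit distance between the reference words seen so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # deletion: reference word missing from hypothesis
                curr[j - 1] + 1,         # insertion: extra word in hypothesis
                prev[j - 1] + (r != h),  # substitution (free if the words match)
            )
        prev = curr
    return prev[-1] / len(ref)


reference = "Yeah, I need a car rental for Saturday"
print(word_error_rate(reference, "Yeah, I need a car rental for Saturday"))   # 0.0
print(word_error_rate(reference, "Yeah, I need a cart rental for salad day"))  # 0.375
```

Note that insertions count against the reference length, which is why WER can exceed 100% on a badly garbled transcript.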

Stage 2: Turn detection or Voice Activity Detection (VAD) stage

Turn detection means identifying when the user has finished talking and the LLM is expected to respond.

Key evaluation metrics for Stage 2:

- Turn Taking Accuracy: How often the model correctly tells a finished turn apart from a mid-sentence pause.

- Clipping Rate: Percentage of utterances where the beginning or end is cut off by the voice agent.

Example:

Customer: "I need to change my flight..." [pauses to check calendar]

Agent (jumping in too early): "Sure! What's your confirmation number?"

Customer: "...to next Tuesday instead of Monday."

Agent: "I'm sorry, could you repeat your confirmation number?"

Not the best conversation.
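Turn-taking metrics need labeled pauses. This is a minimal sketch, assuming a human (or a slower reference model) has marked each pause in the caller's speech as a real end of turn or not; the `PauseLabel` type is hypothetical, and the early cut-in rate is an illustrative companion measure for the failure shown above rather than the clipping rate itself.

```python
from dataclasses import dataclass


@dataclass
class PauseLabel:
    # One pause in the caller's speech.
    user_was_done: bool    # ground truth: had the caller finished their turn?
    agent_took_turn: bool  # did the turn detector decide the turn was over?


def turn_taking_metrics(pauses: list[PauseLabel]) -> dict[str, float]:
    if not pauses:
        raise ValueError("no labeled pauses")
    correct = sum(p.user_was_done == p.agent_took_turn for p in pauses)
    mid_turn = [p for p in pauses if not p.user_was_done]
    cut_ins = sum(p.agent_took_turn for p in mid_turn)
    return {
        "turn_taking_accuracy": correct / len(pauses),
        # share of mid-sentence pauses where the agent jumped in too early
        "early_cut_in_rate": cut_ins / len(mid_turn) if mid_turn else 0.0,
    }


pauses = [
    PauseLabel(user_was_done=False, agent_took_turn=True),  # "...change my flight..." [checks calendar]
    PauseLabel(user_was_done=True, agent_took_turn=True),
    PauseLabel(user_was_done=True, agent_took_turn=True),
    PauseLabel(user_was_done=False, agent_took_turn=False),
]
metrics = turn_taking_metrics(pauses)
```

Tracking early cut-ins separately from overall accuracy helps, because an agent that jumps in too early and one that waits too long can have the same accuracy while failing in very different ways.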

Stage 3: Intelligence stage

The transcribed text is put into context through a prompt, and the LLM interprets and performs the task.

Key evaluation metrics for Stage 3:

- Tool (function) calling accuracy: How effectively the LLM chooses and uses tools, functions or APIs for the given task. Depending on the task, the LLM might also have to call multiple functions in a specific order.

Example:

Customer says, "Cancel my subscription but keep my account active for archive access." Did the agent call pause_subscription(), which is correct, or call delete_account(), which is disastrous?

- Task Completion Rate: Percentage of user tasks successfully completed without human intervention.

Example:

A password reset request is handled end to end without a handoff to a human agent.

- Latency: Response time, measured from the end of the user's input to the start of the agent's audio. It should stay low enough for the conversation to feel natural.
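Tool calling accuracy and task completion can be scored from logged test runs. This is a minimal sketch, assuming each test case records the expected and actual tool names in call order; `ToolTestCase` and `send_password_reset` are hypothetical, while `pause_subscription` and `delete_account` come from the example above. It uses a strict exact-order match, which you may want to relax for tools whose order doesn't matter.

```python
from dataclasses import dataclass


@dataclass
class ToolTestCase:
    utterance: str
    expected_tools: list[str]  # tool names in the order they should be called
    actual_tools: list[str]    # tool names the agent actually called, in order
    completed_without_handoff: bool


def tool_call_accuracy(cases: list[ToolTestCase]) -> float:
    """Share of cases where the agent called exactly the expected tools, in order."""
    return sum(c.actual_tools == c.expected_tools for c in cases) / len(cases)


def task_completion_rate(cases: list[ToolTestCase]) -> float:
    """Share of cases finished without a handoff to a human."""
    return sum(c.completed_without_handoff for c in cases) / len(cases)


cases = [
    ToolTestCase(
        "Cancel my subscription but keep my account active for archive access.",
        expected_tools=["pause_subscription"],
        actual_tools=["delete_account"],  # the disastrous case
        completed_without_handoff=True,
    ),
    ToolTestCase(
        "I forgot my password.",
        expected_tools=["send_password_reset"],
        actual_tools=["send_password_reset"],
        completed_without_handoff=True,
    ),
]
accuracy = tool_call_accuracy(cases)
completion = task_completion_rate(cases)
```

The first case shows why the two metrics belong together: the call "completed" without a handoff, yet the agent called the wrong tool, so completion rate alone would report a perfect score.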

You can also track responsibility metrics like safety, bias, hallucinations and compliance.

Stage 4: Text to Speech stage

The final step, where the text response is converted to natural sounding speech.

Key evaluation metrics for Stage 4:

- Naturalness: How closely the speech mimics natural human speech and how easily listeners understand it. You can track this using metrics like MOS (Mean Opinion Score).

- Pronunciation accuracy: How closely the audio matches the correct and intended pronunciation of the input text, especially names and technical terms. The metrics range from general ones like Word Pronunciation Accuracy (WPA) to domain-specific ones such as Acronym & Abbreviation Handling.

- Latency: The response time, often expressed as Time-to-First-Byte.
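Latency shows up at every stage, and averages hide the slow responses callers actually notice, so it helps to track the median alongside a tail percentile such as p95. This is a minimal sketch using the nearest-rank percentile, plus MOS computed as the plain mean of listener ratings on the usual 1-5 scale; the function names and the sample numbers are illustrative.

```python
import math


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% of samples at or below it."""
    if not values or not 0 < pct <= 100:
        raise ValueError("need samples and 0 < pct <= 100")
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))  # 1-based rank
    return ordered[rank - 1]


def mean_opinion_score(ratings: list[int]) -> float:
    """MOS: the mean of listener ratings from 1 (bad) to 5 (excellent)."""
    if not ratings or any(not 1 <= r <= 5 for r in ratings):
        raise ValueError("need ratings between 1 and 5")
    return sum(ratings) / len(ratings)


ttfb_ms = [180, 210, 190, 450, 205, 220, 195, 900, 200, 215]  # illustrative TTFB samples
p50, p95 = percentile(ttfb_ms, 50), percentile(ttfb_ms, 95)
mos = mean_opinion_score([4, 5, 4, 3])
```

In this made-up sample the median TTFB looks healthy at 205 ms, while p95 reveals a 900 ms response that a caller would hear as an awkward silence.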

FAQ 2: When using LLM-as-a-Judge, do you use different LLMs for the task and the judge, or can they both be the same?

They can be the same model; the primary difference between the LLM executing the task and the judge LLM is the prompt. However, every LLM has biases, and a judge built on the same model may share the task model's blind spots. Using a different LLM for judging can help offset these biases. Some LLMs may also simply be better judges than others; you won't know whether this applies to your use case until you try. Either way, you will need humans to assess the judge LLMs, because they have blind spots too.

FAQ 3: Should you buy or build?

Before you start coding your own testing framework, check out tools like Coval and Weights & Biases. These are early days for AI voice agent evals, and such tools are a good starting point.