Best Open Source Text-to-Speech Software to Look Out For in 2026

Key Takeaways

- Open-source TTS engines offer a cost-effective way to convert text into speech, making them ideal for teams with budget constraints or basic audio needs.

- Four broad categories define today’s open-source TTS market: general-purpose frameworks, neural network engines, lightweight models, and cloud-connected tools, each suited to specific use cases and technical skill levels.

- Despite their strengths, open-source TTS solutions face limitations such as lower voice naturalness, complex setup, hardware dependency, limited multilingual depth, and slower feature evolution.

- For teams prioritizing quality and speed, Murf AI emerges as a strong commercial alternative, offering over 200 voices, 30+ languages, advanced editing, voice cloning, and near real-time API performance.

- Businesses that require professional-grade, scalable, and multilingual audio production will see a stronger ROI with Murf compared to managing open-source pipelines in-house.

Open source text-to-speech software consists of free, often lightweight tools that convert written text into audio. There are several advantages to using an open source text-to-speech engine:

- Cost-effective: An open source engine that converts written text into speech is often free, even for unlimited usage, optimizing expenses for creative workflows.

- Full control and customization: These models can be fine-tuned without any restriction, allowing users to get personalized output for any application.

- Privacy: Open source text-to-speech platforms can run locally, so text and audio never have to leave your own infrastructure.

- Flexibility in deployment: These solutions can be run from the command line or embedded in custom voice assistants written in various programming languages.

At the same time, the sheer number of open-source TTS models that can convert text into speech makes it difficult for teams to select the best one for their needs.

In this article, let's look at the leading open source engines that specialize in speech synthesis to help businesses make an informed decision when choosing one.

Best Open Source Text-to-Speech Engines in 2026

To simplify the selection process, we've grouped the tools into four categories based on their type.

1. Open Source Tools: General-purpose TTS Frameworks

1. Mozilla TTS

Mozilla TTS is an open-source text-to-speech synthesis system developed by the Mozilla Foundation that supports numerous languages to cater to the diverse linguistic needs of users and developers worldwide.

The system is built on deep learning techniques, leveraging neural network models to generate natural-sounding speech.

It allows users to train and fine-tune their models based on specific datasets and requirements. Mozilla TTS benefits from contributions and feedback from a community of developers and researchers.

The project reflects Mozilla’s commitment to promoting open-source, privacy-aware technologies in the realm of speech recognition and synthesis.

2. Coqui TTS

Coqui is an entirely free text-to-speech library that ships vocoders and pre-trained TTS models as part of its package. The foundation model XTTS, developed by Coqui’s team, generates voices in 13 languages, while XTTSv2 supports 16 languages and offers enhanced performance.

The speech synthesis platform excels in fast and efficient model training backed by detailed training logs, support for multi-speaker TTS, and a feature-complete Trainer API through an easy-to-use interface.

Coqui has emerged as a solution for businesses seeking a natural-sounding human speech engine for diverse applications like voice assistants, automated customer service, and speech-enabled digital devices.
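As a rough sketch of what working with the library can look like, the snippet below assumes Coqui's `TTS` Python package is installed and that a short reference recording of the target voice is available. The helper names and file names are hypothetical, and the model identifier should be checked against the installed version.

```python
from pathlib import Path

# Identifier Coqui publishes for XTTS-v2; verify it against your installed version.
XTTS_V2 = "tts_models/multilingual/multi-dataset/xtts_v2"


def build_xtts_request(text: str, speaker_wav: str, language: str, out_path: str) -> dict:
    """Collect the keyword arguments for TTS.tts_to_file (hypothetical helper)."""
    if not text.strip():
        raise ValueError("text must not be empty")
    return {
        "text": text,
        "speaker_wav": speaker_wav,  # short reference clip of the voice to imitate
        "language": language,        # language code such as "en"
        "file_path": str(Path(out_path)),
    }


def synthesize_with_xtts(request: dict, model_name: str = XTTS_V2) -> str:
    """Run synthesis and return the output path.

    Coqui is imported lazily so this module still loads without it.
    The first call downloads the model weights, which can take a while.
    """
    from TTS.api import TTS  # requires `pip install TTS`

    tts = TTS(model_name)
    tts.tts_to_file(**request)
    return request["file_path"]
```

A call might look like `synthesize_with_xtts(build_xtts_request("Hello there.", "reference.wav", "en", "hello.wav"))`, where `reference.wav` is a placeholder for your own recording.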

3. Festival

Festival is an open-source TTS framework known for its flexibility and support for customizable voices. It offers multilingual synthesis and allows users to modify pronunciation, intonation, and voice parameters. Its modular architecture makes it a solid choice for research and academic projects.

Best suited for educational tools, research applications, and embedded systems requiring detailed voice customization, Festival is ideal for generating synthetic speech in different languages and accents.

Real-life uses include language-learning apps, speech research (such as sentiment analysis), and custom voice assistants that need tailored voice outputs.

4. eSpeak NG

eSpeak NG is a free, compact, open-source speech synthesis platform that converts text into speech using a formant synthesis method. It supports over 100 languages and accents through optional data packs. The platform offers multiple voice files while allowing alterations within defined limits.

It can write its voice output to WAV files and offers partial support for SSML and HTML input. eSpeak NG can also translate text into phoneme codes, making it adaptable as a front end for other speech synthesis engines.

Furthermore, the engine is written in C and ships with a command-line program, making it a great development tool for creating and refining phoneme data.
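To make the command-line workflow concrete, here is a minimal sketch that builds `espeak-ng` invocations from Python. It assumes the `espeak-ng` binary is on the PATH; the helper names are hypothetical, and only the `-v`, `-w`, `-q`, and `-x` options are used.

```python
import shutil
import subprocess
from typing import List, Optional


def espeak_command(text: str, voice: str = "en",
                   wav_path: Optional[str] = None,
                   phonemes: bool = False) -> List[str]:
    """Build an espeak-ng argument list (hypothetical helper)."""
    cmd = ["espeak-ng", "-v", voice]
    if phonemes:
        cmd += ["-q", "-x"]      # -q: produce no audio, -x: print phoneme mnemonics
    elif wav_path:
        cmd += ["-w", wav_path]  # -w: write a WAV file instead of playing audio
    cmd.append(text)
    return cmd


def run_espeak(cmd: List[str]) -> Optional[str]:
    """Run the command if espeak-ng is installed; return its stdout, else None."""
    if shutil.which(cmd[0]) is None:
        return None
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return result.stdout.strip()
```

For example, `run_espeak(espeak_command("hello world", phonemes=True))` would return the phoneme string on a machine with eSpeak NG installed, and `None` otherwise.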

5. MaryTTS

MaryTTS stands out as an open-source, multilingual speech synthesis system developed in Java. It allows users to access, modify, and distribute the source code under the LGPLv3 license. The tool supports multiple languages and dialects while offering customizable voices and pronunciation rules.

Due to its Java-based design, MaryTTS can operate on various platforms like Windows, Linux, and macOS. It is extensible, as users can incorporate new voices, languages, and functionalities through plugins and modules.

The open-source model attracts developers seeking customization, researchers exploring text-to-speech algorithms, and individuals in search of a free, open-source speech synthesis solution for non-commercial purposes.

6. NVDA: Optimal Spoken Words Conversion

NVDA (NonVisual Desktop Access) is a free, open-source screen reader for Windows designed to assist visually impaired users. It supports many languages, offers customizable voices, and works seamlessly with TTS engines like eSpeak NG and Microsoft Speech API.

Ideal for accessibility solutions, NVDA enables users to navigate digital content independently. It’s commonly used for tasks like web browsing, reading documents, and navigating software, making it invaluable for students, working professionals, and organizations committed to digital accessibility.

2. Open Source Tools: Neural-Network-Based TTS

- Tacotron 2 is a synthesizer that generates mel-spectrograms from text, often used with a vocoder to produce speech.

- Glow TTS is a flow-based model that uses normalizing flows to generate mel-spectrograms from text quickly and in parallel; like Tacotron 2, it is paired with a vocoder to produce waveforms.

- WaveNet is a vocoder that converts spectrograms into high-quality, natural-sounding waveforms.

- VITS is an end-to-end model that directly generates speech from text by combining synthesis and vocoding processes.

- ESPNet is a toolkit for developing end-to-end speech processing models, including text-to-speech systems.
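The division of labor between a spectrogram model and a vocoder described in this list can be sketched as a two-stage interface. The classes below are toy stand-ins, not real models: they only emit zeros with plausible shapes, and the 80 mel bins and hop length of 256 are assumed typical values rather than settings taken from any specific project.

```python
from typing import List, Protocol

Mel = List[List[float]]  # frames x mel bins


class AcousticModel(Protocol):
    def text_to_mel(self, text: str) -> Mel: ...


class Vocoder(Protocol):
    def mel_to_wave(self, mel: Mel) -> List[float]: ...


class ToyAcousticModel:
    """Stand-in for a Tacotron 2 or Glow TTS style model: one empty frame per character."""

    def __init__(self, n_mels: int = 80) -> None:
        self.n_mels = n_mels

    def text_to_mel(self, text: str) -> Mel:
        return [[0.0] * self.n_mels for _ in text]


class ToyVocoder:
    """Stand-in for a WaveNet style vocoder: emits hop_length silent samples per frame."""

    def __init__(self, hop_length: int = 256) -> None:
        self.hop_length = hop_length

    def mel_to_wave(self, mel: Mel) -> List[float]:
        return [0.0] * (len(mel) * self.hop_length)


def two_stage_synthesize(text: str, acoustic: AcousticModel, vocoder: Vocoder) -> List[float]:
    """Two-stage synthesis: text -> mel-spectrogram -> waveform samples."""
    return vocoder.mel_to_wave(acoustic.text_to_mel(text))
```

An end-to-end model such as VITS would collapse both stages into a single call, which is why it needs no separate vocoder.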

3. Open Source Tools: Lightweight TTS

- Pyttsx3 is an offline synthesizer that uses text-to-speech engines to generate speech within Python applications.

- RHVoice is a multilingual synthesizer designed for lightweight, high-quality speech synthesis across various platforms.

- Piper is a fast, efficient synthesizer that offers lightweight, neural network-based text-to-speech synthesis optimized for edge devices.

- MBROLA is a diphone-based synthesizer that produces speech by concatenating units from pre-recorded diphone databases, known for its simplicity and low resource usage.
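For the lightweight end of the spectrum, a minimal sketch of driving pyttsx3 from a Python application might look like the following. The `speak` helper is hypothetical; it takes the engine as an argument so the function can be exercised without audio hardware, and it assumes the engine object comes from `pyttsx3.init()`.

```python
from typing import Optional


def speak(engine, text: str, rate: int = 150, out_path: Optional[str] = None) -> None:
    """Speak text aloud, or save it to a file when out_path is given.

    `engine` is expected to be the object returned by pyttsx3.init().
    """
    engine.setProperty("rate", rate)  # speaking rate in words per minute
    if out_path:
        engine.save_to_file(text, out_path)  # queue a file render instead of playback
    else:
        engine.say(text)                     # queue the utterance for playback
    engine.runAndWait()                      # block until the queued commands finish
```

With pyttsx3 installed, usage would be along the lines of `import pyttsx3` followed by `speak(pyttsx3.init(), "Hello from an offline voice.")`. Which voices are available depends on the speech engine present on the operating system.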

4. Open Source Tools: Cloud-Connected TTS Options

- Mycroft Mimic: A flexible, open-source TTS engine that supports offline use and offers voice customization. It works well with Mycroft AI voice assistants and supports various default models like Mimic 1 (based on Festival) and Mimic 2 (based on Tacotron). However, it requires technical knowledge to set up and optimize.

- Voxygen TTS: A Google cloud-based service known for producing high-quality, natural voices in multiple languages. It is often used in commercial and defense applications. While it provides high performance, it comes with licensing fees and offers limited flexibility for open-source projects.

- YakiToMe: A simple, web-based TTS tool that can convert text, PDFs, and Word documents into speech. It offers features like email delivery of audio files and a choice of voices, including some from Microsoft and AT&T. However, its interface is dated, and the platform is not suitable for advanced or large-scale use.

Limitations of Open Source Text-to-Speech Solutions

1. Poorer Voice Quality Compared to Proprietary Systems

Industry-leading TTS platforms, like Murf, beat their open-source TTS counterparts in terms of naturalness, prosody, emotional range, pacing, and consistency. As a result, the audio generated through the commercial platforms is much more engaging for target audiences across niches.

2. Requires Technical Expertise for Setup

Professionals working with free and open source models will need to set up environments (Python, CUDA, dependencies), allocate GPUs, understand model architectures, and troubleshoot any errors along the way.

On top of that, if they wish to fine-tune the text-to-speech open-source model, they will need to dive into the hyperparameters of the tool. While it is possible to do it all through community support, the adoption rate is largely dependent on one's technical expertise.

3. Performance Depends on Local Hardware

Any open-source TTS engine will run on your local device. Hence, its performance will be highly dependent on the CPU and GPU of your computer. This can be challenging for business teams who want to synthesize speech from text quickly.

Additionally, if the chosen tool is an AI model, it can slow down other applications due to higher computing needs, affecting overall productivity.
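One way to quantify this hardware dependence is the real-time factor (RTF): synthesis time divided by the duration of the audio produced, where values below 1 mean the engine is faster than real time. The sketch below uses only the standard library and assumes a hypothetical `synthesize(text, out_path)` callable that writes a WAV file with whichever engine you are evaluating.

```python
import time
import wave
from typing import Callable


def wav_duration_seconds(path: str) -> float:
    """Return the playback length of a WAV file in seconds."""
    with wave.open(path, "rb") as wav:
        return wav.getnframes() / wav.getframerate()


def real_time_factor(synthesize: Callable[[str, str], None],
                     text: str, out_path: str) -> float:
    """Time one synthesis call and divide by the duration of the audio it wrote."""
    start = time.perf_counter()
    synthesize(text, out_path)  # hypothetical callable wrapping the engine under test
    elapsed = time.perf_counter() - start
    return elapsed / wav_duration_seconds(out_path)
```

Running the same text through this function on a laptop CPU and on a GPU workstation gives a like-for-like comparison of how much the local machine limits throughput.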

4. Limited Multilingual Support

Global communication teams need a TTS engine that can produce engaging voices in several languages accurately. Text-to-voice open-source platforms usually offer limited languages and even fewer voice and accent customization options.

Commercial TTS solutions, on the other hand, enable voice generation in dozens of languages in hundreds of styles and accents.

5. Fewer Upgrades Down the Line

Open-source text-to-speech models lack a professional development team that is responsible for their growth over the years. Of course, many more developers are contributing to such projects these days, but these improvements are minor and sporadic.

This trait makes them useful for teams with limited TTS requirements. However, as the needs grow, whether it is in terms of diversity or volume, professionals will get significantly better results with commercial TTS engines.

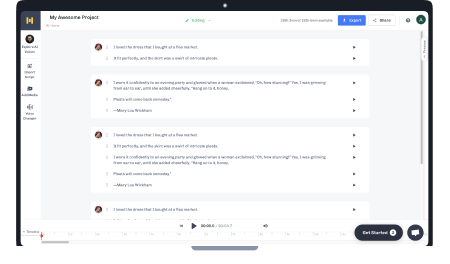

Murf: The Best Alternative to Open-Source TTS Engines

Murf AI stands out as a powerful commercial alternative to the open-source engines discussed earlier. The TTS solution delivers realistic, human-like voiceovers with minimal effort, making it ideal for content creators, marketers, educators, or anyone producing voice-enabled assets at scale.

Users can look forward to:

- Over 200 AI-generated voices across 30+ languages and numerous accents. This variety gives you flexibility to match tone, language, or regional nuances.

- Rapid adoption rate. The intuitive platform is cloud-based, meaning everyone, including non-technical users, can hit the ground running within minutes.

- Advanced customization capabilities to tweak speech parameters like pitch, speed, emphasis, pauses, and tone.

- Capabilities beyond basic TTS, such as dubbing, voice cloning, and multilingual voiceovers, for creating a range of content for a variety of audience segments.

- Robust and powerful TTS API, known as Murf Falcon, that has ultra-low latency (~55ms) and the fastest time-to-first-voice (~130ms), making it suitable for real-time applications.

Ready to level up your TTS workflows?

Sign up for Murf today and enjoy ten free voice generation minutes.

Frequently Asked Questions

How does open-source TTS benefit developers?

Open-source TTS benefits developers by offering flexibility, customizability, and cost-effectiveness. Developers can modify the source code to fit their specific requirements, contribute to the community, and integrate TTS capabilities into their applications without the constraints of licensing fees.

Is open-source TTS compatible with various platforms?

Yes, many open-source TTS solutions are designed to be integrated across various operating systems and devices. This flexibility ensures developers can deploy applications with TTS capabilities on desktop, web, and mobile platforms.

Are there limitations on the languages supported by open-source text to speech?

While open-source TTS projects offer support for multiple languages, the quality and extent of support can vary significantly between languages. Popular languages like English, Spanish, and Mandarin often have better support and higher-quality voices, while less commonly spoken languages might have limited or lower-quality options.

Can open-source TTS be integrated into mobile apps?

Yes, open-source TTS can be integrated into mobile apps. Many open-source projects provide APIs or SDKs that facilitate the incorporation of TTS functionality into Android and iOS applications.