How Is Voice Cloning Used by Cybercriminals? Exploring the Risks

Voice cloning technology is evolving rapidly, and so are the tactics of those who exploit it. Cybercriminals now use artificial intelligence (AI) voice cloning to run scams and cheat people out of their money.

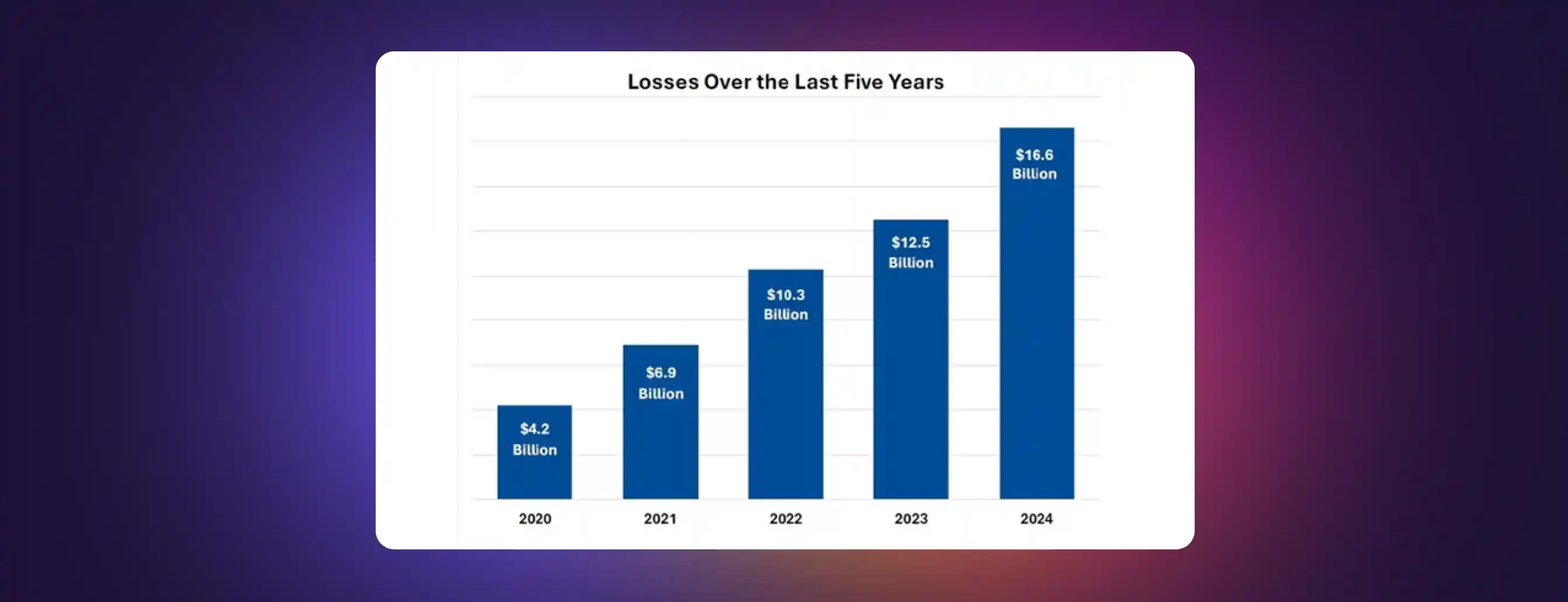

According to the FBI’s Internet Crime Complaint Center (IC3), reported losses from internet crime reached $16.6 billion in 2024, up from $4.2 billion in 2020. These figures cover all reported cybercrime, not only voice fraud, but voice phishing (vishing) scams using cloned voices are part of this growing financial toll.

Perpetrators of these crimes pose as a family member or an executive at a well-known company to gain the victim’s trust and trick them into sending money or revealing sensitive information.

All these criminals need is a short sample of a person’s voice, which is often easy to find in social media posts or other recordings. With that sample, they use AI voice cloning tools to generate audio that sounds almost identical to the original speaker.

Voice cloning attacks are typically launched to gain unauthorized access to sensitive data, initiate money transfers, and spread false information. But there’s a way to fight back! AI voice detectors, built on the same advances that power deepfakes, can flag cloned voices by analyzing audio patterns that human ears might miss.

In this article, we’ll look at how cybercriminals misuse voice cloning technology, how you can protect yourself, and the role AI voice detectors play in preventing such incidents.

AI Voice Cloning and Vishing

As the name suggests, AI voice cloning is the creation of a digital replica of a person’s voice using artificial intelligence and machine learning. Even a short audio clip from a social media post or voicemail can be enough for AI voice cloning tools to recreate the original speaker’s tone, speech patterns, and emotions with astonishing accuracy.

Next, consider vishing, or voice phishing. Here, cybercriminals call victims and ask them to disclose confidential or financial data, like bank account details or login credentials. AI voice cloning makes these attacks more dangerous and strengthens social engineering tactics, because the real voice can be very hard to tell apart from the fake one.

What does a voice cloning attack look like in practice? When a cybercriminal calls you, you don’t hear an unknown caller but someone you know, like your boss, a friend, or a family member in need of urgent help.

This is where voice cloning scams get real. Threat actors can trick people into sending money or making financial transactions by using a cloned voice. They don’t need technical skills, just access to AI tools and a convincing script.

And because voice recognition systems can fail to detect synthetic speech, these scams are becoming harder to spot. It’s one of the scariest ways malicious actors are weaponizing an emerging technology against unsuspecting victims.

How Is Voice Cloning Used by Cybercriminals

Powered by artificial intelligence, voice cloning is fast becoming a favorite tool of cybercriminals. The scam usually follows a pattern:

- Create a sense of urgency

- Sound trustworthy

- Ask for money through wire transfers, gift cards, or even crypto

What’s truly shocking is how the entire plot is set up. First, attackers get their hands on voice recordings from social media platforms like YouTube, TikTok, or Instagram. Then they use widely available AI voice cloning tools to generate a voice that’s eerily similar to the real person’s. Finally, they call the victim, often spoofing the caller ID to seem trustworthy, and say whatever it takes to swindle them.

Voice cloning attacks are a real threat and are happening all over the world, which makes it clear that nearly anyone can be targeted.

- Scammers cloned the voice of a company director in the UAE and pulled off a $51 million heist.

- A Mumbai-based businessman reportedly received a call from what he thought was the Indian Embassy in Dubai. Guess what? It wasn’t.

- In Australia, threat actors used a fake voice of Queensland Premier Steven Miles to push a bogus Bitcoin investment scheme.

And if you thought kids were safe from this, think again! In a chilling case from the US, a teenager’s voice was cloned and used in a fake kidnapping call. Terrified, her parents almost caved in to the scammer’s demands.

In 2023, the potential of voice cloning for cyberattacks became clearer. In a personal experiment, Wall Street Journal columnist Joanna Stern used a clone of her own voice to get past her bank’s voice verification. Her experiment harmed no one, but it showed how the technology could be misused.

In fact, an investigation by McAfee Labs highlighted how easy these attacks can be to pull off. In just two weeks, researchers found more than a dozen free, accessible, and highly effective AI voice cloning tools online. What’s more, because producing speech with a cloned voice takes little more than typing text, even people with zero technical skills could use them.

In one case, the researchers produced a clone with an 85% voice match from a three-second audio sample, and raised that to 95% with more data and fine-tuning. The tools could also mimic accents from the US, UK, India, and Australia.

Based on these findings, the researchers warned that AI is making it simpler to create digital replicas of voices. Today, nearly anyone with access to AI tools can create malware, run voice cloning scams, or carry out social engineering attacks without any technical skills.

For better or worse, people are waking up to this reality and questioning what they hear and see online. A study found that 32% of adults now trust social media a lot less, largely due to deepfake voice technology, cloning scams, and the overall flood of false information caused by generative AI.

How to Safeguard Against Voice Cloning Scams

As voice cloning technology grows more advanced, so do the scams. Defending against them, and against deepfakes more broadly, means going beyond general caution and taking clear action. Here’s how individuals and organizations alike can protect themselves from voice cloning scams and related threats.

Activate Multi-factor Authentication (MFA)

Stay vigilant and always use multi-factor authentication, especially for financial transactions or sensitive data. Don’t make the mistake of relying on voice recognition alone. If someone gives instructions over a call, a second layer of verification helps. For example, biometric verification such as a fingerprint is much harder to fake than a voice. Most phones support it, so be sure to use it.
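To show what a second factor that a cloned voice cannot supply looks like, here is a minimal Python sketch of a time-based one-time password (TOTP, RFC 6238), the kind of code authenticator apps generate. This swaps in TOTP as an illustration in place of the fingerprint example above; the function names `totp` and `verify_totp` are our own, and this is a teaching sketch rather than production code (in practice, rely on your identity provider or a vetted library).

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, for_time: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Compute an RFC 6238 time-based one-time password (HMAC-SHA1 variant)."""
    if for_time is None:
        for_time = time.time()
    # Number of whole time steps since the Unix epoch.
    counter = int(for_time // step)
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation (RFC 4226): the low 4 bits of the last byte pick an offset.
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify_totp(secret: bytes, submitted: str, for_time: Optional[float] = None,
                step: int = 30, digits: int = 6, window: int = 1) -> bool:
    """Accept a code from the current time step or up to `window` steps either side."""
    now = time.time() if for_time is None else for_time
    for drift in range(-window, window + 1):
        expected = totp(secret, now + drift * step, step, digits)
        # Constant-time comparison avoids leaking how many digits matched.
        if hmac.compare_digest(expected, submitted):
            return True
    return False
```

The `window` parameter tolerates small clock differences between the phone and the server. The key point is that the code depends on a secret stored on the user’s device, so a caller who only has a convincing voice cannot produce it.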

Educate Employees and Yourself

Employee errors and insider threats are real risks, and many can be prevented by training staff on how vishing works and whom it targets. Regular training on the latest scam tactics, the evolving capabilities of AI voice cloning, and emerging social engineering trends helps people recognize warning signs and flag them before irreparable damage is done. Staying abreast of evolving cybercrime is key to thwarting these attacks.

Implement Stringent Protocols

Organizations and individuals should set clear, specific security rules for money transfers and data sharing. No matter how familiar the voice sounds, nobody should skip the protocol. Make it a practice to treat every unexpected request as malicious until verified.
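For a finance team, “nobody skips the protocol” can be built into the approval workflow itself. Below is a minimal Python sketch of one such rule, a callback check: a transfer request stays blocked until staff call the requester back on a number already on file, never a number supplied during the call. Everything here (`TRUSTED_NUMBERS`, `TransferRequest`, `confirm_by_callback`, `may_execute`) is hypothetical and only illustrates the idea; a real organization would enforce it inside its payment or ticketing system.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical directory of contact numbers collected in advance through a
# trusted process (HR records, in-person onboarding), never from an inbound call.
TRUSTED_NUMBERS = {
    "finance.director": "+15550100",
}


@dataclass
class TransferRequest:
    claimed_requester: str                         # who the caller says they are
    amount: float
    caller_supplied_number: Optional[str] = None   # recorded, but never trusted
    verified: bool = False                         # every request starts unverified


def confirm_by_callback(req: TransferRequest, dialed_number: str, confirmed: bool) -> bool:
    """Mark the request verified only if staff dialed the number on file
    and the person reached there confirmed the request."""
    on_file = TRUSTED_NUMBERS.get(req.claimed_requester)
    if on_file is not None and dialed_number == on_file and confirmed:
        req.verified = True
    return req.verified


def may_execute(req: TransferRequest) -> bool:
    # How familiar the voice sounded is deliberately not an input here.
    return req.verified
```

Tying verification to the number on file is the design choice that matters: a scammer who spoofs caller ID or offers their own “callback number” cannot satisfy the check.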

Verify with Real People

If you get a suspicious call from someone you “know,” don’t start giving away information immediately. Hang up and call the person back directly on a number you trust. For family emergencies, agree on a code word in advance. If a call seems suspicious, asking for the code word is a quick way to confirm who is really on the line.

Data Encryption is Non-Negotiable

Basic cybersecurity hygiene demands strong encryption for files in transit and at rest. Encryption won’t stop someone from cloning your voice from recordings you’ve shared publicly, but it can protect your files if a scammer hacks their way into your system.

Limit Online Sharing

Oversharing gives threat actors the material to build an audio profile and gain unauthorized access. The more you post, the easier it is for malicious actors to collect voice samples. Avoid posting phone numbers, voicemail greetings, and long videos unless they’re necessary.

Use the Latest Detection Tools

As deepfake voice technology advances, so do the tools that help prevent its misuse: the same AI that creates cloned voices can also be used to detect them. As a result, AI voice detection tools are improving quickly. Keep an eye on the latest developments and use these tools to reduce your risk of becoming a victim.

Murf: The AI Voice Cloning Tool You Can Count On

In the midst of unending vishing attempts and cyberattacks, Murf AI stands its ground as voice cloning software you can rely on. Murf isn’t just another voice cloning tool: it’s built around ethical artificial intelligence, using top‑tier machine learning algorithms to generate realistic cloned voices responsibly.

With Murf, custom voice models come from professional voice actors and are created only with express permission. This way, users own the digital replica of their voice and control who uses it.

Every clone captures emotions such as anger, happiness, and sadness, along with nuances like intonation. It also learns the speaker’s unique speech patterns, making the clones well suited to use cases like training, narration, podcasts, and more.

Here’s the cherry on the cake: Murf’s policies forbid illicit or misleading use of its technologies. Our platform ensures traceability, transparency, and security, where users decide who can access and deploy their voices. All in all, Murf AI is not just about creating voices, but about using them responsibly.


Frequently Asked Questions

What are the dangers of voice cloning?


Voice cloning technology can be misused by cybercriminals to impersonate others, launch vishing attacks, steal sensitive information, or manipulate human emotions. This can lead to financial fraud and cybersecurity breaches.

What is AI deepfake voice?


AI deepfake voice refers to a cloned voice generated using artificial intelligence and machine learning. It is often used to mimic a person’s voice for malicious purposes like scams or deception.

What’s the best voice cloning scam prevention tip?


Always use multi-factor authentication and verify requests through a separate channel. Don’t trust instructions based only on a familiar-sounding voice, because threat actors can easily fake it.

How to detect voice cloning scams?


Watch for unusual phrasing, poor timing, or emotional pressure. Use AI voice detectors and train staff to recognize signs of voice cloning scams and vishing attacks.

Does Murf AI provide ethical voice cloning?


Yes, our AI models and voice data are securely encrypted. Your data is stored on AWS, which is compliant with PCI-DSS, SOC 1/2/3, FedRAMP, GDPR, HIPAA/HITECH, and more. Data in transit is protected with HTTPS (TLS) using SHA-2 algorithms. All these steps restrict misuse, prioritize transparency, and help users stay protected from synthetic voice abuse and threats.