The Evolution of Text to Speech Models

Key Takeaways

- TTS has moved far beyond the old robotic voice and now delivers speech that feels smoother, clearer, and closer to real human conversation.

- Neural models and deeper training data have pushed voice quality forward in a big way, especially in terms of tone, pacing, and clarity.

- Education, healthcare, accessibility tools, and customer service see the biggest real-world benefits from these improvements.

- The next phase of TTS will focus heavily on personalization, letting users shape voices to match how they prefer information delivered.

- Emotional intelligence in TTS is becoming a priority, helping models respond with tone that fits the moment instead of sounding flat.

- As the tech matures, TTS will feel less like a feature and more like a natural layer of how we interact with digital content.

- In short, TTS is shifting from “reading text aloud” to offering genuinely supportive, human-centered communication.

Text to Speech isn’t considered a niche tech feature anymore. It’s woven into the technology we use every single day. This includes phones reading out messages while we’re on the move, smart speakers calling out reminders, or our browser narrating an article when our eyes need a break.

By turning written words into natural-sounding speech, TTS makes our devices come a little more alive. It feels like interacting with people rather than machines. Also, it’s quicker, easier, and makes tech feel more like an assistant that actually gets you.

This shift isn’t just convenient; it’s massive. The global TTS market was valued at roughly USD 4.0 billion in 2024 and is forecast to grow to around USD 7.6 billion by 2029, at a compound annual growth rate (CAGR) of 13.7%.
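As a quick sanity check on those figures, the sketch below compounds the 2024 value at the stated CAGR over the five years to 2029. The function name `project_value` is purely illustrative.

```python
def project_value(start_value: float, cagr: float, years: int) -> float:
    """Compound a starting value at a fixed annual growth rate."""
    return start_value * (1 + cagr) ** years


# Figures quoted above: USD 4.0 billion in 2024, 13.7% CAGR, 2029 horizon.
projected_2029 = project_value(4.0, 0.137, 2029 - 2024)
print(f"Projected 2029 market size: USD {projected_2029:.1f} billion")
# prints "Projected 2029 market size: USD 7.6 billion"
```

The result lines up with the forecast, so the quoted start value, end value, and growth rate are consistent with each other.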

This growth is being fuelled by increasing demand as more industries adopt voice-based interaction, accessibility tools, and AI-powered voice features.

AI techniques such as machine learning and natural language processing play a crucial role in the quality of synthesized speech. They are used to train the text to speech models that underpin the technology.

Let's explore the various types of TTS models and the leading brands that are shaping the future of this technology.

Understanding the Different Types of Text to Speech Models

Text-to-speech models have evolved into several distinct types to meet diverse needs and applications. Here’s how the major TTS models stack up and where they’re used in the real world.

1. Concatenative Synthesis Models

Concatenative Synthesis Models represent a traditional approach in the realm of text to speech (TTS) technology. These models are built on huge libraries of pre-recorded human speech. Every tiny unit, including phonemes, syllables, and words, lives inside a database. When you enter text, the system picks the right pieces and stitches them together into a full sentence.

Because the audio comes from real human recordings, the voice quality is often clean and recognizable. The downside is flexibility: the system can only combine units that have already been recorded, and the files take up enormous storage.
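To make the lookup-and-stitch idea concrete, here is a minimal sketch. The unit names and sample values in `UNIT_DATABASE` are made-up placeholders, not real recordings, and a production system would also smooth the joins between units.

```python
# Toy unit database: each unit maps to a short list of audio samples.
# Real systems store recorded waveforms; these numbers are placeholders.
UNIT_DATABASE = {
    "hel": [0.1, 0.3, 0.2],
    "lo": [0.4, 0.1],
    "world": [0.2, 0.5, 0.3, 0.1],
}


def synthesize(units: list[str]) -> list[float]:
    """Look up each unit and join the recordings end to end."""
    audio: list[float] = []
    for unit in units:
        if unit not in UNIT_DATABASE:
            # The flexibility limit: nothing recorded means nothing to play.
            raise KeyError(f"No recording for unit: {unit!r}")
        audio.extend(UNIT_DATABASE[unit])
    return audio


samples = synthesize(["hel", "lo", "world"])
print(len(samples))  # 9 samples: 3 + 2 + 4
```

Asking for a unit that was never recorded raises an error, which is the flexibility problem in miniature.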

Despite being a legacy method, it still works well for applications where consistency matters more than expressive emotion. Think automated announcements, basic IVR systems, and older navigation devices.

2. Parametric Synthesis Models

Parametric Synthesis Models offer a versatile approach to text to speech (TTS) technology. They address the main limitations of concatenative systems.

Instead of putting pre-recorded audio together, they generate speech using parameters like pitch, duration, and intonation. You type the text in, the model converts it into phonetic units, and then it reshapes those units using mathematical rules to sound like speech.

It’s fast, it uses less memory, and it can switch between voices or languages without needing massive datasets. The tradeoff, however? The speech can sound robotic if not tuned well.
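As a rough illustration of generating audio from parameters rather than recordings, the sketch below renders each unit from just a pitch and a duration. The pure sine tone is a deliberately crude stand-in for a real vocoder, and the sample rate and parameter values are assumptions chosen for the example.

```python
import math

SAMPLE_RATE = 16_000  # samples per second (assumed for this sketch)


def render_unit(pitch_hz: float, duration_s: float) -> list[float]:
    """Generate a sine tone from pitch and duration parameters."""
    n_samples = round(SAMPLE_RATE * duration_s)
    return [
        math.sin(2 * math.pi * pitch_hz * i / SAMPLE_RATE)
        for i in range(n_samples)
    ]


# Hypothetical parameter track: (pitch in Hz, duration in seconds) per unit.
parameter_track = [(120.0, 0.10), (140.0, 0.05), (110.0, 0.20)]

waveform: list[float] = []
for pitch_hz, duration_s in parameter_track:
    waveform.extend(render_unit(pitch_hz, duration_s))

print(len(waveform))  # 5600 samples, i.e. 0.35 seconds at 16 kHz
```

Nothing here is stored audio, which is why the approach is light on memory. It is also why the output sounds synthetic unless the parameters are modeled carefully.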

These models became popular in GPS devices because they could dynamically generate spoken instructions on the fly. They also show up in lightweight voice assistants and multilingual tools where storage and speed are bigger priorities than warmth or personality.

3. Neural Network-Based Models

Neural Network-Based Models use machine learning to produce speech that mimics human intonation and rhythm. The networks are trained on extensive collections of speech samples, so they learn the mapping from text to sound directly from data.

Instead of using pre-recorded clips or rule-based synthetic sounds, these models predict what human speech should sound like for any given text input. The result is smoother flow, more natural intonation, and better support for different accents and styles.

Models like Tacotron and Tacotron 2 paved the way here, becoming the backbone of many modern voice assistants and customer service bots. They handle everything from casual reading to expressive dialogue in audiobooks and videos.

4. End-to-End Deep Learning Models

End-to-End Deep Learning Models are at the forefront of TTS technology. Instead of breaking text down into phonemes or relying on multiple processing stages, they turn raw text into speech in one continuous pipeline.

These systems are trained on massive spoken language datasets. Over time, they learn not just pronunciation, but emotion, cadence, and context. That’s why newer TTS voices feel more realistic, able to sound warm, urgent, calm, or conversational depending on the input.

They’re also getting better at things like:

- adapting to a user’s speaking style

- generating expressive voices for character dialogue

- supporting mixed-language sentences

- cloning voices with just a few minutes of audio (when allowed ethically)

The weak spots? They can still fumble rare accents, slang, or oddly structured sentences if the training data doesn’t cover them well.

These models now power cutting-edge applications like AI voice assistants, real-time narration tools, and extremely natural voiceovers for videos and learning content.

Brands Leading in Text to Speech Technology

Several leading companies have developed their own text to speech (TTS) models, each utilizing different technologies to meet specific user needs.

Here’s a brief overview of the best text to speech models worth exploring in 2025:

Murf AI

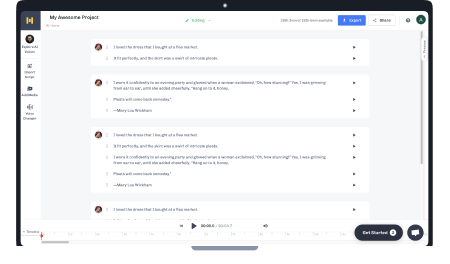

Murf AI uses cutting-edge neural network-based TTS models. It now offers two distinct, production-ready TTS products:

- Murf Speech Gen2: This is Murf’s most realistic, studio-quality neural voice model. Trained on 70,000+ hours of ethically sourced audio, Gen2 delivers native 44.1 kHz output and emphasizes natural rhythm, emotion, and high pronunciation accuracy for long-form voiceovers, e-learning, dubbing, and marketing content. Gen2 supports rich customization (voice styles, prosody, pauses) so creators can generate multiple expressive versions of the same line.

- Murf Falcon (TTS API): Falcon is built for real-time and large-scale conversational use. It’s an ultra-fast streaming model with predictable time-to-first-audio under 130 ms, supports high concurrency at edge scale, and is optimized for low-latency voice agents and IVR systems. Falcon and Gen2 both offer complete control over pitch, speed, prosody, and pronunciation. Further, the API supports REST and WebSocket integration and returns word-level timestamps for tight audio-text sync.

Overall, Gen2 is most suitable for studio-grade content (ads, audiobooks, video dubbing), and Falcon for real-time conversational agents, large-scale IVR, and multilingual voice pipelines.
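Word-level timestamps are what make features like live captions or word highlighting possible. The sketch below assumes the timestamps arrive as `(word, start_seconds, end_seconds)` tuples; that shape is hypothetical and is not taken from Murf's API documentation.

```python
from bisect import bisect_right
from typing import Optional

# Hypothetical timestamp data: (word, start_seconds, end_seconds).
word_timestamps = [
    ("Welcome", 0.00, 0.42),
    ("to", 0.42, 0.55),
    ("the", 0.55, 0.68),
    ("demo", 0.68, 1.10),
]

start_times = [start for _, start, _ in word_timestamps]


def word_at(playback_seconds: float) -> Optional[str]:
    """Return the word being spoken at a playback position, if any."""
    index = bisect_right(start_times, playback_seconds) - 1
    if index < 0:
        return None
    word, _, end = word_timestamps[index]
    return word if playback_seconds < end else None


print(word_at(0.50))  # prints "to"
```

A player could call `word_at` on every playback tick and highlight the returned word; it returns `None` before the first word and after the last one ends.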

Google TTS

Google utilizes both Tacotron and WaveNet models to power its text to speech technology. Tacotron transforms written text into mel-spectrogram representations for speech synthesis, effectively capturing the nuances of human speech, such as intonation and rhythm.

WaveNet, a deep neural network, generates highly natural and expressive speech from these spectrograms. It uses a revolutionary approach to produce one audio sample at a time, which allows it to emulate the intricacies of the human voice with unprecedented accuracy.

Together, they create a seamless user experience, particularly in applications like Google Assistant and Maps, where clear, human-like speech is essential.
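Producing "one audio sample at a time" is known as autoregressive generation: each new sample depends on the samples that came before it. The toy loop below shows only that feedback structure. The fixed weights are placeholders where a trained neural network would sit, and the `conditioning` values stand in for spectrogram-derived input, so this is not WaveNet itself.

```python
def generate_autoregressive(conditioning: list[float]) -> list[float]:
    """Produce one sample per conditioning value, feeding outputs back in."""
    samples: list[float] = [0.0, 0.0]  # two samples of silent warm-up context
    for frame_value in conditioning:
        # A real model would run a neural network here; this fixed weighted
        # sum is a placeholder so the feedback loop is easy to see.
        next_sample = 0.5 * samples[-1] - 0.25 * samples[-2] + frame_value
        samples.append(next_sample)
    return samples[2:]  # drop the warm-up context


audio = generate_autoregressive([1.0, 0.0, 0.0, 0.0])
print(audio)  # [1.0, 0.5, 0.0, -0.125]
```

Even after the input goes quiet, earlier outputs keep shaping later ones. The same dependency also makes sample-by-sample generation slow, since each step has to wait for the previous one.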

Amazon Polly

Amazon Polly offers two main TTS models: neural TTS for high-quality, lifelike speech with adaptive intonation and standard TTS for a more basic but cost-efficient solution.

- Neural TTS uses deep learning to generate fluid, expressive voices for customer-facing experiences.

- Standard TTS relies on more traditional synthesis techniques, offering a cost-efficient, slightly more robotic output.

It’s widely used in notifications, IVR flows, chatbots, and digital content where large volumes of speech need to be generated reliably, for example in customer service applications.

Microsoft Azure TTS

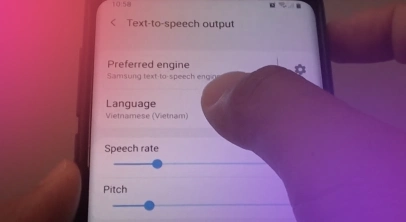

Microsoft Azure's neural TTS technology leverages deep neural networks to convert text into lifelike spoken audio. It supports a wide range of languages and dialects, including English, Mandarin, Spanish, French, and Arabic. This capability is crucial for applications that require inclusivity and accessibility worldwide.

Thanks to its strong customization features, users can adjust pitch, pacing, and speaking style or create custom voice models, as needed. Because it scales well globally, Azure often powers e-learning tools, audiobooks, healthcare assistants, and enterprise chatbot platforms.

It also enhances customer service by improving the naturalness of responses in AI-driven chatbots and virtual assistants. This versatility and adaptability make Azure's TTS a powerful tool for creating accessible and engaging user experiences in multiple languages.
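Pitch and pacing adjustments like these are typically expressed in SSML (Speech Synthesis Markup Language), a W3C standard that Azure's TTS accepts. The sketch below only builds the markup with the standard `<prosody>` element. The voice name is a placeholder, and sending the request to the service is left out.

```python
import xml.etree.ElementTree as ET

SSML_NAMESPACE = "http://www.w3.org/2001/10/synthesis"


def build_ssml(text: str, voice: str, rate: str = "medium", pitch: str = "medium") -> str:
    """Wrap text in SSML that requests a speaking rate and pitch."""
    speak = ET.Element(
        "speak",
        {"version": "1.0", "xmlns": SSML_NAMESPACE, "xml:lang": "en-US"},
    )
    voice_element = ET.SubElement(speak, "voice", {"name": voice})
    prosody = ET.SubElement(voice_element, "prosody", {"rate": rate, "pitch": pitch})
    prosody.text = text  # ElementTree escapes &, <, and > on output
    return ET.tostring(speak, encoding="unicode")


# "your-voice-name" is a placeholder; pick a real voice from the service's list.
ssml = build_ssml("Your appointment is at 3 PM.", "your-voice-name", rate="slow", pitch="high")
print(ssml)
```

Building the markup with an XML library rather than string formatting means special characters in the text cannot break the document.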

IBM Watson Text to Speech

IBM Watson focuses strongly on enterprise environments where precision and terminology accuracy matter. Its neural TTS system delivers steady, clear speech even with domain-specific language.

It shows up in IT support systems, legal tech, fintech platforms, and automotive interfaces where clarity is essential for technical or instructional content.

OpenAI Whisper

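Unlike the other tools in this list, Whisper is a speech recognition (speech-to-text) model rather than a TTS model: it turns spoken audio into text. It earns a mention because speech recognition often sits alongside TTS in voice applications. Trained on 680,000 hours of diverse, multilingual data, Whisper is particularly adept at handling a variety of speech patterns, accents, background noises, and domain-specific language.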

Whisper’s applications are vast, including enhancing accessibility by providing real-time captions for the deaf and hard-of-hearing community, automating transcription for media and legal sectors, and creating voice-enabled interfaces for apps and devices.

Additionally, its open-source nature encourages innovation, enabling developers to integrate voice-to-text capabilities into their applications or experiment with further advancements in speech recognition technology.

Companies such as Snap, Quizlet, and Truvideo are utilizing OpenAI's Whisper API to leverage its speech recognition capabilities across various industries.

Future of Text to Speech Models

Ongoing advancements in artificial intelligence and machine learning are set to drive significant progress in the future of text to speech (TTS) technology. Here are a few trends shaping this landscape:

Advancements in Neural Networks

Modern neural networks are about to go far beyond the “read-this-text-back-to-me” feature.

- More natural output: Deep learning models trained on massive, diverse datasets will start catching the tiny quirks in human delivery. Rhythm. Breath patterns. Emotional shading. Instead of flat audio that merely sounds correct, we’ll get speech that understands context and reacts to it.

- Smarter, context-aware models: Architectures built with attention mechanisms and prosody prediction will handle tone the way a real speaker does. If the text is urgent, the model speeds up. If it’s sentimental, it softens.

- Adaptive personalization: Building on frameworks like GPT-style transformers and Tacotron, future systems will tweak themselves to match your accent, pacing, and preferred delivery. The more you use them, the more they mirror you.

Enhanced Personalization and Naturalness

Personalization is becoming the baseline in TTS tools.

- Hyper-detailed voice controls: Users will adjust pitch, speed, timbre, even micro-intonations to craft voices that feel tailor-made for specific projects.

- Emotionally expressive models: With WaveNet, Tacotron, and their successors, TTS won’t just “read” emotions but perform them. Expect richer highs, softer lows, and more lifelike transitions.

- Feedback-driven evolution: As users interact with TTS systems, the models will update in real time. If you keep slowing the voice down, it’ll learn. If you favor a certain emotional style, it’ll adapt.
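One simple way such feedback could work is a running average that drifts toward whatever the listener keeps choosing. The sketch below is a hypothetical illustration of that idea. The class name, default values, and update rule are assumptions, not a description of how any particular product adapts.

```python
class RatePreference:
    """Track a listener's preferred speaking rate with a moving average."""

    def __init__(self, initial_rate: float = 1.0, learning_rate: float = 0.3):
        self.rate = initial_rate
        self.learning_rate = learning_rate

    def observe(self, chosen_rate: float) -> float:
        """Nudge the stored preference toward the rate the user just picked."""
        self.rate += self.learning_rate * (chosen_rate - self.rate)
        return self.rate


preference = RatePreference()
for chosen in [0.8, 0.8, 0.8]:  # the user keeps slowing playback down
    preference.observe(chosen)

print(round(preference.rate, 3))  # 0.869
```

After three sessions the default has moved most of the way from 1.0 toward 0.8 without jumping there on a single adjustment.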

This kind of responsiveness changes how TTS is leveraged across industries:

- Education: Apps can adjust narration speed and complexity based on how quickly a learner absorbs the material.

- Healthcare: Patients can receive clear, personalized, multilingual explanations that meet them at their level of understanding.

- Customer service: AI can detect frustration or confusion and shift tone to match the moment. Conversations become smoother, calmer, more human.

Overall, future TTS models will feel less like tools and more like digital voices with an actual presence. They’ll listen, adjust, and carry emotional intelligence that turns synthetic speech into something that sounds more and more human.

Wrapping Up

Text to speech has gradually grown from a stiff, robotic narrator into something that sounds far closer to a real voice you’d actually want to listen to. Better neural models, smarter training methods, and richer datasets have all nudged voice synthesis technology into a space where it can help people understand, learn, and stay connected.

You can see that shift everywhere, from students using audio lessons and patients receiving clearer health instructions to users who rely on accessible content every day.

The next wave of TTS is all about nuance: voices that shift tone naturally, systems that adjust pacing based on context, and models that adapt to personal preferences. As these tools mature, they’ll feel less like software and more like a familiar voice guiding you through whatever you need to get done.

Embracing these innovations, Murf AI continues to lead with cutting-edge TTS solutions, ensuring that every voice interaction is compelling and authentic. Whether you’re looking to elevate your content, streamline business communication, or create more engaging experiences, Murf AI offers the tools to bring your voice projects to life.

Sign up on Murf and take your voice projects to the next level today!

Frequently Asked Questions

What are text to speech models?

Text to speech (TTS) models convert written text into spoken words using computer algorithms. They are designed to enhance accessibility and improve user experience across various digital platforms by generating audible speech.

How do text to speech models work?

TTS models work by analyzing input text, breaking it down into smaller components like phonemes, and then generating speech based on those segments using either pre-recorded human voices or synthesized speech algorithms. Modern neural network-based models are trained to produce highly natural and smooth-sounding speech.
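The "analyze the text and break it into phonemes" step can be sketched as below. The two-word `LEXICON` uses ARPAbet-style symbols and is purely illustrative. Real front ends rely on large pronunciation dictionaries plus learned rules for unknown words, and also expand numbers and abbreviations.

```python
import re

# Toy pronunciation lexicon using ARPAbet-style symbols (illustrative only).
LEXICON = {
    "hello": ["HH", "AH", "L", "OW"],
    "world": ["W", "ER", "L", "D"],
}


def normalize(text: str) -> list[str]:
    """Lowercase the text and keep only runs of letters and apostrophes."""
    return re.findall(r"[a-z']+", text.lower())


def to_phonemes(text: str) -> list[str]:
    """Map each word to phonemes, flagging words missing from the lexicon."""
    phonemes: list[str] = []
    for word in normalize(text):
        phonemes.extend(LEXICON.get(word, [f"<unk:{word}>"]))
    return phonemes


print(to_phonemes("Hello, world!"))
# ['HH', 'AH', 'L', 'OW', 'W', 'ER', 'L', 'D']
```

The phoneme sequence is what the synthesis stage then turns into audio, whether by stitching recordings or by generating a waveform.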

Which TTS model is best for natural-sounding voices?

Neural Network-Based Models and End-to-End Deep Learning Models are considered the best for natural-sounding voices. They use advanced machine learning techniques to closely mimic human speech patterns, intonation, and expression, resulting in more realistic voice output.

How are TTS models trained and evaluated?

TTS models are trained using large datasets of human speech and text, allowing them to learn language patterns and speech characteristics. They are evaluated based on their ability to produce clear, natural, and contextually appropriate speech, often measured through user feedback and quality assessments like intelligibility and fluency tests.
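One common way to put a number on intelligibility is word error rate (WER): a listener or a speech recognizer transcribes the synthesized audio, and the transcript is compared word by word with the input text. The sketch below assumes the transcript already exists, and the example sentences are invented.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("Reference text must contain at least one word.")

    # previous[j] holds the edit distance between ref[:i-1] and hyp[:j].
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)


input_text = "please take two tablets after dinner"
transcript = "please take to tablets after dinner"
wer = word_error_rate(input_text, transcript)
print(f"{wer:.3f}")  # 0.167, one wrong word out of six
```

A lower WER means listeners recovered more of the intended words. It says nothing about how natural the voice sounds, which is why listening tests are usually run alongside it.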