Future of AI in Speech Recognition

"Alexa, play some music."

We are all familiar with this command. Amazon's Alexa is a voice-based conversational AI that uses AI-powered speech recognition to listen to human speech, put it into context, understand the intent behind it, and deliver accurate results within seconds.

Speech recognition has grown rapidly over the past five years, and market research estimates project that it will grow at a compound annual growth rate (CAGR) of 23.7% through 2029. These figures are huge, to say the least, and suggest the technology's momentum will continue well into the future.

So, what exactly does the future hold for AI speech recognition? How will AI help enhance speech recognition?

This blog explores these questions and sheds light on where the technology is headed.

Role of AI and Natural Language Understanding in Voice Recognition

AI plays a pivotal role in speech recognition software. Think of it this way: how good would Alexa (or Siri) be if it could not understand the command you gave it?

AI equips conversational AI software with the algorithms and technologies it needs to respond to voice commands quickly and accurately. In more detail, speech recognition AI converts the audio signal of a voice command into text and analyzes its syntax, grammar, composition, and structure, breaking the speech down into components the software can "understand."

Some conversational AI software also uses machine learning, which enables it to learn as it goes: the more speech recognition tasks the software completes, the more accurate and relevant its outputs can become.

Perhaps the most transformative aspect of AI in conversational AI is that organizations can repurpose voice command software for internal use. They can train the AI and natural language understanding (NLU) model to streamline internal workflows and support processes through simple voice commands.

In short, AI makes tasks simpler, more intuitive, and more accurate, and the same applies to speech-to-text AI. Businesses can design or adapt conversational AI technologies for a wide range of use cases.

Use Cases of AI Speech Recognition

Conversational AI has several revolutionary use cases across multiple industries that have made life easier for end users.

Voice Assistants

Beyond Alexa and Siri, there are countless voice assistants on the market today. In fact, Juniper Research predicts that by the end of 2024, the number of voice assistants in use will overtake the world's population, reaching 8.4 billion!

As AI continues to improve the speech recognition performance of voice assistants, more and more users will find them a convenient way to browse the internet or issue simple commands (like "Alexa, add eggs to the shopping cart").

Customer Satisfaction and Self-Service

AI voice recognition enhances the capabilities of conversational AI customer service channels. Business phone systems today employ intelligent interactive voice response (IVR) systems that work on voice commands and provide context-driven, dynamic menus, which helps deliver exceptional customer service.

These self-service phone channels are also more efficient: instead of wasting time navigating a lengthy menu or waiting to reach a customer service agent, customers can simply state the right voice command to the AI-powered IVR and resolve their query quickly and accurately.

Translation Services

Automatic speech recognition has made translation between languages much easier. You can use speech recognition software to record your speech in one language and regenerate the same speech in a different language.

Such software typically works in stages. It first transcribes your speech into text using automatic speech recognition, then machine-translates that text into the target language, and finally uses text-to-speech synthesis to produce the translated spoken version, often within seconds.
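Speech-to-speech translation is commonly built as a cascade of three stages: speech recognition, machine translation, and speech synthesis. The minimal Python sketch below shows only that data flow. The functions `transcribe`, `translate`, and `synthesize` and the two-word `TOY_EN_ES` table are hypothetical stand-ins, not any product's real API; a production system would call trained ASR, translation, and text-to-speech models at each step.

```python
# Hypothetical stand-ins for real ASR, machine translation, and TTS models.

# Toy English-to-Spanish word table (assumption, for illustration only).
TOY_EN_ES = {"hello": "hola", "world": "mundo"}


def transcribe(audio: bytes) -> str:
    """Toy ASR: treats the 'audio' as UTF-8 text instead of decoding sound."""
    return audio.decode("utf-8")


def translate(text: str, table: dict[str, str]) -> str:
    """Toy MT: word-by-word lookup; unknown words pass through unchanged."""
    return " ".join(table.get(word, word) for word in text.lower().split())


def synthesize(text: str) -> bytes:
    """Toy TTS: returns the text as bytes instead of generating audio."""
    return text.encode("utf-8")


def speech_to_speech(audio: bytes, table: dict[str, str]) -> bytes:
    """Cascade: speech -> source text -> target text -> speech."""
    source_text = transcribe(audio)
    target_text = translate(source_text, table)
    return synthesize(target_text)


print(speech_to_speech(b"Hello world", TOY_EN_ES))  # b'hola mundo'
```

Keeping the stages separate lets each model be swapped or improved independently; some newer systems instead translate speech directly, without an intermediate transcript.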

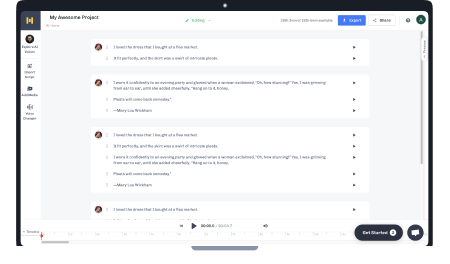

Transcription

One of the most tedious and repetitive tasks businesses deal with is generating transcriptions of audio or video meetings.

Thankfully, with the help of conversational AI software, it is possible to automatically generate transcriptions of whatever is spoken over a communication channel.

All you need to do is connect the conversational AI software to the communication channel and enable the transcription option. Many tools can also strip filler and small talk from call transcripts and generate meeting minutes.
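Cleaning a transcript of fluff can be as simple as removing common filler words. The Python sketch below assumes a small, hypothetical `FILLERS` list and uses regular expressions with word boundaries so that, for example, "um" is removed but "umbrella" is left alone. Commercial tools generally rely on trained models rather than a fixed word list, and this simple approach can occasionally drop a comma that belonged to the sentence.

```python
import re

# Hypothetical filler list; real tools typically use trained models instead.
FILLERS = ["you know", "i mean", "um", "uh", "erm"]

# Match a filler as a whole word or phrase, together with an optional
# preceding comma/whitespace and an optional trailing comma.
_FILLER_RE = re.compile(
    r",?\s*\b(?:" + "|".join(re.escape(f) for f in FILLERS) + r")\b,?",
    re.IGNORECASE,
)


def clean_transcript(text: str) -> str:
    """Remove filler words and tidy up the leftover spacing."""
    without_fillers = _FILLER_RE.sub("", text)
    # Collapse runs of whitespace and trim the ends.
    tidy = re.sub(r"\s+", " ", without_fillers).strip()
    # Re-capitalize in case a leading filler was removed.
    return tidy[:1].upper() + tidy[1:]


print(clean_transcript("Um, the report is, uh, due on Friday, you know."))
# The report is due on Friday.
```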

Assistive Technology

One of the noblest use cases of speech recognition systems is assistive technology. For example, conversational AI software can help a person with limited mobility browse the internet or type out documents using dictation tools.

It can also help them complete tasks through IoT-enabled smart home devices, such as operating the gadgets and appliances in their home with voice commands. This technology makes everyday life easier to navigate for people with physical disabilities.

How Will AI Help Improve Speech Recognition Models?

The latest speech recognition applications are noticeably more accurate than what the world was used to only about a year ago. AI models improve as they are trained on more input data, producing more relevant, human-like answers to user queries. This makes conversational AI a progressive technology that should keep getting better over time.

Even modest refinements to the underlying algorithms can yield significant improvements and new functionality in speech recognition software.

Listed below are five key ways AI is likely to improve conversational AI software and technologies in the future.

Better Accuracy

"I'm sorry, I didn't understand that."

Responses like this should become less frequent as AI makes voice assistants smarter and quicker. With a broader grasp of semantics and better natural language processing, voice assistants and conversational AI software should become more accurate at understanding voice commands and generating the right outputs.

Improvements in AI algorithms would help these applications better understand and interpret human speech.

Accent Identification

If you ask somebody why they are frustrated with voice assistants, they will probably say, "It doesn't understand what I say." There are thousands of languages worldwide, and accent inevitably comes into play when people from different cultures and language backgrounds use a speech recognition tool designed primarily for another language.

As AI performance improves, conversational AI tools should be able to break down the accent barrier and deliver a truly better customer experience.

Machine-Human Interactions

AI can improve the quality of interactions between machines and humans. Think of Iron Man's Jarvis: that level is still quite a few years away, but AI is steadily polishing speech recognition in that direction.

With a better understanding of the nuances and subtleties of human speech, machines can be made to understand not just the spoken words but also, to a limited extent, their implied meaning. For example, machines can be programmed to understand the meaning behind popular idioms.

Personalization

AI has long been used to enhance customization and personalization in consumer-facing channels, helping businesses deliver better customer service. The same capabilities can improve the performance of conversational AI applications.

AI can enable voice assistants to offer proactive, personalized recommendations that enhance the user's experience. They can do this by drawing on the user's history, such as past conversations and patterns in how the user has given a specific command before.

A good example is Alexa recommending items for the shopping cart by understanding how frequently the user orders a specific product (like coffee beans, toilet paper, shoes, and more).
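One simple way to estimate when a user is due to reorder a product is to average the gaps between their past orders. The Python sketch below is a hypothetical illustration of that frequency-based idea, not how Alexa actually generates recommendations; the `history` data and the `reorder_suggestions` function are assumptions made for the example.

```python
from datetime import date, timedelta
from statistics import mean


def reorder_suggestions(history: dict[str, list[date]], today: date) -> list[str]:
    """Suggest products whose average reorder interval has elapsed.

    `history` maps a product name to the dates it was ordered (hypothetical data).
    """
    suggestions = []
    for product, order_dates in history.items():
        if len(order_dates) < 2:
            continue  # Need at least two orders to estimate an interval.
        ordered = sorted(order_dates)
        gaps = [(later - earlier).days for earlier, later in zip(ordered, ordered[1:])]
        # Predict the next order as the last order plus the average gap.
        expected_next = ordered[-1] + timedelta(days=mean(gaps))
        if today >= expected_next:
            suggestions.append(product)
    return sorted(suggestions)


history = {
    "coffee beans": [date(2024, 1, 1), date(2024, 1, 15), date(2024, 1, 29)],
    "shoes": [date(2023, 6, 1), date(2024, 1, 1)],
    "toilet paper": [date(2024, 1, 20)],
}
print(reorder_suggestions(history, today=date(2024, 2, 13)))  # ['coffee beans']
```

A real recommender would also weigh recency, seasonality, and explicit user feedback; averaging gaps is just the simplest way to turn "how frequently the user orders" into a prediction.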

Understanding Speech Complexities

Advanced AI algorithms can enable speech recognition software to analyze human speech both literally and for its nuances. While AI is still some way from fully understanding the implied meaning of human speech, it can already perform sentiment analysis and tailor its output accordingly.
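Sentiment analysis can range from large trained models to simple word lists. The minimal Python sketch below uses a tiny, hypothetical lexicon (`POSITIVE`, `NEGATIVE`) to score a transcribed utterance and then picks a reply tone based on that score. Real systems use trained classifiers that handle negation, sarcasm, and context, all of which this toy version ignores.

```python
# Tiny hypothetical sentiment lexicons; real systems use trained classifiers.
POSITIVE = {"great", "thanks", "love", "perfect", "helpful"}
NEGATIVE = {"wrong", "angry", "terrible", "broken", "frustrated"}


def sentiment_score(transcript: str) -> int:
    """Count positive words minus negative words (ignores negation and context)."""
    words = [w.strip(".,!?").lower() for w in transcript.split()]
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


def choose_reply(transcript: str) -> str:
    """Pick a reply tone based on the sentiment score."""
    score = sentiment_score(transcript)
    if score < 0:
        return "I'm sorry about that. Let me connect you with an agent."
    if score > 0:
        return "Glad I could help! Anything else?"
    return "Okay. What would you like to do next?"


print(choose_reply("My order arrived broken and I'm frustrated!"))
# I'm sorry about that. Let me connect you with an agent.
```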

To Conclude

AI augments speech recognition technology, making it more effective and useful. The combination of these two technologies holds tremendous promise, with major improvements expected in how they process human speech and generate responses. This is especially true for businesses seeking to increase customer satisfaction.

Frequently Asked Questions

What is the role of AI in Speech Recognition?


AI enables conversational AI software to be smarter, more accurate, more relevant, and quicker when responding to user queries or performing other tasks. This technology gives depth and quality to speech recognition software.

How is NLP used in speech recognition?


Natural language processing (NLP) and natural language understanding (NLU) focus on the interactions between software and humans through speech and transcribed text. NLP enables conversational AI to understand the context behind spoken words and map those words to familiar meanings.

For example, using NLP and machine learning, the software can recognize that "How hot is it outside?" and "What's the temperature outside?" are the same query.
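The weather example comes down to mapping different wordings onto one intent. The Python sketch below illustrates the idea with a hand-written, hypothetical synonym table and a single hypothetical intent named `get_outdoor_temperature`; production NLU systems learn these mappings from data (for instance with trained classifiers or embeddings) rather than from fixed tables.

```python
import re
from typing import Optional

# Hypothetical synonym table mapping surface words to canonical concepts.
SYNONYMS = {
    "hot": "temperature",
    "cold": "temperature",
    "warm": "temperature",
    "temperature": "temperature",
    "outside": "outdoors",
    "outdoors": "outdoors",
}

# Hypothetical intents, each defined by the set of concepts it requires.
INTENTS = {"get_outdoor_temperature": {"temperature", "outdoors"}}


def detect_intent(utterance: str) -> Optional[str]:
    """Return the first intent whose required concepts all appear in the utterance."""
    tokens = re.findall(r"[a-z']+", utterance.lower())
    concepts = {SYNONYMS[t] for t in tokens if t in SYNONYMS}
    for intent, required in INTENTS.items():
        if required <= concepts:  # Subset check: every required concept is present.
            return intent
    return None


for query in ["How hot is it outside?", "What's the temperature outside?", "Play some music"]:
    print(query, "->", detect_intent(query))
```

Both weather questions resolve to the same intent, while the unrelated command returns `None`.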

Which technology is used in speech recognition?


Technologies such as AI, machine learning, neural networks, deep learning, and natural language processing and generation are used in speech recognition and conversational AI. Each of these technologies helps make the software better in specific ways.

Which AI tool converts speech to text?


There are several tools that leverage the power of artificial intelligence to convert speech to text. Murf is one of the best options available today to convert speech to text using modern AI technologies.