Everything You Need to Know About Deepfake Voice

Imagine being able to hear the voice of your favorite celebrity or historical figure, even if they passed away years ago. Thanks to advances in deep learning and neural networks, this is now possible. Deepfake voice is becoming an increasingly popular tool in the world of media and entertainment. The technology makes it possible to create realistic computer-generated voices that appear to come from real people. Keep reading to learn more about what deepfake voices are, why they are concerning, and how to use them ethically.

What is a Deepfake Voice?

Deepfake technology uses artificial intelligence to create synthetic media, including fake audio that can sound authentic. It does this with deep learning algorithms that generate artificial sounds mimicking human voices.

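The term "deepfake" combines the words "deep learning" and "fake," referring to the technology used to manipulate audio clips or video recordings. It entered popular use in 2017, when a Reddit user posting under that name shared face-swapped videos. Generative adversarial networks (GANs), introduced in 2014 by Ian Goodfellow and colleagues, are often used to create deepfakes. Goodfellow later served as director of machine learning at Apple's Special Projects Group. In a GAN, a generator model produces fake samples while a discriminator model tries to tell them apart from real ones, and each improves by learning from its mistakes against the other.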
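
For readers who want the formal version, the competition between the models described above is commonly written as the minimax objective from the original GAN formulation. Here $D$ is the discriminator, $G$ is the generator, $x$ is a real sample (for voice, a real speech recording), and $z$ is random noise fed to the generator. This is the generic objective, not the training recipe of any particular voice cloning product, many of which rely on other architectures or modified losses.

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator is rewarded for scoring real samples near 1 and generated samples near 0, while the generator is rewarded when its output is scored as real.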

Initially designed to provide innovative text-to-speech tools with human-like voices, neural networks are now being trained on speech data sets to create lifelike voices from only a few minutes of audio content. Deepfakes can be used to create voice assistants, audiobook narrations, and video voiceovers, as well as to improve customer experience, personalize communication, and provide accessibility for individuals with disabilities.

However, this technology poses ethical concerns, such as the potential spread of fake news and the use of impersonation for fraudulent or criminal purposes.

Deepfake vs. Synthetic Voices: What's the Difference?

Deepfake and synthetic voices are both AI-generated, but they differ in how they are produced and what they imitate. Deepfake voices are created by training machine learning models on audio data to mimic a specific speaker. They are usually produced by manipulating existing footage or recordings of a person so that the person appears to say something they never said.

In contrast, synthetic voices are created by using text-to-speech (TTS) algorithms to generate new speech that sounds like a real human. The best synthetic voices can be difficult to distinguish from natural human voice recordings, and the technology is often used in voice assistants, audiobooks, and other applications.

While both raise ethical concerns regarding potential misuse, such as impersonation or manipulation of audio recordings, the choice between synthetic and deepfake depends on the user's specific use case and resources. Deepfake voice technology is often associated with fraudulent or malicious activities, while synthetic voice technology is primarily used for practical purposes, such as accessibility and convenience.

Use Cases of Deepfake Voice for Individuals and Businesses

From entertainment to healthcare to customer service, deepfake voices offer a variety of use cases for both individuals and businesses. Let's explore some of the key applications of such voices in these contexts.

For Individuals

People with speech disorders may find it challenging to communicate even a simple thought or idea effectively, which affects their quality of life. Deepfake technology can offer a promising solution to those with conditions such as Parkinson's disease, cancer, and multiple sclerosis, which can impair the ability to talk and communicate. It can transform a patient's voice to sound more natural and closer to how they used to speak. Veritone Voice is a leading deepfake voice generator that provides tailored solutions for individuals based on their specific needs.

For Businesses

From creating brand mascots to providing a variety of content like weather and sports reports, artificial intelligence has opened the doors to a variety of opportunities.

- Film and TV Industry: Deepfakes can be used to dub over an actor's voice in post-production, which is especially useful when the actor is unavailable or has passed away.

- Animation Industry: Deepfake technology allows animators to give unique and distinct tones to their characters, regardless of the voice actor's vocal range or language.

- Game Development Industry: Deepfakes have become popular for voicing in-game characters, with realistic and dynamic dialogues that match their personalities.

- Cross-Language Localisation: Deepfake voice technology enables people to speak other languages in their own voice, opening up new possibilities for this application in various industries.

- Dubbing Process: Dubbing is made more agile with deepfakes, enabling a user to dub over dialogues in multiple languages without extensive changes to the original recording or new voiceover actors.

Ethical Concerns Related to Deepfake Voices

Along with many exciting opportunities, deepfake voices have also raised several ethical concerns. The potential misuse of deepfakes for spreading disinformation or manipulating public opinion has sparked debates on the ethical use of this technology. Let's see some of the potential negative consequences of deepfake voices.

- Misinformation and Deception: Deepfakes can be used to spread false information or create confusion by impersonating someone else, damaging the reputation of individuals or organizations and public trust in them.

- Fraud: Criminals can use deepfake or cloned voices to impersonate others, such as bank officials or government officials, to extract sensitive information or commit fraud.

- Privacy and Consent: Deepfakes can be created from a small audio recording, which can be obtained without consent or knowledge, violating privacy rights. Using these voices for entertainment or commercial purposes without an individual's consent can also be a violation of their rights.

- Manipulation: Deepfakes can be used to create fake recordings of socially marginalized communities, promoting harmful stereotypes and discrimination. They also enable the creation of fake news or propaganda to influence public opinion.

- Lack of Regulation: Laws that specifically address deepfakes remain limited and vary widely by jurisdiction, which leaves room for misuse or abuse.

Ethical Principles to Follow When Using Deepfakes

Following ethical principles when using deepfakes is essential for protecting individuals and organizations, promoting trust in the technology, and avoiding legal and reputational consequences.

Some common ethical principles that should be followed when using deepfake voices include:

- Obtaining Consent: Formal permission should be obtained from the voice owner before voice cloning. Public APIs should be avoided as they can result in a lack of control over who is using voice cloning technology and for what purpose.

- Background Check: Deepfake voice cloning service providers should only work with clients who have a good reputation and adhere to stringent ethical norms.

- Labeling: Any deepfake voice or synthetic content should be clearly labeled as such to avoid confusion and fraud.

- Avoiding Harmful Stereotypes: It is critical to avoid producing voice cloning that promotes regressive stereotypes and disrupts social order.
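
The consent and labeling principles above can be sketched in code. The following is a minimal illustration in Python, not an industry standard: the `ConsentRecord` fields, the `may_clone` check, and the JSON keys in `disclosure_label` are all hypothetical names chosen for this example, and the speaker and tool names are made up.

```python
import json
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class ConsentRecord:
    """Hypothetical record of a voice owner's formal permission."""
    speaker_name: str
    permitted_uses: List[str]  # e.g. ["audiobook", "dubbing"]
    expires: date              # assumption: consent is time-limited


def may_clone(consent: Optional[ConsentRecord], intended_use: str, today: date) -> bool:
    """Allow cloning only with unexpired consent that covers the intended use."""
    if consent is None:
        return False
    return intended_use in consent.permitted_uses and today <= consent.expires


def disclosure_label(speaker_name: str, tool: str, created: date) -> str:
    """Build a JSON label that marks a clip as synthetic (keys are illustrative)."""
    return json.dumps({
        "synthetic": True,
        "voice_of": speaker_name,
        "generated_with": tool,
        "created": created.isoformat(),
    })


consent = ConsentRecord("Jane Doe", ["audiobook"], date(2030, 1, 1))
if may_clone(consent, "audiobook", date(2025, 6, 1)):
    label = disclosure_label("Jane Doe", "example-voice-tool", date(2025, 6, 1))
    print(label)
```

A label like this could be stored alongside the audio file or shown to listeners. The point of the sketch is that the consent check runs before any cloning, and the disclosure travels with the output.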

Top Deepfake Voice and Synthetic Voice Generation Software

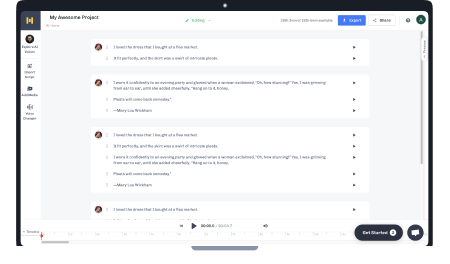

Murf AI

Murf Studio is a versatile AI voice generator that enables content creators to produce studio-quality voiceovers for various use cases with minimal time and cost. Murf offers 200+ AI voices in over 20 different languages that users can utilize to create audio content in minutes. The company also offers advanced features such as a voice changer, which converts an existing recording into a professional-grade voiceover by editing out unwanted noise, correcting errors in the voiceover, and removing filler words.

That is not all. With Murf, users can also customize their voiceover by changing the pitch, speed, and emphasis, as well as sync the generated speech with an existing video or background music, all under one platform.

Resemble AI

Resemble AI specializes in AI voices and voice cloning, with features like Resemble Localize for creating multilingual voices, Resemble Style for applying one voice's intonation and speaking style to another, Resemble Fill for creating programmatic sounds, and an Interactive API that lets programmers generate instant audio with SSML.
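
SSML (Speech Synthesis Markup Language) is a W3C standard for telling a speech engine how to read text, including pitch, speed, and pauses. As a rough illustration of what such markup looks like, the sketch below builds a small SSML string with Python's standard library. The `build_ssml` helper is a hypothetical name for this example, and it does not call Resemble's API or any other vendor's API. Which elements and attribute values a given engine supports varies, so check the provider's documentation.

```python
import xml.etree.ElementTree as ET


def build_ssml(text: str, rate: str = "medium", pitch: str = "medium",
               pause_ms: int = 0) -> str:
    """Wrap text in SSML <speak>/<prosody> markup and return it as a string."""
    speak = ET.Element("speak")
    # <prosody> controls speaking rate and pitch for the text it contains
    prosody = ET.SubElement(speak, "prosody", {"rate": rate, "pitch": pitch})
    prosody.text = text
    if pause_ms > 0:
        # <break> inserts a pause after the spoken text
        ET.SubElement(speak, "break", {"time": f"{pause_ms}ms"})
    # ElementTree escapes special characters such as & automatically
    return ET.tostring(speak, encoding="unicode")


ssml = build_ssml("Welcome back & thanks for listening.",
                  rate="slow", pitch="+10%", pause_ms=300)
print(ssml)
```

Building the markup with an XML library rather than string concatenation keeps the output well-formed when the text contains characters such as `&` or `<`.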

The company offers voice modulation technology to produce deepfakes for creating natural-sounding speech in films, animations, AI voice assistants, and personalized ads. Resemble Clone can morph your voice to narrate, sing, give dramatic performances, and even speak other languages by cloning any voice with as little as three minutes of data.

The enhanced AI models can be built within 12 minutes of data submission, providing users with high-quality, domain-specific AI voices.

Descript

Descript is an all-in-one editor that uses AI to simplify the process of media editing. Its features include fast and accurate transcription, automatic speaker detection, filler word and silence gap removal, multi-track editing, live collaboration, and auto-captioning.

Descript uses natural language processing (NLP) in its automatic speech recognition (ASR) to transcribe audio accurately, and Lyrebird AI for voice cloning and artificial voice synthesis. Its 'Overdub' feature allows users to clone their voice and add audio to an existing project without any re-recording. The tool analyzes audio samples to recreate the intricacies of a person's voice. The deepfake voice generated can be used for podcasting, voiceovers, video game production, and more.

Respeecher

Respeecher is voiceover software that uses artificial intelligence to replicate a person's voice by evaluating their speech patterns and vocal features. Respeecher utilizes language-agnostic technology for multilingual recordings, providing a flexible and adaptable solution for high-quality voice content creation.

The software also has a feature that allows you to translate speech into numerous languages while keeping the speaker's vocal characteristics. Respeecher can be used to create voice assistants, audiobooks, and virtual avatars.

iSpeech

iSpeech is a cloud-based text-to-speech tool that converts written text into a natural-sounding voice in over 30 languages. The software offers various voices and dialects and allows users to customize the tone and pace of the generated voice to their specific requirements.

The software can be used to create voice-enabled applications, e-learning modules, training data, and automated customer care solutions.

Key Takeaways

With advances in AI algorithms, it is likely that deepfakes will become more sophisticated and lifelike over time. Deepfakes have the potential to greatly enhance our lives and commercial prospects, but only with careful use and responsible development. By staying aware of potential risks in voice cloning and investing in robust detection and prevention technologies, we can help ensure that the benefits of deepfakes outweigh the risks.

Frequently Asked Questions

Is it illegal to use deepfake voices?

The legality of using deepfakes depends on the jurisdiction and the intended use. The unauthorized use and distribution of deepfakes may violate privacy or intellectual property laws.

What are the concerns associated with deepfake voices?

Deepfakes raise concerns regarding potential malicious use for impersonation, manipulation, and spreading fake news. There are also concerns about privacy, consent, and their impact on trust and credibility.

How can we use deepfakes ethically?

Ethical use of deepfakes requires obtaining consent from the voice owner and disclosing the use of the technology to relevant parties. It's also crucial to use them responsibly and avoid any use that may cause harm or deceive others. Ethical use demands proper attribution and transparency.
