LiveKit and Murf

This is the official Murf integration for LiveKit Agents, a framework for building voice and multimodal conversational AI applications. Install the livekit-murf Python package to use Murf as the TTS service in your LiveKit agents, with high-quality voice synthesis and real-time streaming.

What is livekit-murf?

livekit-murf is the official Python package that integrates Murf’s high-quality text-to-speech (TTS) capabilities with LiveKit Agents. This integration enables you to add natural-sounding voice synthesis to your LiveKit-powered conversational AI applications, supporting real-time streaming and low-latency audio generation.

Installation

You can install the Murf TTS integration for LiveKit Agents using several methods:

Using pip

The recommended way to install the package is with pip:

pip install livekit-murf

Using uv

If you’re using uv as your Python package manager, add the package to your project:

uv add livekit-murf

From source

To install from source, clone the repository and install it in development mode:

For Existing LiveKit Projects

If you already have a LiveKit project, you can add Murf TTS by passing an instance of murf.TTS() as the tts argument of your existing AgentSession. Make sure your Murf API key is set as an environment variable. You can generate one from the Murf API Dashboard.


Guide to Building Voice Agents with Murf and LiveKit

This guide provides setup instructions and examples for building your first LiveKit Agent with Murf TTS.

Setup & Requirements

Before running the examples in this guide, make sure everything is configured correctly:

Requirements

- Python >= 3.9

- livekit-agents >= 1.3.5
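Before you start an agent, you can confirm that an environment meets these requirements with a small stdlib-only check like the one below. It is a sketch, not part of livekit-murf. The helper names (parse_version, meets_minimum, check_requirements) are hypothetical, and the version comparison ignores pre-release suffixes.

```python
import re
import sys
from importlib import metadata
from typing import Dict, List, Optional, Tuple

MIN_PYTHON = (3, 9)

# Distribution names mapped to the minimum version listed above (None = any version).
REQUIRED_PACKAGES: Dict[str, Optional[str]] = {
    "livekit-agents": "1.3.5",
    "livekit-murf": None,
}


def parse_version(text: str) -> Tuple[int, ...]:
    """Extract the leading numeric release, e.g. '1.10.0rc1' -> (1, 10, 0)."""
    match = re.match(r"\d+(?:\.\d+)*", text)
    return tuple(int(part) for part in match.group(0).split(".")) if match else ()


def meets_minimum(installed: str, minimum: str) -> bool:
    """Compare release numbers, zero-padding so that '1.3' equals '1.3.0'.

    Pre-release and local suffixes are ignored, so this is coarser than
    packaging.version.Version.
    """
    a, b = parse_version(installed), parse_version(minimum)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


def check_requirements() -> List[str]:
    """Return human-readable problems; an empty list means every check passed."""
    problems: List[str] = []
    if sys.version_info < MIN_PYTHON:
        found = ".".join(str(n) for n in sys.version_info[:3])
        problems.append(f"Python >= 3.9 required, found {found}")
    for name, minimum in REQUIRED_PACKAGES.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            problems.append(f"{name} is not installed")
            continue
        if minimum is not None and not meets_minimum(installed, minimum):
            problems.append(f"{name} >= {minimum} required, found {installed}")
    return problems
```

You could call check_requirements() at the top of agent.py and print each returned problem before starting the worker.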

Required Packages

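The examples in this guide use the Murf TTS integration along with LiveKit plugins for speech-to-text (Deepgram), the language model (OpenAI), and voice activity detection (Silero), plus python-dotenv for loading environment variables. Install all packages used in the examples:

pip install "livekit-agents>=1.3.5" livekit-murf livekit-plugins-deepgram livekit-plugins-openai livekit-plugins-silero python-dotenv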

API Keys

You’ll need API keys for the services used in your LiveKit Agent:

- Murf API Key: Sign up at the Murf API Dashboard and generate an API key.

- LiveKit API Credentials: Get your LiveKit server URL, API key, and API secret from LiveKit Cloud.

- Additional Services: Depending on your setup, you may also need API keys for STT (e.g., Deepgram) and LLM (e.g., OpenAI) services.

Environment Variables

Keep your API keys out of your source code by storing them in environment variables. Create a .env file in your project root with one NAME=value line per key.

Then load these variables in your Python code with python-dotenv’s load_dotenv() function.
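A missing key usually only shows up once a plugin tries to connect. To catch it earlier, you can check the environment at startup. The sketch below loads .env through python-dotenv when it is installed and then lists any required variables that are unset. The variable names are assumptions: LIVEKIT_URL, LIVEKIT_API_KEY, LIVEKIT_API_SECRET, DEEPGRAM_API_KEY and OPENAI_API_KEY are the names those SDKs conventionally read, while MURF_API_KEY is assumed for the Murf plugin. missing_env_vars and check_environment are hypothetical helpers.

```python
import os
from typing import Iterable, List, Mapping, Optional

try:
    from dotenv import load_dotenv  # provided by the python-dotenv package
except ImportError:  # without python-dotenv, only the real environment is checked
    load_dotenv = None

# Assumed variable names; confirm them against each plugin's documentation.
REQUIRED_ENV_VARS = (
    "LIVEKIT_URL",
    "LIVEKIT_API_KEY",
    "LIVEKIT_API_SECRET",
    "MURF_API_KEY",
    "DEEPGRAM_API_KEY",
    "OPENAI_API_KEY",
)


def missing_env_vars(
    required: Iterable[str] = REQUIRED_ENV_VARS,
    env: Optional[Mapping[str, str]] = None,
) -> List[str]:
    """Return the names in `required` that are unset or empty in `env` (default: os.environ)."""
    env = os.environ if env is None else env
    return [name for name in required if not env.get(name)]


def check_environment(dotenv_path: str = ".env") -> List[str]:
    """Load `dotenv_path` (relative to the working directory) if possible, then report gaps."""
    if load_dotenv is not None:
        # Values already present in os.environ are not overridden by default.
        load_dotenv(dotenv_path)
    return missing_env_vars()
```

Calling check_environment() before creating the AgentSession lets the agent fail fast with a readable list of missing keys.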

Quick Start Example

Here’s a simple example of how to create a LiveKit Agent Worker with Murf TTS:

Save this code as agent.py and run it in console mode:

python agent.py console

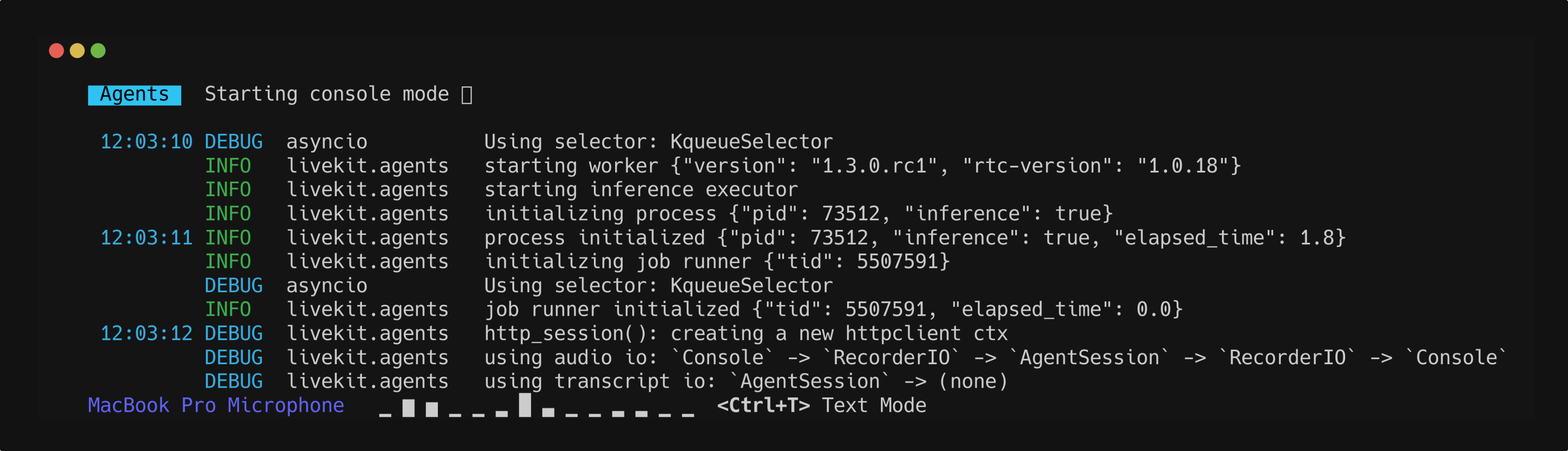

This starts the agent in console mode, where you can speak with it directly from your terminal and hear its responses in Murf’s natural-sounding voice.

Configuration

The murf.TTS class accepts configuration options that customize the voice output, including voice style, speaking rate, pitch, and audio format settings such as sample rate and channel type.
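If several agents share the same voice settings, one option is to keep those settings in a single object and unpack them into the constructor. This sketch is hypothetical throughout. MurfVoiceSettings is not part of livekit-murf, and its field names (voice, style, rate, pitch, sample_rate) are assumptions based on the options this page lists. Check the TTS parameters reference for the exact keyword names your version accepts.

```python
from dataclasses import asdict, dataclass
from typing import Any, Dict, Optional


@dataclass(frozen=True)
class MurfVoiceSettings:
    """Hypothetical bundle of murf.TTS options; None means "use the plugin default"."""

    voice: Optional[str] = None
    style: Optional[str] = None
    rate: Optional[int] = None
    pitch: Optional[int] = None
    sample_rate: Optional[int] = None

    def to_kwargs(self) -> Dict[str, Any]:
        # Omit unset options so the plugin's own defaults still apply;
        # explicit values such as 0 are kept because only None is dropped.
        return {key: value for key, value in asdict(self).items() if value is not None}
```

With the plugin installed, you would pass the result through with something like murf.TTS(**settings.to_kwargs()). Because None is filtered out, each preset overrides only the options it actually sets.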

TTS Parameters Reference

Complete Example with Custom Configuration

Here’s a more advanced example showing how to customize the Murf TTS configuration with metrics and error handling:
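The next sketch covers only the metrics side of such an example, using the standard library alone. The hypothetical TTSLatencyTracker collects time-to-first-byte (TTFB) samples for TTS requests and summarizes them. In LiveKit Agents you could feed it from the session’s metrics_collected event by recording the ttfb value of TTS metrics entries. That wiring is an assumption to verify against your livekit-agents version.

```python
import math
from dataclasses import dataclass, field
from statistics import mean
from typing import Dict, List


@dataclass
class TTSLatencyTracker:
    """Accumulates time-to-first-byte (TTFB) samples, in seconds, for TTS requests."""

    samples: List[float] = field(default_factory=list)

    def record(self, ttfb: float) -> None:
        if ttfb < 0:
            raise ValueError("ttfb must be non-negative")
        self.samples.append(ttfb)

    def summary(self) -> Dict[str, float]:
        """Return count, mean, max and nearest-rank 95th percentile of the samples."""
        if not self.samples:
            return {"count": 0}
        ordered = sorted(self.samples)
        # Nearest-rank method: the smallest sample with at least 95% of samples <= it.
        rank = math.ceil(0.95 * len(ordered))
        return {
            "count": len(ordered),
            "mean": mean(ordered),
            "max": ordered[-1],
            "p95": ordered[rank - 1],
        }
```

Tracking a high percentile alongside the mean helps expose occasional slow synthesis requests, which are the ones users notice in a live conversation.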

💡 Try it out: For complete working examples and deployment guides, check out the LiveKit Agents documentation. You can use the examples above as a starting point to build your own voice agents with Murf TTS.

Features

The Murf TTS integration for LiveKit Agents provides a comprehensive set of features for building voice applications:

- High-Quality Voice Synthesis: Leverage Murf’s advanced TTS technology with access to over 150 voices across 35+ languages

- Real-time Streaming: WebSocket-based streaming for low-latency audio generation, perfect for interactive conversations

- Voice Customization: Control voice style, rate and pitch to match your application’s needs

- Multi-Language Support: Multiple languages and locales with native speaker quality

- Agent Framework Integration: Seamless integration with LiveKit’s Agent framework for building conversational AI

- Flexible Configuration: Comprehensive audio format and quality options including sample rate, channel type, and output formats

Available Voices

Support

If you encounter any issues or have questions about the integration:

- Email: support@murf.ai

- Website: Murf API

- Documentation: Murf API Documentation

- Issues: GitHub Issues

Contributing

Contributions to the integration are welcome! To contribute, submit a pull request on the GitHub repository.

License

This project is licensed under the MIT License. See the LICENSE file for details.