WebSockets

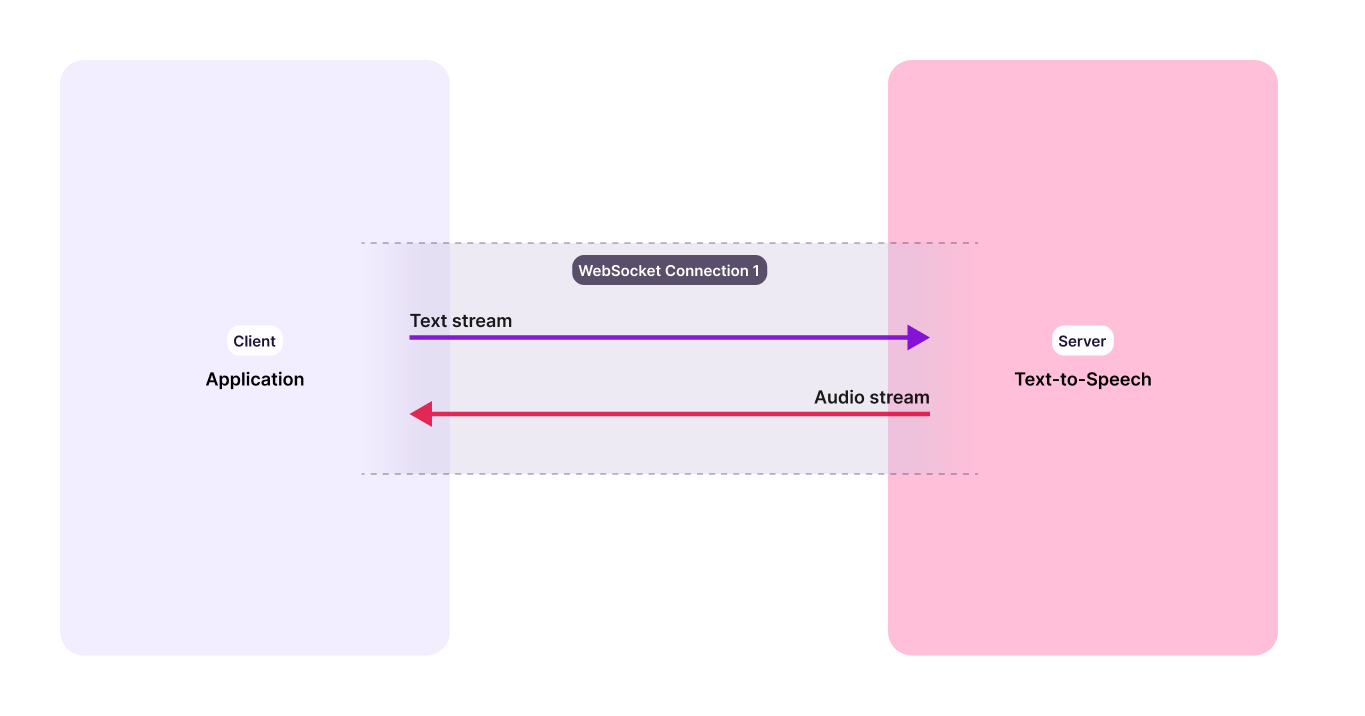

Murf TTS API supports WebSocket streaming, enabling low-latency, bidirectional communication over a persistent connection. It’s designed for building responsive voice experiences like interactive voice agents, live conversations, and other real-time applications.

New (Beta): Pass model=FALCON to use our Falcon model in text-to-speech streaming endpoints. Falcon is designed for ultra-low latency (~130 ms).
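The quickstart below selects Falcon and the audio format through query parameters on the connection URL. If you build that URL in code, encoding the parameters keeps it valid when a key contains reserved characters. This is a minimal sketch that reuses the parameter names and values from the quickstart. `build_stream_url` is a hypothetical helper, not part of any Murf SDK.

```python
from urllib.parse import urlencode

# Endpoint used in the quickstart on this page.
WS_URL = "wss://global.api.murf.ai/v1/speech/stream-input"


def build_stream_url(api_key: str, model: str = "FALCON", sample_rate: int = 24000,
                     channel_type: str = "MONO", audio_format: str = "WAV") -> str:
    """Return the WebSocket URL with every query parameter percent-encoded.

    Hypothetical helper: parameter names mirror the quickstart's URL.
    """
    params = {
        "api-key": api_key,
        "model": model,  # "FALCON" selects the low-latency Falcon model (Beta)
        "sample_rate": sample_rate,
        "channel_type": channel_type,
        "format": audio_format,
    }
    return f"{WS_URL}?{urlencode(params)}"
```

You would then pass the result to `websockets.connect(build_stream_url(API_KEY))` in place of the hand-written f-string.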

With a single WebSocket connection, you can stream text input and receive synthesized audio continuously, without the overhead of repeated HTTP requests. This makes it ideal for use cases where your application sends or receives text in chunks and needs real-time audio to deliver a smooth, conversational experience.
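To stream text in pieces instead of sending it in one message, you can send several text messages over the same connection. The quickstart sends a single message with "end": True, and its comment says "end" closes the context. This sketch therefore assumes that only the final chunk should carry "end": True. It splits a paragraph at sentence boundaries and packs sentences into chunks of roughly `max_chars` characters. `text_messages` is a hypothetical helper, and the chunk size is an arbitrary choice.

```python
import json
import re
from typing import Iterator


def text_messages(paragraph: str, max_chars: int = 120) -> Iterator[str]:
    """Yield JSON text messages; only the last one sets "end": True.

    Assumption: the server accepts several {"text": ...} messages per context
    and "end": True on the final one closes that context.
    """
    # Split after sentence-ending punctuation, then greedily pack sentences.
    sentences = re.split(r"(?<=[.!?])\s+", paragraph.strip())
    chunks: list[str] = []
    current = ""
    for sentence in sentences:
        if current and len(current) + 1 + len(sentence) > max_chars:
            chunks.append(current)
            current = sentence
        else:
            # A single sentence longer than max_chars becomes its own chunk.
            current = f"{current} {sentence}".strip()
    if current:
        chunks.append(current)

    for i, chunk in enumerate(chunks):
        msg = {"text": chunk}
        if i == len(chunks) - 1:
            msg["end"] = True  # close the context after the final chunk
        yield json.dumps(msg)
```

Inside the quickstart's `tts_stream`, the single text send could become `for msg in text_messages(PARAGRAPH): await ws.send(msg)`.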

Quickstart

This guide walks you through setting up and making your first WebSocket streaming request.

Getting Started

Generate an API key here. Store the key in a secure location, as you’ll need it to authenticate your requests. You can optionally save the key as an environment variable in your terminal.

```
$ # Export an environment variable on macOS or Linux systems
$ export MURF_API_KEY="your_api_key_here"
```
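Once the variable is exported, your Python code can read the key from the environment instead of hard-coding it. This is a minimal sketch. `load_api_key` is a hypothetical helper name.

```python
import os


def load_api_key(var: str = "MURF_API_KEY") -> str:
    """Read the Murf API key from the environment, failing loudly if it is missing."""
    key = os.environ.get(var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {var} environment variable to your Murf API key.")
    return key
```

Raising an error early makes a missing key obvious, rather than letting the connection fail later with an authentication error.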

Install required packages

This guide uses the websockets and pyaudio Python packages. The websockets package is required for the core functionality.

Note:

pyaudio is used in this quickstart guide to demonstrate playing the audio received from the WebSocket. You do not need it to use Murf WebSockets if you handle or play the audio stream some other way.

Because pyaudio depends on PortAudio, you may need to install PortAudio first.
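For example, if you would rather save the stream to disk than play it, Python's standard-library `wave` module can write the decoded audio chunks to a file. This is a minimal sketch. It assumes the chunks are raw 16-bit mono PCM at 24 kHz with the WAV header already removed, which matches the quickstart's playback settings. `write_pcm_to_wav` is a hypothetical helper.

```python
import io
import wave


def write_pcm_to_wav(pcm_chunks, out, sample_rate=24000, channels=1, sample_width=2):
    """Write raw PCM chunks (WAV headers already stripped) to `out`.

    `out` may be a filename or a writable, seekable binary file object.
    """
    with wave.open(out, "wb") as wav:
        wav.setnchannels(channels)
        wav.setsampwidth(sample_width)  # 2 bytes per sample = 16-bit, like pyaudio.paInt16
        wav.setframerate(sample_rate)
        for chunk in pcm_chunks:
            wav.writeframes(chunk)


if __name__ == "__main__":
    # Demo with in-memory silence instead of real WebSocket audio
    buf = io.BytesIO()
    write_pcm_to_wav([b"\x00\x00" * 2400], buf)  # 0.1 s of silence at 24 kHz
    print(f"{len(buf.getvalue())} bytes of WAV data")
```

In a real client you would collect the decoded `audio` payloads in a list, or pass a generator, and call `write_pcm_to_wav(chunks, "output.wav")`.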

Installing PortAudio (for PyAudio)

PyAudio depends on PortAudio, a cross-platform audio I/O library. You may need to install PortAudio separately if it’s not already on your system.

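macOS

```
$ brew install portaudio
```

Linux (Debian/Ubuntu)

```
$ sudo apt-get install portaudio19-dev
```

Windows

The PyAudio wheels published on PyPI for Windows include PortAudio, so you can usually skip this step and install PyAudio directly with pip.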

Once you have installed PortAudio, you can install the required Python packages using the following command:

```
$ pip install websockets pyaudio
```

Streaming Text and Playing Synthesized Audio

```python
import asyncio
import base64
import json
import os

import pyaudio
import websockets

# Reads MURF_API_KEY if you exported it; otherwise replace the placeholder
API_KEY = os.getenv("MURF_API_KEY", "YOUR_API_KEY")
WS_URL = "wss://global.api.murf.ai/v1/speech/stream-input"
PARAGRAPH = "With a single WebSocket connection, you can stream text input and receive synthesized audio continuously, without the overhead of repeated HTTP requests. This makes it ideal for use cases where your application sends or receives text in chunks and needs real-time audio to deliver a smooth, conversational experience."

# Audio format settings (must match the sample_rate and channel_type requested in the URL)
SAMPLE_RATE = 24000
CHANNELS = 1
FORMAT = pyaudio.paInt16
WAV_HEADER_SIZE = 44  # length of a canonical WAV header


async def tts_stream():
    async with websockets.connect(
        f"{WS_URL}?api-key={API_KEY}&model=FALCON&sample_rate={SAMPLE_RATE}&channel_type=MONO&format=WAV"
    ) as ws:
        # Send the voice config first (optional)
        voice_config_msg = {
            "voice_config": {
                "voiceId": "Matthew",
                "multiNativeLocale": "en-US",
                "style": "Conversation",
                "rate": 0,
                "pitch": 0,
                "variation": 1,
            }
        }
        print(f"Sending payload: {voice_config_msg}")
        await ws.send(json.dumps(voice_config_msg))

        # Send the text in one message (or split it across several messages to stream it)
        text_msg = {
            "text": PARAGRAPH,
            "end": True,  # Closes the context, freeing the concurrency slot so you can re-run the script
        }
        print(f"Sending payload: {text_msg}")
        await ws.send(json.dumps(text_msg))

        # Set up the audio output stream
        pa = pyaudio.PyAudio()
        stream = pa.open(format=FORMAT, channels=CHANNELS, rate=SAMPLE_RATE, output=True)

        first_chunk = True
        try:
            while True:
                response = await ws.recv()
                data = json.loads(response)
                # Log message keys rather than the (large) base64 audio payload
                print(f"Received message with keys: {list(data)}")
                if "audio" in data:
                    audio_bytes = base64.b64decode(data["audio"])
                    # Only the first chunk starts with the WAV header; strip it exactly once
                    if first_chunk:
                        audio_bytes = audio_bytes[WAV_HEADER_SIZE:]
                        first_chunk = False
                    if audio_bytes:
                        stream.write(audio_bytes)
                if data.get("final"):
                    break
        finally:
            stream.stop_stream()
            stream.close()
            pa.terminate()


if __name__ == "__main__":
    asyncio.run(tts_stream())
```
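The quickstart drops a fixed 44 bytes from the first audio chunk. That works only if the whole header arrives in the first message and uses the canonical 44-byte RIFF/WAVE layout. If you would rather check the header than trust it, here is a minimal sketch. It buffers bytes until 44 have arrived, verifies the RIFF/WAVE markers, and records the channel count, sample rate, and bit depth so you can compare them with your playback settings. `WavHeaderStripper` is a hypothetical helper. The 44-byte layout with no extra chunks is an assumption, not something this page guarantees.

```python
import struct


class WavHeaderStripper:
    """Remove a leading canonical 44-byte WAV header from a stream of chunks.

    Assumption: the stream starts with a plain RIFF/WAVE header (fmt + data
    chunks only), as the quickstart's fixed 44-byte slice also assumes.
    """

    HEADER_SIZE = 44

    def __init__(self):
        self._pending = b""  # header bytes collected so far
        self.header = None   # (channels, sample_rate, bits_per_sample) once parsed

    def feed(self, chunk: bytes) -> bytes:
        """Return the PCM bytes contained in `chunk`, buffering until the header is complete."""
        if self.header is not None:
            return chunk
        self._pending += chunk
        if len(self._pending) < self.HEADER_SIZE:
            return b""
        head = self._pending[:self.HEADER_SIZE]
        pcm = self._pending[self.HEADER_SIZE:]
        self._pending = b""
        if head[0:4] != b"RIFF" or head[8:12] != b"WAVE":
            raise ValueError("stream does not start with a RIFF/WAVE header")
        # Canonical layout: channels at offset 22, sample rate at 24, bits per sample at 34.
        channels, sample_rate = struct.unpack_from("<HI", head, 22)
        (bits,) = struct.unpack_from("<H", head, 34)
        self.header = (channels, sample_rate, bits)
        return pcm
```

In the receive loop, you would create one stripper per connection and write `stripper.feed(base64.b64decode(data["audio"]))` instead of using the `first_chunk` flag. Once `stripper.header` is set, you can check that it equals `(1, 24000, 16)` before trusting the playback settings.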

Falcon Supported Voices
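Each table in this section pairs a Voice ID with the locales that voice supports. The quickstart passes these values in its voice_config message as "voiceId" and "multiNativeLocale". This sketch assumes, as that payload suggests, that "multiNativeLocale" selects one of the voice's supported locales. It checks the combination against a small excerpt of the tables before building the message. It also assumes "rate", "pitch", and "variation" can be omitted. `FALCON_VOICE_LOCALES` and `voice_config_message` are hypothetical names, and the dictionary covers only three voices.

```python
import json

# Excerpt of the Falcon voice tables (three voices only, not the full list).
FALCON_VOICE_LOCALES = {
    "Matthew": {"en-US"},
    "Amara": {"en-US", "fr-FR", "it-IT", "fr-CA"},
    "Alicia": {"en-US", "ta-IN", "ml-IN", "mr-IN", "id-ID", "tl-PH", "tr-TR", "pa-IN"},
}


def voice_config_message(voice_id: str, locale: str, style: str = "Conversation") -> str:
    """Build a voice_config message after checking the voice/locale pair.

    Assumption: fields omitted here (rate, pitch, variation) are optional.
    """
    supported = FALCON_VOICE_LOCALES.get(voice_id)
    if supported is None:
        raise ValueError(f"unknown voice: {voice_id}")
    if locale not in supported:
        raise ValueError(f"{voice_id} does not support {locale}; choose one of {sorted(supported)}")
    return json.dumps({
        "voice_config": {
            "voiceId": voice_id,
            "multiNativeLocale": locale,
            "style": style,  # every Falcon voice listed here supports "Conversation"
        }
    })
```

Validating on the client catches an unsupported pairing, such as Matthew with fr-FR, before anything is sent over the socket.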

English - US & Canada

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Alicia | en-US (English - US & Canada), ta-IN (Tamil - India), ml-IN (Malayalam - India), mr-IN (Marathi - India), id-ID (Indonesian - Indonesia), tl-PH (Tagalog - Philippines), tr-TR (Turkish - Turkey), pa-IN (Punjabi - India) | Conversation |
| Alina | en-US (English - US & Canada) | Conversation |
| Amara | en-US (English - US & Canada), fr-FR (French - France), it-IT (Italian - Italy), fr-CA (French - Canada) | Conversation |
| Angela | en-US (English - US & Canada) | Conversation |
| Caleb | en-US (English - US & Canada) | Conversation |
| Daisy | en-US (English - US & Canada) | Conversation |
| Delilah | en-US (English - US & Canada) | Conversation |
| Ken | en-US (English - US & Canada) | Conversation |
| Matthew | en-US (English - US & Canada) | Conversation |
| River | en-US (English - US & Canada) | Conversation |
| Ronnie | en-US (English - US & Canada), en-IN (English - India), te-IN (Telugu - India), ml-IN (Malayalam - India), gu-IN (Gujarati - India), mr-IN (Marathi - India), it-IT (Italian - Italy), id-ID (Indonesian - Indonesia), tl-PH (Tagalog - Philippines), ro-RO (Romanian - Romania) | Conversation |
| Zion | en-US (English - US & Canada), bn-IN (Bangla - India), hi-IN (Hindi - India), ta-IN (Tamil - India), it-IT (Italian - Italy), id-ID (Indonesian - Indonesia), ms-MY (Malay - Malaysia), tl-PH (Tagalog - Philippines), hr-HR (Croatian - Croatia), sk-SK (Slovak - Slovakia), pa-IN (Punjabi - India), tr-TR (Turkish - Turkey), ro-RO (Romanian - Romania), bg-BG (Bulgarian - Bulgaria), cs-CZ (Czech - Czechia), fi-FI (Finnish - Finland), th-TH (Thai - Thailand), vi-VN (Vietnamese - Vietnam), sv-SE (Swedish - Sweden) | Conversation |

English - UK

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Finley | en-UK (English - UK) | Conversation |
| Hazel | en-UK (English - UK) | Conversation |
| Ruby | en-UK (English - UK) | Conversation |

English - India

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Anisha | en-IN (English - India) | Conversation |
| Anusha | en-IN (English - India) | Conversation |
| Nikhil | en-IN (English - India) | Conversation |
| Ronnie | en-IN (English - India), en-US (English - US & Canada), te-IN (Telugu - India), ml-IN (Malayalam - India), gu-IN (Gujarati - India), mr-IN (Marathi - India), it-IT (Italian - Italy), id-ID (Indonesian - Indonesia), tl-PH (Tagalog - Philippines), ro-RO (Romanian - Romania) | Conversation |
| Samar | en-IN (English - India) | Conversation |
| Tanushree | en-IN (English - India) | Conversation |

English - Australia

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Kylie | en-AU (English - Australia) | Conversation |
| Leyton | en-AU (English - Australia) | Conversation |

French - France

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Amara | fr-FR (French - France), en-US (English - US & Canada), it-IT (Italian - Italy), fr-CA (French - Canada) | Conversation |
| Axel | fr-FR (French - France) | Conversation |
| Guillaume | fr-FR (French - France) | Conversation |

French - Canada

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Alexis | fr-CA (French - Canada) | Conversation |
| Amara | fr-CA (French - Canada), en-US (English - US & Canada), fr-FR (French - France), it-IT (Italian - Italy) | Conversation |

German - Germany

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Björn | de-DE (German - Germany) | Conversation |
| Erna | de-DE (German - Germany) | Conversation |
| Josephine | de-DE (German - Germany) | Conversation |
| Lara | de-DE (German - Germany) | Conversation |
| Lia | de-DE (German - Germany), bn-IN (Bangla - India), gu-IN (Gujarati - India), mr-IN (Marathi - India), id-ID (Indonesian - Indonesia), ms-MY (Malay - Malaysia), tr-TR (Turkish - Turkey), hr-HR (Croatian - Croatia), sk-SK (Slovak - Slovakia), pa-IN (Punjabi - India), ro-RO (Romanian - Romania), bg-BG (Bulgarian - Bulgaria), cs-CZ (Czech - Czechia), fi-FI (Finnish - Finland), el-GR (Greek - Greece), th-TH (Thai - Thailand), vi-VN (Vietnamese - Vietnam), sv-SE (Swedish - Sweden) | Conversation |
| Matthias | de-DE (German - Germany) | Conversation |
| Ralf | de-DE (German - Germany) | Conversation |

Spanish - Mexico

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Alejandro | es-MX (Spanish - Mexico) | Conversation |
| Carlos | es-MX (Spanish - Mexico) | Conversation |
| Luisa | es-MX (Spanish - Mexico) | Conversation |
| Valeria | es-MX (Spanish - Mexico) | Conversation |

Spanish - Spain

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Carla | es-ES (Spanish - Spain) | Conversation |
| Javier | es-ES (Spanish - Spain) | Conversation |

Italian - Italy

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Amara | it-IT (Italian - Italy), en-US (English - US & Canada), fr-FR (French - France), fr-CA (French - Canada) | Conversation |
| Angelo | it-IT (Italian - Italy) | Conversation |
| Giulia | it-IT (Italian - Italy) | Conversation |
| Ronnie | it-IT (Italian - Italy), en-IN (English - India), en-US (English - US & Canada), te-IN (Telugu - India), ml-IN (Malayalam - India), gu-IN (Gujarati - India), mr-IN (Marathi - India), id-ID (Indonesian - Indonesia), tl-PH (Tagalog - Philippines), ro-RO (Romanian - Romania) | Conversation |
| Zion | it-IT (Italian - Italy), bn-IN (Bangla - India), hi-IN (Hindi - India), ta-IN (Tamil - India), en-US (English - US & Canada), id-ID (Indonesian - Indonesia), ms-MY (Malay - Malaysia), tl-PH (Tagalog - Philippines), hr-HR (Croatian - Croatia), sk-SK (Slovak - Slovakia), pa-IN (Punjabi - India), tr-TR (Turkish - Turkey), ro-RO (Romanian - Romania), bg-BG (Bulgarian - Bulgaria), cs-CZ (Czech - Czechia), fi-FI (Finnish - Finland), th-TH (Thai - Thailand), vi-VN (Vietnamese - Vietnam), sv-SE (Swedish - Sweden) | Conversation |

Portuguese - Brazil

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Benício | pt-BR (Portuguese - Brazil) | Conversation |
| Eloa | pt-BR (Portuguese - Brazil) | Conversation |
| Gustavo | pt-BR (Portuguese - Brazil) | Conversation |
| Heitor | pt-BR (Portuguese - Brazil) | Conversation |
| Isadora | pt-BR (Portuguese - Brazil) | Conversation |
| Silvio | pt-BR (Portuguese - Brazil) | Conversation |
| Yago | pt-BR (Portuguese - Brazil) | Conversation |

Mandarin - China

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Baolin | zh-CN (Mandarin - China) | Conversation |
| Jiao | zh-CN (Mandarin - China) | Conversation |
| Tao | zh-CN (Mandarin - China) | Conversation |
| Wei | zh-CN (Mandarin - China) | Conversation |
| Yuxan | zh-CN (Mandarin - China) | Conversation |
| Zhang | zh-CN (Mandarin - China) | Conversation |

Dutch - Netherlands

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Dirk | nl-NL (Dutch - Netherlands) | Conversation |
| Famke | nl-NL (Dutch - Netherlands) | Conversation |
| Merel | nl-NL (Dutch - Netherlands) | Conversation |

Hindi - India

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Aman | hi-IN (Hindi - India) | Conversation |
| Karan | hi-IN (Hindi - India) | Conversation |
| Khyati | hi-IN (Hindi - India) | Conversation |
| Namrita | hi-IN (Hindi - India) | Conversation |
| Sunaina | hi-IN (Hindi - India) | Conversation |
| Zion | hi-IN (Hindi - India), bn-IN (Bangla - India), ta-IN (Tamil - India), en-US (English - US & Canada), it-IT (Italian - Italy), id-ID (Indonesian - Indonesia), ms-MY (Malay - Malaysia), tl-PH (Tagalog - Philippines), hr-HR (Croatian - Croatia), sk-SK (Slovak - Slovakia), pa-IN (Punjabi - India), tr-TR (Turkish - Turkey), ro-RO (Romanian - Romania), bg-BG (Bulgarian - Bulgaria), cs-CZ (Czech - Czechia), fi-FI (Finnish - Finland), th-TH (Thai - Thailand), vi-VN (Vietnamese - Vietnam), sv-SE (Swedish - Sweden) | Conversation |

Korean - Korea

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| JangMi | ko-KR (Korean - Korea) | Conversation |
| Jong-su | ko-KR (Korean - Korea) | Conversation |
| SangHoon | ko-KR (Korean - Korea) | Conversation |

Tamil - India

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Alicia | ta-IN (Tamil - India), en-US (English - US & Canada), ml-IN (Malayalam - India), mr-IN (Marathi - India), id-ID (Indonesian - Indonesia), tl-PH (Tagalog - Philippines), tr-TR (Turkish - Turkey), pa-IN (Punjabi - India) | Conversation |
| Murali | ta-IN (Tamil - India) | Conversation |
| Zion | ta-IN (Tamil - India), bn-IN (Bangla - India), hi-IN (Hindi - India), en-US (English - US & Canada), it-IT (Italian - Italy), id-ID (Indonesian - Indonesia), ms-MY (Malay - Malaysia), tl-PH (Tagalog - Philippines), hr-HR (Croatian - Croatia), sk-SK (Slovak - Slovakia), pa-IN (Punjabi - India), tr-TR (Turkish - Turkey), ro-RO (Romanian - Romania), bg-BG (Bulgarian - Bulgaria), cs-CZ (Czech - Czechia), fi-FI (Finnish - Finland), th-TH (Thai - Thailand), vi-VN (Vietnamese - Vietnam), sv-SE (Swedish - Sweden) | Conversation |

Polish - Poland

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Blazej | pl-PL (Polish - Poland) | Conversation |
| Jacek | pl-PL (Polish - Poland) | Conversation |
| Kasia | pl-PL (Polish - Poland) | Conversation |

Bangla - India

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Abhik | bn-IN (Bangla - India) | Conversation |
| Lia | bn-IN (Bangla - India), gu-IN (Gujarati - India), mr-IN (Marathi - India), id-ID (Indonesian - Indonesia), ms-MY (Malay - Malaysia), tr-TR (Turkish - Turkey), hr-HR (Croatian - Croatia), sk-SK (Slovak - Slovakia), pa-IN (Punjabi - India), de-DE (German - Germany), ro-RO (Romanian - Romania), bg-BG (Bulgarian - Bulgaria), cs-CZ (Czech - Czechia), fi-FI (Finnish - Finland), el-GR (Greek - Greece), th-TH (Thai - Thailand), vi-VN (Vietnamese - Vietnam), sv-SE (Swedish - Sweden) | Conversation |
| Zion | bn-IN (Bangla - India), hi-IN (Hindi - India), ta-IN (Tamil - India), en-US (English - US & Canada), it-IT (Italian - Italy), id-ID (Indonesian - Indonesia), ms-MY (Malay - Malaysia), tl-PH (Tagalog - Philippines), hr-HR (Croatian - Croatia), sk-SK (Slovak - Slovakia), pa-IN (Punjabi - India), tr-TR (Turkish - Turkey), ro-RO (Romanian - Romania), bg-BG (Bulgarian - Bulgaria), cs-CZ (Czech - Czechia), fi-FI (Finnish - Finland), th-TH (Thai - Thailand), vi-VN (Vietnamese - Vietnam), sv-SE (Swedish - Sweden) | Conversation |

Japanese - Japan

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Denki | ja-JP (Japanese - Japan) | Conversation |
| Kenji | ja-JP (Japanese - Japan) | Conversation |
| Kimi | ja-JP (Japanese - Japan) | Conversation |

Turkish - Turkey

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Alicia | tr-TR (Turkish - Turkey), ta-IN (Tamil - India), en-US (English - US & Canada), ml-IN (Malayalam - India), mr-IN (Marathi - India), id-ID (Indonesian - Indonesia), tl-PH (Tagalog - Philippines), pa-IN (Punjabi - India) | Conversation |
| Lia | tr-TR (Turkish - Turkey), bn-IN (Bangla - India), gu-IN (Gujarati - India), mr-IN (Marathi - India), id-ID (Indonesian - Indonesia), ms-MY (Malay - Malaysia), hr-HR (Croatian - Croatia), sk-SK (Slovak - Slovakia), pa-IN (Punjabi - India), de-DE (German - Germany), ro-RO (Romanian - Romania), bg-BG (Bulgarian - Bulgaria), cs-CZ (Czech - Czechia), fi-FI (Finnish - Finland), el-GR (Greek - Greece), th-TH (Thai - Thailand), vi-VN (Vietnamese - Vietnam), sv-SE (Swedish - Sweden) | Conversation |
| Zion | tr-TR (Turkish - Turkey), bn-IN (Bangla - India), hi-IN (Hindi - India), ta-IN (Tamil - India), en-US (English - US & Canada), it-IT (Italian - Italy), id-ID (Indonesian - Indonesia), ms-MY (Malay - Malaysia), tl-PH (Tagalog - Philippines), hr-HR (Croatian - Croatia), sk-SK (Slovak - Slovakia), pa-IN (Punjabi - India), ro-RO (Romanian - Romania), bg-BG (Bulgarian - Bulgaria), cs-CZ (Czech - Czechia), fi-FI (Finnish - Finland), th-TH (Thai - Thailand), vi-VN (Vietnamese - Vietnam), sv-SE (Swedish - Sweden) | Conversation |

Indonesian - Indonesia

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Alicia | id-ID (Indonesian - Indonesia), ta-IN (Tamil - India), en-US (English - US & Canada), ml-IN (Malayalam - India), mr-IN (Marathi - India), tl-PH (Tagalog - Philippines), tr-TR (Turkish - Turkey), pa-IN (Punjabi - India) | Conversation |
| Lia | id-ID (Indonesian - Indonesia), bn-IN (Bangla - India), gu-IN (Gujarati - India), mr-IN (Marathi - India), ms-MY (Malay - Malaysia), tr-TR (Turkish - Turkey), hr-HR (Croatian - Croatia), sk-SK (Slovak - Slovakia), pa-IN (Punjabi - India), de-DE (German - Germany), ro-RO (Romanian - Romania), bg-BG (Bulgarian - Bulgaria), cs-CZ (Czech - Czechia), fi-FI (Finnish - Finland), el-GR (Greek - Greece), th-TH (Thai - Thailand), vi-VN (Vietnamese - Vietnam), sv-SE (Swedish - Sweden) | Conversation |
| Ronnie | id-ID (Indonesian - Indonesia), en-IN (English - India), en-US (English - US & Canada), te-IN (Telugu - India), ml-IN (Malayalam - India), gu-IN (Gujarati - India), mr-IN (Marathi - India), it-IT (Italian - Italy), tl-PH (Tagalog - Philippines), ro-RO (Romanian - Romania) | Conversation |
| Zion | id-ID (Indonesian - Indonesia), bn-IN (Bangla - India), hi-IN (Hindi - India), ta-IN (Tamil - India), en-US (English - US & Canada), it-IT (Italian - Italy), ms-MY (Malay - Malaysia), tl-PH (Tagalog - Philippines), hr-HR (Croatian - Croatia), sk-SK (Slovak - Slovakia), pa-IN (Punjabi - India), tr-TR (Turkish - Turkey), ro-RO (Romanian - Romania), bg-BG (Bulgarian - Bulgaria), cs-CZ (Czech - Czechia), fi-FI (Finnish - Finland), th-TH (Thai - Thailand), vi-VN (Vietnamese - Vietnam), sv-SE (Swedish - Sweden) | Conversation |

Croatian - Croatia

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Lia | hr-HR (Croatian - Croatia), bn-IN (Bangla - India), gu-IN (Gujarati - India), mr-IN (Marathi - India), id-ID (Indonesian - Indonesia), ms-MY (Malay - Malaysia), tr-TR (Turkish - Turkey), sk-SK (Slovak - Slovakia), pa-IN (Punjabi - India), de-DE (German - Germany), ro-RO (Romanian - Romania), bg-BG (Bulgarian - Bulgaria), cs-CZ (Czech - Czechia), fi-FI (Finnish - Finland), el-GR (Greek - Greece), th-TH (Thai - Thailand), vi-VN (Vietnamese - Vietnam), sv-SE (Swedish - Sweden) | Conversation |
| Zion | hr-HR (Croatian - Croatia), bn-IN (Bangla - India), hi-IN (Hindi - India), ta-IN (Tamil - India), en-US (English - US & Canada), it-IT (Italian - Italy), id-ID (Indonesian - Indonesia), ms-MY (Malay - Malaysia), tl-PH (Tagalog - Philippines), sk-SK (Slovak - Slovakia), pa-IN (Punjabi - India), tr-TR (Turkish - Turkey), ro-RO (Romanian - Romania), bg-BG (Bulgarian - Bulgaria), cs-CZ (Czech - Czechia), fi-FI (Finnish - Finland), th-TH (Thai - Thailand), vi-VN (Vietnamese - Vietnam), sv-SE (Swedish - Sweden) | Conversation |

Greek - Greece

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Lia | el-GR (Greek - Greece), bn-IN (Bangla - India), gu-IN (Gujarati - India), mr-IN (Marathi - India), id-ID (Indonesian - Indonesia), ms-MY (Malay - Malaysia), tr-TR (Turkish - Turkey), hr-HR (Croatian - Croatia), sk-SK (Slovak - Slovakia), pa-IN (Punjabi - India), de-DE (German - Germany), ro-RO (Romanian - Romania), bg-BG (Bulgarian - Bulgaria), cs-CZ (Czech - Czechia), fi-FI (Finnish - Finland), th-TH (Thai - Thailand), vi-VN (Vietnamese - Vietnam), sv-SE (Swedish - Sweden) | Conversation |
| Stavros | el-GR (Greek - Greece) | Conversation |

Romanian - Romania

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Lia | ro-RO (Romanian - Romania), bn-IN (Bangla - India), gu-IN (Gujarati - India), mr-IN (Marathi - India), id-ID (Indonesian - Indonesia), ms-MY (Malay - Malaysia), tr-TR (Turkish - Turkey), hr-HR (Croatian - Croatia), sk-SK (Slovak - Slovakia), pa-IN (Punjabi - India), de-DE (German - Germany), bg-BG (Bulgarian - Bulgaria), cs-CZ (Czech - Czechia), fi-FI (Finnish - Finland), el-GR (Greek - Greece), th-TH (Thai - Thailand), vi-VN (Vietnamese - Vietnam), sv-SE (Swedish - Sweden) | Conversation |
| Ronnie | ro-RO (Romanian - Romania), en-IN (English - India), en-US (English - US & Canada), te-IN (Telugu - India), ml-IN (Malayalam - India), gu-IN (Gujarati - India), mr-IN (Marathi - India), it-IT (Italian - Italy), id-ID (Indonesian - Indonesia), tl-PH (Tagalog - Philippines) | Conversation |
| Zion | ro-RO (Romanian - Romania), bn-IN (Bangla - India), hi-IN (Hindi - India), ta-IN (Tamil - India), en-US (English - US & Canada), it-IT (Italian - Italy), id-ID (Indonesian - Indonesia), ms-MY (Malay - Malaysia), tl-PH (Tagalog - Philippines), hr-HR (Croatian - Croatia), sk-SK (Slovak - Slovakia), pa-IN (Punjabi - India), tr-TR (Turkish - Turkey), bg-BG (Bulgarian - Bulgaria), cs-CZ (Czech - Czechia), fi-FI (Finnish - Finland), th-TH (Thai - Thailand), vi-VN (Vietnamese - Vietnam), sv-SE (Swedish - Sweden) | Conversation |

Slovak - Slovakia

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Lia | sk-SK (Slovak - Slovakia), bn-IN (Bangla - India), gu-IN (Gujarati - India), mr-IN (Marathi - India), id-ID (Indonesian - Indonesia), ms-MY (Malay - Malaysia), tr-TR (Turkish - Turkey), hr-HR (Croatian - Croatia), pa-IN (Punjabi - India), de-DE (German - Germany), ro-RO (Romanian - Romania), bg-BG (Bulgarian - Bulgaria), cs-CZ (Czech - Czechia), fi-FI (Finnish - Finland), el-GR (Greek - Greece), th-TH (Thai - Thailand), vi-VN (Vietnamese - Vietnam), sv-SE (Swedish - Sweden) | Conversation |
| Zion | sk-SK (Slovak - Slovakia), bn-IN (Bangla - India), hi-IN (Hindi - India), ta-IN (Tamil - India), en-US (English - US & Canada), it-IT (Italian - Italy), id-ID (Indonesian - Indonesia), ms-MY (Malay - Malaysia), tl-PH (Tagalog - Philippines), hr-HR (Croatian - Croatia), pa-IN (Punjabi - India), tr-TR (Turkish - Turkey), ro-RO (Romanian - Romania), bg-BG (Bulgarian - Bulgaria), cs-CZ (Czech - Czechia), fi-FI (Finnish - Finland), th-TH (Thai - Thailand), vi-VN (Vietnamese - Vietnam), sv-SE (Swedish - Sweden) | Conversation |

Bulgarian - Bulgaria

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Lia | bg-BG (Bulgarian - Bulgaria), bn-IN (Bangla - India), gu-IN (Gujarati - India), mr-IN (Marathi - India), id-ID (Indonesian - Indonesia), ms-MY (Malay - Malaysia), tr-TR (Turkish - Turkey), hr-HR (Croatian - Croatia), sk-SK (Slovak - Slovakia), pa-IN (Punjabi - India), de-DE (German - Germany), ro-RO (Romanian - Romania), cs-CZ (Czech - Czechia), fi-FI (Finnish - Finland), el-GR (Greek - Greece), th-TH (Thai - Thailand), vi-VN (Vietnamese - Vietnam), sv-SE (Swedish - Sweden) | Conversation |
| Zion | bg-BG (Bulgarian - Bulgaria), bn-IN (Bangla - India), hi-IN (Hindi - India), ta-IN (Tamil - India), en-US (English - US & Canada), it-IT (Italian - Italy), id-ID (Indonesian - Indonesia), ms-MY (Malay - Malaysia), tl-PH (Tagalog - Philippines), hr-HR (Croatian - Croatia), sk-SK (Slovak - Slovakia), pa-IN (Punjabi - India), tr-TR (Turkish - Turkey), ro-RO (Romanian - Romania), cs-CZ (Czech - Czechia), fi-FI (Finnish - Finland), th-TH (Thai - Thailand), vi-VN (Vietnamese - Vietnam), sv-SE (Swedish - Sweden) | Conversation |

Czech - Czechia

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Lia | cs-CZ (Czech - Czechia), bn-IN (Bangla - India), gu-IN (Gujarati - India), mr-IN (Marathi - India), id-ID (Indonesian - Indonesia), ms-MY (Malay - Malaysia), tr-TR (Turkish - Turkey), hr-HR (Croatian - Croatia), sk-SK (Slovak - Slovakia), pa-IN (Punjabi - India), de-DE (German - Germany), ro-RO (Romanian - Romania), bg-BG (Bulgarian - Bulgaria), fi-FI (Finnish - Finland), el-GR (Greek - Greece), th-TH (Thai - Thailand), vi-VN (Vietnamese - Vietnam), sv-SE (Swedish - Sweden) | Conversation |
| Zion | cs-CZ (Czech - Czechia), bn-IN (Bangla - India), hi-IN (Hindi - India), ta-IN (Tamil - India), en-US (English - US & Canada), it-IT (Italian - Italy), id-ID (Indonesian - Indonesia), ms-MY (Malay - Malaysia), tl-PH (Tagalog - Philippines), hr-HR (Croatian - Croatia), sk-SK (Slovak - Slovakia), pa-IN (Punjabi - India), tr-TR (Turkish - Turkey), ro-RO (Romanian - Romania), bg-BG (Bulgarian - Bulgaria), fi-FI (Finnish - Finland), th-TH (Thai - Thailand), vi-VN (Vietnamese - Vietnam), sv-SE (Swedish - Sweden) | Conversation |

Gujarati - India

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Lia | gu-IN (Gujarati - India), bn-IN (Bangla - India), mr-IN (Marathi - India), id-ID (Indonesian - Indonesia), ms-MY (Malay - Malaysia), tr-TR (Turkish - Turkey), hr-HR (Croatian - Croatia), sk-SK (Slovak - Slovakia), pa-IN (Punjabi - India), de-DE (German - Germany), ro-RO (Romanian - Romania), bg-BG (Bulgarian - Bulgaria), cs-CZ (Czech - Czechia), fi-FI (Finnish - Finland), el-GR (Greek - Greece), th-TH (Thai - Thailand), vi-VN (Vietnamese - Vietnam), sv-SE (Swedish - Sweden) | Conversation |
| Ronnie | gu-IN (Gujarati - India), en-IN (English - India), en-US (English - US & Canada), te-IN (Telugu - India), ml-IN (Malayalam - India), mr-IN (Marathi - India), it-IT (Italian - Italy), id-ID (Indonesian - Indonesia), tl-PH (Tagalog - Philippines), ro-RO (Romanian - Romania) | Conversation |

Kannada - India

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Julia | kn-IN (Kannada - India), en-US (English - US & Canada) | Conversation |
| Maverick | kn-IN (Kannada - India), en-US (English - US & Canada) | Conversation |
| Rajesh | kn-IN (Kannada - India) | Conversation |

Malayalam - India

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Alicia | ml-IN (Malayalam - India), ta-IN (Tamil - India), en-US (English - US & Canada), mr-IN (Marathi - India), id-ID (Indonesian - Indonesia), tl-PH (Tagalog - Philippines), tr-TR (Turkish - Turkey), pa-IN (Punjabi - India) | Conversation |
| Ronnie | ml-IN (Malayalam - India), en-IN (English - India), en-US (English - US & Canada), te-IN (Telugu - India), gu-IN (Gujarati - India), mr-IN (Marathi - India), it-IT (Italian - Italy), id-ID (Indonesian - Indonesia), tl-PH (Tagalog - Philippines), ro-RO (Romanian - Romania) | Conversation |

Marathi - India

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Alicia | mr-IN (Marathi - India), ta-IN (Tamil - India), en-US (English - US & Canada), ml-IN (Malayalam - India), id-ID (Indonesian - Indonesia), tl-PH (Tagalog - Philippines), tr-TR (Turkish - Turkey), pa-IN (Punjabi - India) | Conversation |
| Lia | mr-IN (Marathi - India), bn-IN (Bangla - India), gu-IN (Gujarati - India), id-ID (Indonesian - Indonesia), ms-MY (Malay - Malaysia), tr-TR (Turkish - Turkey), hr-HR (Croatian - Croatia), sk-SK (Slovak - Slovakia), pa-IN (Punjabi - India), de-DE (German - Germany), ro-RO (Romanian - Romania), bg-BG (Bulgarian - Bulgaria), cs-CZ (Czech - Czechia), fi-FI (Finnish - Finland), el-GR (Greek - Greece), th-TH (Thai - Thailand), vi-VN (Vietnamese - Vietnam), sv-SE (Swedish - Sweden) | Conversation |
| Ronnie | mr-IN (Marathi - India), en-IN (English - India), en-US (English - US & Canada), te-IN (Telugu - India), ml-IN (Malayalam - India), gu-IN (Gujarati - India), it-IT (Italian - Italy), id-ID (Indonesian - Indonesia), tl-PH (Tagalog - Philippines), ro-RO (Romanian - Romania) | Conversation |
| Rujuta | mr-IN (Marathi - India) | Conversation |

Malay - Malaysia

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Lia | ms-MY (Malay - Malaysia), bn-IN (Bangla - India), gu-IN (Gujarati - India), mr-IN (Marathi - India), id-ID (Indonesian - Indonesia), tr-TR (Turkish - Turkey), hr-HR (Croatian - Croatia), sk-SK (Slovak - Slovakia), pa-IN (Punjabi - India), de-DE (German - Germany), ro-RO (Romanian - Romania), bg-BG (Bulgarian - Bulgaria), cs-CZ (Czech - Czechia), fi-FI (Finnish - Finland), el-GR (Greek - Greece), th-TH (Thai - Thailand), vi-VN (Vietnamese - Vietnam), sv-SE (Swedish - Sweden) | Conversation |
| Zion | ms-MY (Malay - Malaysia), bn-IN (Bangla - India), hi-IN (Hindi - India), ta-IN (Tamil - India), en-US (English - US & Canada), it-IT (Italian - Italy), id-ID (Indonesian - Indonesia), tl-PH (Tagalog - Philippines), hr-HR (Croatian - Croatia), sk-SK (Slovak - Slovakia), pa-IN (Punjabi - India), tr-TR (Turkish - Turkey), ro-RO (Romanian - Romania), bg-BG (Bulgarian - Bulgaria), cs-CZ (Czech - Czechia), fi-FI (Finnish - Finland), th-TH (Thai - Thailand), vi-VN (Vietnamese - Vietnam), sv-SE (Swedish - Sweden) | Conversation |

Punjabi - India

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Alicia | pa-IN (Punjabi - India), ta-IN (Tamil - India), en-US (English - US & Canada), ml-IN (Malayalam - India), mr-IN (Marathi - India), id-ID (Indonesian - Indonesia), tl-PH (Tagalog - Philippines), tr-TR (Turkish - Turkey) | Conversation |
| Harman | pa-IN (Punjabi - India) | Conversation |
| Lia | pa-IN (Punjabi - India), bn-IN (Bangla - India), gu-IN (Gujarati - India), mr-IN (Marathi - India), id-ID (Indonesian - Indonesia), ms-MY (Malay - Malaysia), tr-TR (Turkish - Turkey), hr-HR (Croatian - Croatia), sk-SK (Slovak - Slovakia), de-DE (German - Germany), ro-RO (Romanian - Romania), bg-BG (Bulgarian - Bulgaria), cs-CZ (Czech - Czechia), fi-FI (Finnish - Finland), el-GR (Greek - Greece), th-TH (Thai - Thailand), vi-VN (Vietnamese - Vietnam), sv-SE (Swedish - Sweden) | Conversation |
| Zion | pa-IN (Punjabi - India), bn-IN (Bangla - India), hi-IN (Hindi - India), ta-IN (Tamil - India), en-US (English - US & Canada), it-IT (Italian - Italy), id-ID (Indonesian - Indonesia), ms-MY (Malay - Malaysia), tl-PH (Tagalog - Philippines), hr-HR (Croatian - Croatia), sk-SK (Slovak - Slovakia), tr-TR (Turkish - Turkey), ro-RO (Romanian - Romania), bg-BG (Bulgarian - Bulgaria), cs-CZ (Czech - Czechia), fi-FI (Finnish - Finland), th-TH (Thai - Thailand), vi-VN (Vietnamese - Vietnam), sv-SE (Swedish - Sweden) | Conversation |

Swedish - Sweden

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Lia | sv-SE (Swedish - Sweden), bn-IN (Bangla - India), gu-IN (Gujarati - India), mr-IN (Marathi - India), id-ID (Indonesian - Indonesia), ms-MY (Malay - Malaysia), tr-TR (Turkish - Turkey), hr-HR (Croatian - Croatia), sk-SK (Slovak - Slovakia), pa-IN (Punjabi - India), de-DE (German - Germany), ro-RO (Romanian - Romania), bg-BG (Bulgarian - Bulgaria), cs-CZ (Czech - Czechia), fi-FI (Finnish - Finland), el-GR (Greek - Greece), th-TH (Thai - Thailand), vi-VN (Vietnamese - Vietnam) | Conversation |
| Zion | sv-SE (Swedish - Sweden), bn-IN (Bangla - India), hi-IN (Hindi - India), ta-IN (Tamil - India), en-US (English - US & Canada), it-IT (Italian - Italy), id-ID (Indonesian - Indonesia), ms-MY (Malay - Malaysia), tl-PH (Tagalog - Philippines), hr-HR (Croatian - Croatia), sk-SK (Slovak - Slovakia), pa-IN (Punjabi - India), tr-TR (Turkish - Turkey), ro-RO (Romanian - Romania), bg-BG (Bulgarian - Bulgaria), cs-CZ (Czech - Czechia), fi-FI (Finnish - Finland), th-TH (Thai - Thailand), vi-VN (Vietnamese - Vietnam) | Conversation |

Telugu - India

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Josie | te-IN (Telugu - India), en-US (English - US & Canada) | Conversation |
| Ronnie | te-IN (Telugu - India), en-IN (English - India), en-US (English - US & Canada), ml-IN (Malayalam - India), gu-IN (Gujarati - India), mr-IN (Marathi - India), it-IT (Italian - Italy), id-ID (Indonesian - Indonesia), tl-PH (Tagalog - Philippines), ro-RO (Romanian - Romania) | Conversation |

Thai - Thailand

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Lia | th-TH (Thai - Thailand), bn-IN (Bangla - India), gu-IN (Gujarati - India), mr-IN (Marathi - India), id-ID (Indonesian - Indonesia), ms-MY (Malay - Malaysia), tr-TR (Turkish - Turkey), hr-HR (Croatian - Croatia), sk-SK (Slovak - Slovakia), pa-IN (Punjabi - India), de-DE (German - Germany), ro-RO (Romanian - Romania), bg-BG (Bulgarian - Bulgaria), cs-CZ (Czech - Czechia), fi-FI (Finnish - Finland), el-GR (Greek - Greece), vi-VN (Vietnamese - Vietnam), sv-SE (Swedish - Sweden) | Conversation |
| Zion | th-TH (Thai - Thailand), bn-IN (Bangla - India), hi-IN (Hindi - India), ta-IN (Tamil - India), en-US (English - US & Canada), it-IT (Italian - Italy), id-ID (Indonesian - Indonesia), ms-MY (Malay - Malaysia), tl-PH (Tagalog - Philippines), hr-HR (Croatian - Croatia), sk-SK (Slovak - Slovakia), pa-IN (Punjabi - India), tr-TR (Turkish - Turkey), ro-RO (Romanian - Romania), bg-BG (Bulgarian - Bulgaria), cs-CZ (Czech - Czechia), fi-FI (Finnish - Finland), vi-VN (Vietnamese - Vietnam), sv-SE (Swedish - Sweden) | Conversation |

Tagalog - Philippines

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Alicia | tl-PH (Tagalog - Philippines), ta-IN (Tamil - India), en-US (English - US & Canada), ml-IN (Malayalam - India), mr-IN (Marathi - India), id-ID (Indonesian - Indonesia), tr-TR (Turkish - Turkey), pa-IN (Punjabi - India) | Conversation |
| Ronnie | tl-PH (Tagalog - Philippines), en-IN (English - India), en-US (English - US & Canada), te-IN (Telugu - India), ml-IN (Malayalam - India), gu-IN (Gujarati - India), mr-IN (Marathi - India), it-IT (Italian - Italy), id-ID (Indonesian - Indonesia), ro-RO (Romanian - Romania) | Conversation |
| Zion | tl-PH (Tagalog - Philippines), bn-IN (Bangla - India), hi-IN (Hindi - India), ta-IN (Tamil - India), en-US (English - US & Canada), it-IT (Italian - Italy), id-ID (Indonesian - Indonesia), ms-MY (Malay - Malaysia), hr-HR (Croatian - Croatia), sk-SK (Slovak - Slovakia), pa-IN (Punjabi - India), tr-TR (Turkish - Turkey), ro-RO (Romanian - Romania), bg-BG (Bulgarian - Bulgaria), cs-CZ (Czech - Czechia), fi-FI (Finnish - Finland), th-TH (Thai - Thailand), vi-VN (Vietnamese - Vietnam), sv-SE (Swedish - Sweden) | Conversation |

Vietnamese - Vietnam

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Lia | vi-VN (Vietnamese - Vietnam), bn-IN (Bangla - India), gu-IN (Gujarati - India), mr-IN (Marathi - India), id-ID (Indonesian - Indonesia), ms-MY (Malay - Malaysia), tr-TR (Turkish - Turkey), hr-HR (Croatian - Croatia), sk-SK (Slovak - Slovakia), pa-IN (Punjabi - India), de-DE (German - Germany), ro-RO (Romanian - Romania), bg-BG (Bulgarian - Bulgaria), cs-CZ (Czech - Czechia), fi-FI (Finnish - Finland), el-GR (Greek - Greece), th-TH (Thai - Thailand), sv-SE (Swedish - Sweden) | Conversation |
| Zion | vi-VN (Vietnamese - Vietnam), bn-IN (Bangla - India), hi-IN (Hindi - India), ta-IN (Tamil - India), en-US (English - US & Canada), it-IT (Italian - Italy), id-ID (Indonesian - Indonesia), ms-MY (Malay - Malaysia), tl-PH (Tagalog - Philippines), hr-HR (Croatian - Croatia), sk-SK (Slovak - Slovakia), pa-IN (Punjabi - India), tr-TR (Turkish - Turkey), ro-RO (Romanian - Romania), bg-BG (Bulgarian - Bulgaria), cs-CZ (Czech - Czechia), fi-FI (Finnish - Finland), th-TH (Thai - Thailand), sv-SE (Swedish - Sweden) | Conversation |

Finnish - Finland

| Voice ID | Supported Locales | Voice Styles |
|---|---|---|
| Lia | fi-FI (Finnish - Finland), bn-IN (Bangla - India), gu-IN (Gujarati - India), mr-IN (Marathi - India), id-ID (Indonesian - Indonesia), ms-MY (Malay - Malaysia), tr-TR (Turkish - Turkey), hr-HR (Croatian - Croatia), sk-SK (Slovak - Slovakia), pa-IN (Punjabi - India), de-DE (German - Germany), ro-RO (Romanian - Romania), bg-BG (Bulgarian - Bulgaria), cs-CZ (Czech - Czechia), el-GR (Greek - Greece), th-TH (Thai - Thailand), vi-VN (Vietnamese - Vietnam), sv-SE (Swedish - Sweden) | Conversation |
| Zion | fi-FI (Finnish - Finland), bn-IN (Bangla - India), hi-IN (Hindi - India), ta-IN (Tamil - India), en-US (English - US & Canada), it-IT (Italian - Italy), id-ID (Indonesian - Indonesia), ms-MY (Malay - Malaysia), tl-PH (Tagalog - Philippines), hr-HR (Croatian - Croatia), sk-SK (Slovak - Slovakia), pa-IN (Punjabi - India), tr-TR (Turkish - Turkey), ro-RO (Romanian - Romania), bg-BG (Bulgarian - Bulgaria), cs-CZ (Czech - Czechia), th-TH (Thai - Thailand), vi-VN (Vietnamese - Vietnam), sv-SE (Swedish - Sweden) | Conversation |

Available Regions

Use the region closest to your users for the lowest latency.

| Region (City/Area) | Endpoint |
|---|---|
| Global (Routes to the nearest server) | https://global.api.murf.ai/v1/speech/stream |
| US-East | https://us-east.api.murf.ai/v1/speech/stream |
| US-West | https://us-west.api.murf.ai/v1/speech/stream |
| India | https://in.api.murf.ai/v1/speech/stream |
| Canada | https://ca.api.murf.ai/v1/speech/stream |
| South Korea | https://kr.api.murf.ai/v1/speech/stream |
| UAE | https://me.api.murf.ai/v1/speech/stream |
| Japan | https://jp.api.murf.ai/v1/speech/stream |
| Australia | https://au.api.murf.ai/v1/speech/stream |
| EU (Central) | https://eu-central.api.murf.ai/v1/speech/stream |
| UK | https://uk.api.murf.ai/v1/speech/stream |
| South America (São Paulo) | https://sa-east.api.murf.ai/v1/speech/stream |

The Global Router automatically routes each request to the nearest region. The concurrency limit is 15 for the US-East region and 2 for all other regions. For higher concurrency, use the US-East endpoint directly or contact us to raise the limits for regional endpoints.
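If your application issues many requests in parallel, it can stay under these limits by capping in-flight streams per region on the client side. The following is a minimal sketch using asyncio.Semaphore: the limits (15 for US-East, 2 elsewhere) come from this page, while the region keys, the RegionThrottle class, and the fake_stream placeholder are hypothetical and stand in for your own request code.

```python
import asyncio
from typing import Dict

# Limits stated on this page: 15 concurrent streams for US-East, 2 for every other region.
# The region keys are hypothetical labels, not official identifiers.
REGION_LIMITS = {"us-east": 15}
DEFAULT_LIMIT = 2


class RegionThrottle:
    """Caps in-flight streams per region using one asyncio.Semaphore per region."""

    def __init__(self) -> None:
        self._semaphores: Dict[str, asyncio.Semaphore] = {}

    def _semaphore(self, region: str) -> asyncio.Semaphore:
        # Created lazily so each semaphore is made inside the running event loop.
        if region not in self._semaphores:
            limit = REGION_LIMITS.get(region, DEFAULT_LIMIT)
            self._semaphores[region] = asyncio.Semaphore(limit)
        return self._semaphores[region]

    async def run(self, region, coro_fn, *args):
        # Waits for a free slot in the region, then awaits the request coroutine.
        async with self._semaphore(region):
            return await coro_fn(*args)


async def demo(region: str, n_requests: int) -> int:
    """Launches n_requests placeholder streams and returns the peak concurrency seen."""
    throttle = RegionThrottle()
    active = 0
    peak = 0

    async def fake_stream(i: int) -> int:
        # Hypothetical stand-in for a real WebSocket TTS request.
        nonlocal active, peak
        active += 1
        peak = max(peak, active)
        await asyncio.sleep(0.01)
        active -= 1
        return i

    await asyncio.gather(*(throttle.run(region, fake_stream, i) for i in range(n_requests)))
    return peak
```

Running asyncio.run(demo("us-east", 40)) reports a peak of 15 concurrent streams, and any other region key peaks at 2. Requests above the cap queue on the client rather than exceeding the limit.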

Best Practices

The following best practices apply to the WebSocket streaming API:

- Once connected, a session stays active while it is in use and closes automatically after 3 minutes of inactivity.

- You can keep up to 10 times your streaming concurrency limit (as set by your plan's rate limits) in open WebSocket connections.

- For the lowest latency, prefer Falcon voices by setting model = FALCON.
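The first two practices reduce to simple bookkeeping on the client. The sketch below is illustrative only: it assumes the 3-minute idle timeout and the 10x connection multiplier stated above, and IdleTracker, max_connections, and the 5-second safety margin are hypothetical choices rather than part of any Murf SDK.

```python
import time

IDLE_TIMEOUT_S = 180          # sessions close after 3 minutes of inactivity
CONNECTIONS_PER_STREAM = 10   # up to 10x the streaming concurrency limit


def max_connections(concurrency_limit: int) -> int:
    """Upper bound on open WebSocket connections for a given concurrency limit."""
    return concurrency_limit * CONNECTIONS_PER_STREAM


class IdleTracker:
    """Records activity on one connection and reports when it nears the idle timeout."""

    def __init__(self, clock=time.monotonic) -> None:
        self._clock = clock                 # injectable clock, useful for testing
        self._last_activity = clock()

    def touch(self) -> None:
        # Call whenever you send text or receive audio on the connection.
        self._last_activity = self._clock()

    def seconds_idle(self) -> float:
        return self._clock() - self._last_activity

    def needs_reconnect(self, margin_s: float = 5.0) -> bool:
        # Treat the socket as expired slightly before the server-side timeout.
        return self.seconds_idle() >= IDLE_TIMEOUT_S - margin_s
```

Call touch() each time you send text or receive audio, and open a fresh connection when needs_reconnect() returns True rather than sending on a socket the server may already be closing. With a concurrency limit of 2, max_connections(2) allows 20 open connections.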

Next Steps

Use a unique identifier to track a specific TTS request, ensuring continuity in the conversation.

Fine-tune text buffering to balance audio quality and Time to First Byte (TTFB).
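As a rough illustration of both ideas, the sketch below buffers streamed text (for example, tokens from an LLM) and emits a message only at a sentence boundary or once a minimum length is reached, tagging every message in one conversational turn with the same identifier. The message fields `text`, `context_id`, and `end`, the TurnBuffer class, and the 40-character threshold are assumptions for illustration; see the guides above for the actual parameter names.

```python
import re
import uuid
from typing import Dict, List, Optional

# A fragment run ending in ., ! or ? (optionally followed by whitespace) counts as a sentence end.
SENTENCE_END = re.compile(r"[.!?]\s*$")


class TurnBuffer:
    """Accumulates text for one turn; flushes at sentence ends or once min_chars is reached."""

    def __init__(self, min_chars: int = 40, context_id: Optional[str] = None) -> None:
        self.min_chars = min_chars  # hypothetical threshold: lower favors TTFB, higher favors prosody
        self.context_id = context_id or uuid.uuid4().hex  # one identifier per turn
        self._pending = ""

    def _message(self, text: str, end: bool = False) -> Dict:
        # Field names are assumptions, not confirmed Murf message keys.
        msg = {"text": text, "context_id": self.context_id}
        if end:
            msg["end"] = True
        return msg

    def add(self, fragment: str) -> List[Dict]:
        """Adds a fragment and returns zero or one message that is ready to send."""
        self._pending += fragment
        if SENTENCE_END.search(self._pending) or len(self._pending) >= self.min_chars:
            text, self._pending = self._pending, ""
            return [self._message(text)]
        return []

    def finish(self) -> Dict:
        """Flushes any remaining text and marks the end of the turn."""
        text, self._pending = self._pending, ""
        return self._message(text, end=True)
```

Feeding "Hello" and then " there." produces a single message containing "Hello there.", so the synthesizer receives a complete sentence instead of two fragments.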

FAQs

How is WebSocket streaming different from HTTP streaming in the Murf TTS API?

WebSocket lets you stream input text and receive audio over the same persistent connection, making it truly bidirectional. In contrast, HTTP streaming is one-way: you send the full text once and receive audio while it is being generated. WebSocket is better suited to real-time, interactive use cases where text arrives in parts.
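The bidirectional pattern can be sketched as two concurrent tasks sharing one connection: one sends text chunks while the other reads audio messages. This is a minimal sketch, not Murf's reference client: the message shapes (`voice_config`, `text`, `end`, `audio`, `final`), the placeholder voice ID `en-US-natalie`, and the URL with its `api-key` and `format` parameters are assumptions, so confirm them against the API reference. `stream_turn` accepts any object with async `send()`/`recv()` methods, such as a connection from the websockets package.

```python
import asyncio
import json
import os
from typing import List, Sequence


async def stream_turn(ws, chunks: Sequence[str], voice_id: str) -> List[str]:
    """Sends text chunks while concurrently collecting base64 audio strings on one connection."""
    # Assumed message shapes; confirm the field names in the Murf WebSocket reference.
    await ws.send(json.dumps({"voice_config": {"voiceId": voice_id}}))

    async def sender() -> None:
        for i, chunk in enumerate(chunks):
            # Mark the last chunk so the server knows the turn's text is complete.
            await ws.send(json.dumps({"text": chunk, "end": i == len(chunks) - 1}))

    async def receiver() -> List[str]:
        audio: List[str] = []
        while True:
            # Assumes the server eventually sends a message with "final": true.
            data = json.loads(await ws.recv())
            if "audio" in data:
                audio.append(data["audio"])
            if data.get("final"):
                return audio

    # Both directions run at once over the same socket.
    _, audio = await asyncio.gather(sender(), receiver())
    return audio


async def main() -> None:
    # Imported here so stream_turn can be reused without the package installed.
    import websockets

    # Hypothetical URL and query parameters; use the endpoint from the API reference.
    url = ("wss://api.murf.ai/v1/speech/stream-input"
           f"?api-key={os.environ['MURF_API_KEY']}&format=WAV")
    async with websockets.connect(url) as ws:
        # "en-US-natalie" is a placeholder voice ID.
        audio = await stream_turn(ws, ["Hi! ", "How can I help you today?"], "en-US-natalie")
        print(f"received {len(audio)} audio chunks")
```

Run it with asyncio.run(main()). Because sending and receiving are separate tasks, audio for the first chunk can start arriving while later text is still being sent, which is the advantage over one-shot HTTP streaming.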

What format is the audio received over WebSocket?

The audio is streamed as a sequence of base64-encoded strings, with each message containing one chunk of the overall audio.
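Since each message carries one base64-encoded piece of the audio, the client decodes each chunk and appends the bytes in arrival order. This is a minimal sketch, assuming each chunk string is independently valid (padded) base64 and has already been extracted from its message; whether the joined bytes form a complete, playable file depends on the output format you requested. The function name is illustrative.

```python
import base64
from typing import Iterable


def decode_audio_chunks(chunks: Iterable[str]) -> bytes:
    """Decodes base64 audio chunks and concatenates them in arrival order."""
    buffer = bytearray()
    for chunk in chunks:
        # validate=True rejects non-base64 characters instead of silently discarding them.
        buffer.extend(base64.b64decode(chunk, validate=True))
    return bytes(buffer)
```

For example, decode_audio_chunks(["aGVs", "bG8="]) returns b"hello". In a real client you would pass the audio strings collected from the socket and write the result to a file or an audio device such as a PyAudio stream.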

After how long will the WebSocket connection close due to inactivity?

The WebSocket connection will automatically close after 3 minutes of inactivity.

What features can I use with the WebSocket Streaming API?

You can control style, speed, pitch and pauses.

How do I enable Falcon (Beta) over WebSockets, and who should use it?

Add model = FALCON to your WebSocket connection query (or request parameters). Falcon is optimized for ultra-low latency (~130 ms) and is ideal for interactive agents, live support, gaming, tutoring, and other real-time experiences where fast turn-taking matters.
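For example, the connection URL can be assembled with the standard library so the model parameter is encoded alongside the others. Only model = FALCON comes from this page; the base URL `wss://api.murf.ai/v1/speech/stream-input`, the `api-key` parameter name, and the build_ws_url helper are assumptions, so check the API reference for the exact names.

```python
from urllib.parse import urlencode

# Assumed WebSocket base URL; confirm against the API reference.
BASE_URL = "wss://api.murf.ai/v1/speech/stream-input"


def build_ws_url(api_key: str, model: str = "FALCON", **extra: str) -> str:
    """Returns the WebSocket URL with the model selected through the query string."""
    # "api-key" is an assumed parameter name; extra keyword arguments are appended as-is.
    params = {"api-key": api_key, "model": model, **extra}
    return f"{BASE_URL}?{urlencode(params)}"
```

The resulting string, for instance build_ws_url(os.environ["MURF_API_KEY"], format="WAV"), can be passed directly to websockets.connect().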